An opinion piece by the co-founder and CEO of Iguazio, an Israeli MLOps platform company.

August 29, 2022

Data science teams have dedicated many years to researching and developing high-performance algorithms for a variety of applications, from personalization to drug discovery and supply chain optimization.

With the rise and adoption of big data, organizations have increasingly implemented these ML approaches for real-world business applications. Proofs of concept (PoCs) have repeatedly showcased the impact of this discipline in terms of increased revenue, improved customer experience, and reduced resource waste.

But too often, these PoCs do not make it into production, making this exercise a wasted opportunity. While several factors contribute to whether a PoC eventually sees implementation, MLOps is one of the key drivers, if not the primary one.

MLOps offers a paradigm shift to a production-first approach for ML applications – that is, starting with the end in mind.

The potential benefits:

Faster end-to-end data science process

Lower cost of AI infrastructure

Increased collaboration and efficiency within and across teams

Support for scalable and efficient real-time applications

Support for big data applications within a shared and scalable feature store

Reliable and automated productionization with complete CI/CD and monitoring

The value of ML, driven by MLOps

MLOps is a discipline that unifies ML development and productionization to provide best practices and support for the end-to-end ML lifecycle. It serves as the backbone upon which organizations can operationalize ML within a scalable and agile paradigm — and where businesses can ideate and deliver ML solutions successfully.

Following a production-first approach, businesses should start investing in MLOps early to avoid incurring unnecessary ML technical debt that can come with later adoption. And advocating for MLOps should start as soon as your first ML initiative is undertaken.

Here are five steps ML practitioners and department heads can take to advocate for and lead MLOps adoption within their organization.

Step 1. Get buy-in from senior management

Any initiative needs to win and keep buy-in from senior management to succeed. To get this support, you need to showcase:

One or more successful PoCs

Why MLOps is important for your business

Why MLOps needs dedicated resources

Successful PoCs

ML initiatives typically take the shape of PoCs, which are best represented via one-page case studies that clearly state technical requirements, offline performance, and business KPIs. A good example of a standardized template is Google’s Model Cards.
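As a rough illustration, the three things such a one-pager should state can be captured in a small structure that also flags empty sections. This is only a sketch: the class name `PocCaseStudy`, its fields, and the sample values are hypothetical, and it is not the Model Cards schema.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class PocCaseStudy:
    """Hypothetical one-page PoC summary; all field names are illustrative."""

    title: str
    technical_requirements: list[str]      # e.g. ["read access to the data lake"]
    offline_performance: dict[str, float]  # e.g. {"auc": 0.9}
    business_kpis: dict[str, str]          # e.g. {"churn": "reduce by 2%"}

    def missing_sections(self) -> list[str]:
        """Return the names of sections that were left empty."""
        sections = {
            "technical_requirements": self.technical_requirements,
            "offline_performance": self.offline_performance,
            "business_kpis": self.business_kpis,
        }
        return [name for name, value in sections.items() if not value]

    def render(self) -> str:
        """Format the case study as plain text for a one-page handout."""
        lines = [self.title, "", "Technical requirements:"]
        lines += [f"  - {item}" for item in self.technical_requirements]
        lines.append("Offline performance:")
        lines += [f"  - {k}: {v}" for k, v in self.offline_performance.items()]
        lines.append("Business KPIs:")
        lines += [f"  - {k}: {v}" for k, v in self.business_kpis.items()]
        return "\n".join(lines)
```

Calling `missing_sections()` before presenting to management is a cheap way to catch a PoC write-up that reports model accuracy but never ties it to a business KPI.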

It is important to highlight technical requirements — not just business requirements — because productionizing ML pipelines involves access to multiple engineering components. These include data lakes, networking, and endpoints, which may require an inter-team effort depending on your organization’s structure.

The aim of a PoC is to illustrate the value ML could bring to the business and the corresponding engineering requirements that call for adopting MLOps. Even at this early stage, it is important to understand the full scope of engineering requirements for the eventual application at scale, to avoid costly re-engineering work.

Why MLOps is important

By one widely cited estimate, some 87% of ML projects never make it to production. There are multiple reasons why most successful PoCs are eventually abandoned:

The wide resource and knowledge gap in moving models from development to production makes ML operationalization hard to achieve; the former is the responsibility of data science, while the latter is the task of ML engineering.

PoCs are built on a subset of offline pre-aggregated data; productionization requires scaling up to live and often big data, as well as setting up the underlying infrastructure with highly optimized operations.

PoCs are built on batch data, but many production systems are real-time; you need to design entirely new processes, which have to allow for very stringent deployment requirements while keeping these critical workloads reliable and scalable.

Duplicated effort and unclear responsibilities across an organization cause initiatives to go stale and be deprioritized.

MLOps bridges these gaps by designing and building a well-defined path to production, where tools and processes are standardized within and across teams following best practices on reusability, scalability, and simplicity.

The resulting production-first approach to developing AI applications is why we need MLOps to make ML successful. An agile approach lets you demonstrate the value of ML initiatives quickly and also keep improving performance over time. This is fundamental for an experimental discipline where failing is an acceptable outcome — as long as you do it fast.

Need for dedicated resources

Organizations have invested many resources in creating well-established DevOps teams in past years. It is thus a fair question for senior stakeholders to ask, ‘why not just take advantage of these teams, who already know and manage production systems, to productionize ML pipelines too?’

While it is true that MLOps draws from many DevOps concepts, there is an important difference in concerns between the two disciplines: DevOps focuses on code ‘only,’ while MLOps also involves models and data.

Moving beyond DevOps and investing in building MLOps tools either in-house or through third-party providers are necessary to cover the novel engineering requirements of machine learning pipelines.

Step 2. Continuously involve business stakeholders

The first phase of any ML pipeline is scoping. This is where a business use case is transformed into technical requirements and the road is paved for your machine learning initiative to succeed.

Ultimately, the purpose of any ML pipeline is to provide some utility. Even the best-performing model will be useless if it is not relevant to the business.

Involving business stakeholders early in the model pipeline is thus fundamental to defining meaningful utility metrics together. These metrics are then translated into corresponding ML metrics and parameters you can use to evaluate and fine-tune the model.

When setting up monitoring within your MLOps infrastructure, you need to track both business and ML metrics. Business stakeholders should also have access to reports and dashboards for better visibility and review.
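One way to picture this is a single list of checks that holds both kinds of metric, so alerts and stakeholder reports come from the same source. The sketch below is an assumption-laden illustration: `MetricCheck`, the metric names, and the thresholds are all made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricCheck:
    """One monitored metric; names and thresholds here are hypothetical."""

    name: str
    kind: str        # "ml" or "business"
    value: float     # latest observed value
    minimum: float   # alert when the value drops below this floor


def failing_checks(checks):
    """Return the checks whose latest value is below its floor."""
    return [c for c in checks if c.value < c.minimum]


def report(checks):
    """Group check status by kind so both audiences read the same data."""
    summary = {"ml": [], "business": []}
    for c in checks:
        status = "OK" if c.value >= c.minimum else "ALERT"
        summary[c.kind].append(f"{c.name}: {c.value} ({status})")
    return summary


checks = [
    MetricCheck("precision", "ml", 0.82, 0.80),
    MetricCheck("weekly_conversions", "business", 950.0, 1000.0),
]
```

In this made-up example the model metric is healthy while the business metric is not, which is exactly the situation that goes unnoticed when only ML metrics are tracked.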

Step 3. Identify where you are in the MLOps journey

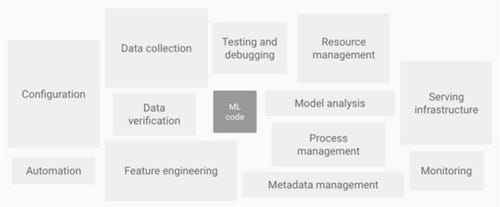

MLOps infrastructures entail many components, as seen in this diagram:

Figure 1:  Components of an MLOps infrastructure (Source: Hidden Technical Debt in Machine Learning Systems)

Components of an MLOps infrastructure (Source: Hidden Technical Debt in Machine Learning Systems)

Introducing these components is an iterative process in which organizations look at their ML pipeline needs and come up with a plan of adoption.

Following Google’s framework, we can refer to this process as an MLOps journey that leads to higher maturity levels of MLOps best practices.

At the first maturity level, this infrastructure is minimal: Model training is a manual process based on a data snapshot, and model deployment involves creating a single prediction service.

As teams progress in their MLOps journey and achieve greater maturity, automation is introduced first for the training pipeline and then for the serving pipeline. More best practices are embedded, such as model blessing (validating a candidate model before it is promoted) and data drift detection, ultimately leading to a complete and fully automated CI/CD pipeline with monitoring and built-in fault tolerance.
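To make two of these practices concrete, here is a minimal sketch of a drift check and a promotion gate. It assumes the Population Stability Index (PSI) as the drift measure, which is one common choice rather than anything this article prescribes. The function names, the 0.2 cutoff (a frequently quoted rule of thumb), and the sample data are assumptions for illustration.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a training sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # Floor each share at eps so the logarithm below stays defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def bless(candidate_score, baseline_score, drift, min_gain=0.0, max_drift=0.2):
    """Promote a candidate only if it matches or beats the baseline and inputs look stable."""
    return candidate_score >= baseline_score + min_gain and drift <= max_drift


train = [i / 100 for i in range(100)]       # made-up training feature values
live = [0.5 + i / 200 for i in range(100)]  # made-up live values, shifted upward
```

With these samples, `bless(0.91, 0.90, psi(train, live))` refuses the promotion even though the candidate scores higher offline, because the live inputs no longer resemble the training data.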

If you have one pipeline to productionize, it is best to develop mature end-to-end MLOps processes and tools now, either in-house or through third parties. The latter option helps minimize the engineering overhead of learning, developing, and maintaining the vast and ever-changing suite of MLOps tools and practices.

If you have multiple pipelines to productionize and you want to embrace MLOps best practices, a smart approach is to take a highly representative pipeline and develop the end-to-end MLOps processes and tools required to reliably support it. You can then iteratively expand the infrastructure with other high-priority pipelines while taking advantage of modularity and containerization.

When designing an actionable MLOps plan, it is important to:

Prioritize consistency, reliability, and scalability, even if that means reducing the possible paths to production.

Design not only the technological processes but also the intra- and inter-team processes.

Step 4. Create a well-defined path to production

An MLOps-enabled production-first approach to developing AI applications is the key to continuous success in ML.

When expanding an end-to-end MLOps infrastructure to support multiple pipelines, you must keep the production system reliable and easy to maintain. This can prove difficult for ML applications where data formats, algorithmic approaches, and frameworks are so varied and continuously changing.

As a solution, it is often recommended that you allow for full freedom during model experimentation but limit the ways in which a model pipeline can be productionized.

Data scientists should have access to templated pipelines and clear documentation to guide model development for production. Machine learning engineers should also regularly gather requirements to prioritize updates in support of the MLOps infrastructure.
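A templated pipeline can be as simple as a base class that fixes the path to production while leaving each step open. The sketch below is hypothetical throughout: `PipelineTemplate`, its step names, and the toy `MeanBaseline` model are illustrations, not a reference to any particular MLOps tool.

```python
from abc import ABC, abstractmethod


class PipelineTemplate(ABC):
    """Hypothetical template: the production path is fixed, the steps are not."""

    @abstractmethod
    def load_data(self): ...

    @abstractmethod
    def train(self, data): ...

    @abstractmethod
    def evaluate(self, model, data) -> float: ...

    def run(self, min_score: float):
        """The single supported path: load, train, evaluate, then gate."""
        data = self.load_data()
        model = self.train(data)
        score = self.evaluate(model, data)
        if score < min_score:
            raise ValueError(f"score {score:.2f} is below the required {min_score:.2f}")
        return model


class MeanBaseline(PipelineTemplate):
    """Toy pipeline that predicts the mean of the observed values."""

    def load_data(self):
        return [2.0, 4.0, 6.0]  # made-up data for the example

    def train(self, data):
        return sum(data) / len(data)

    def evaluate(self, model, data):
        # Score = 1 / (1 + mean absolute error); higher is better.
        # Scoring on the training data keeps the toy short; a real pipeline
        # would use held-out data.
        mae = sum(abs(x - model) for x in data) / len(data)
        return 1 / (1 + mae)
```

Data scientists stay free to put anything inside `load_data`, `train`, and `evaluate`, while the engineering team maintains only one `run` path.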

Step 5. Define how teams collaborate

The end-to-end ML lifecycle involves many teams: data scientists, MLOps engineers, DevOps engineers, and various business stakeholders.

As part of any successful MLOps initiative, all parties should agree on responsibilities and collaboration within and across these teams.

A RACI (responsible, accountable, consulted and informed) matrix highlighting the assigned responsibilities throughout an end-to-end ML lifecycle for all parties is a great tool. It allows everyone to know when to act and interact concerning the various components and processes involved in your MLOps infrastructure.
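A RACI matrix is easy to keep honest if it is stored as data and checked automatically. The sketch below assumes the usual convention of exactly one Accountable party and at least one Responsible party per activity. The role names follow the teams listed above, but the activities and assignments are invented for illustration.

```python
ROLES = ("data_science", "mlops_engineering", "devops_engineering", "business")

# Hypothetical assignments; each cell holds one or more of the letters R, A, C, I.
RACI = {
    "scoping": {"data_science": "R", "mlops_engineering": "C",
                "devops_engineering": "I", "business": "A"},
    "training": {"data_science": "AR", "mlops_engineering": "C",
                 "devops_engineering": "I", "business": "I"},
    "deployment": {"data_science": "C", "mlops_engineering": "AR",
                   "devops_engineering": "R", "business": "I"},
    "monitoring": {"data_science": "R", "mlops_engineering": "A",
                   "devops_engineering": "C", "business": "I"},
}


def raci_problems(matrix, roles=ROLES):
    """Return human-readable problems; an empty list means the matrix is valid."""
    problems = []
    for activity, assignments in matrix.items():
        unknown = set(assignments) - set(roles)
        if unknown:
            problems.append(f"{activity}: unknown roles {sorted(unknown)}")
        accountable = [r for r, letters in assignments.items() if "A" in letters]
        responsible = [r for r, letters in assignments.items() if "R" in letters]
        if len(accountable) != 1:
            problems.append(
                f"{activity}: needs exactly one Accountable, found {len(accountable)}"
            )
        if not responsible:
            problems.append(f"{activity}: needs at least one Responsible")
    return problems
```

Running `raci_problems(RACI)` whenever the matrix changes catches the most common failure, an activity that everyone is consulted on but nobody owns.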

The resulting seamless team collaboration ensures agile development, smooth handovers, efficient monitoring, and full visibility, which in turn minimizes time to production and revenue.
