It's a bird, it's a plane, it's a classified flying object

Featuring in-depth explanations and an interview with AiBUY about its computer vision classification applications in retail and eCommerce

July 9, 2020

Sponsored Content

Image classification is one of the fundamental computer vision tasks. Just as humans can categorize images and objects into distinct classes, different applications require machines to distinguish among different object categories.

Given an input image and a set of predefined labels (e.g. {bird, plane, superman}), a classification algorithm should be able to identify image features and assign a class score to the image.
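To make the idea of a class score concrete, here is a minimal sketch. It assumes the model produces one raw score per label and that those scores are turned into probabilities with a softmax; the `raw_scores` values are invented for illustration and do not come from a real model.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    shift = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

labels = ["bird", "plane", "superman"]
raw_scores = [2.0, 1.0, 0.1]  # hypothetical model outputs, one per label

# Pair each label with its probability and pick the most likely one.
class_scores = dict(zip(labels, softmax(raw_scores)))
predicted = max(class_scores, key=class_scores.get)
print(predicted, class_scores)
```

The label with the highest score becomes the prediction, while the remaining scores indicate how confident the classifier is in that choice.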

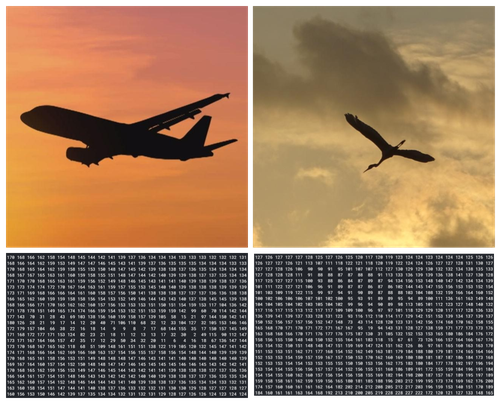

That sounds relatively easy, doesn’t it? Well, yes, until you are reminded of how computers perceive images: as matrices containing numbers.

Image by Author

The semantic gap: humans can easily perceive image contents and categorize objects. However, algorithms need to deal with image representations and learn meaning based on numbers alone. Impressive, right?
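As a rough illustration of what "matrices containing numbers" means, the sketch below builds a tiny grayscale image by hand. The pixel values are made up, and a real image would have far more rows and columns.

```python
# A hypothetical 4x4 grayscale "image": 0 is black, 255 is white.
image = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [30, 30, 200, 200],
    [30, 30, 200, 200],
]

height = len(image)
width = len(image[0])

# All the algorithm can do is arithmetic on the numbers, e.g. average brightness.
mean_intensity = sum(sum(row) for row in image) / (height * width)

# A color image adds a third axis: each pixel holds (R, G, B) values.
rgb_pixel = (255, 0, 0)  # pure red

print(height, width, mean_intensity)
```

Nothing in these numbers says "bird" or "plane"; any such meaning has to be learned from patterns across many examples.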

Apart from the semantic gap, there are plenty of other challenges when tackling image classification: background clutter, different illumination settings, and occlusion are just a few. However, thanks to advanced neural network architectures and widely available computational power, image classification is now often considered a solved problem. Both classical machine learning algorithms and deep learning methods can achieve high accuracy on image classification tasks, sometimes proving even more reliable than humans.

Pretty neat, right? Let us take a closer look at how it all became possible.

Background and history

Image classification algorithms have the following goal: they need to identify relevant patterns in images, and to ensure those patterns are discriminative, i.e. can be used to distinguish among different object classes.

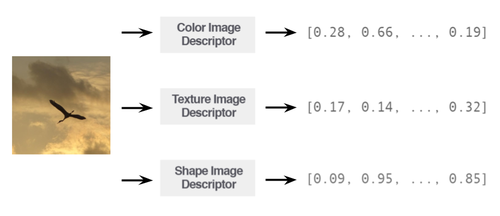

Historically, image contents were summarized by so-called feature vectors. These were manually defined, and their extraction was the very first step of a learning pipeline. The hand-crafting of features could be achieved by a variety of algorithms, each responsible for different image aspects. For example, keypoint detectors and descriptors (such as FAST, SIFT, and SURF) could represent salient regions in an image, while others aimed to encode texture, shape, or color aspects of the input image. Once these features had been extracted, the image was represented by a vector, which served as input to the machine learning algorithm.

Image by Author

Different descriptors are defined for an image, encoding different image aspects. The process is simplified, for the purpose of the illustration. Adapted from: 'Deep Learning for Computer Vision' by Adrian Rosebrock.
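A very simple hand-crafted descriptor can be sketched as follows. This is an intensity histogram, chosen only because it fits in a few lines; it is a stand-in for the idea of a feature vector, not an implementation of FAST, SIFT, or SURF. The function name, bin count, and pixel values are all assumptions made for the example.

```python
def intensity_histogram(image, bins=4, max_value=256):
    """Summarize a grayscale image as a normalized histogram (a feature vector)."""
    counts = [0] * bins
    bin_width = max_value / bins
    for row in image:
        for pixel in row:
            # Clamp the index so the brightest pixels land in the last bin.
            index = min(int(pixel / bin_width), bins - 1)
            counts[index] += 1
    total = sum(counts)
    return [count / total for count in counts]

# Hypothetical 4x4 grayscale image.
image = [
    [0, 10, 250, 255],
    [5, 20, 240, 245],
    [70, 80, 130, 140],
    [90, 100, 150, 160],
]

features = intensity_histogram(image)
print(features)
```

Whatever the image size, the result is a fixed-length vector, which is what a classical machine learning algorithm expects as input.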

However, there were a few issues with manually crafting features. First of all, the quality of the extracted features had a direct impact on the performance of the classifier. Secondly, extensive domain knowledge was required in order to assemble discriminative feature vectors. This restricted image classification work to domain experts who could define relevant class descriptors.

Convolutional Neural Networks

Convolutional Neural Networks do not require the handcrafting of features from input images. Instead, the features are learned automatically throughout the training of the (deep) neural networks. This is made possible by a series of mathematical operations applied to the input image (in the most basic architecture, a series of convolution and pooling operations, applied in this order). At each layer in the neural network, the input image is transformed into a different representation, which encodes significant features in the image. The first layers of the network learn representations of basic (low-level) features, such as edges and corners. Further down the network, layers output representations of more specific features, corresponding to parts of the object: wheels, windows, eyes, beaks. After the last layer, the representations are high-level and allow a clear separation between different objects: the network has learned to distinguish among the different classes.
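The convolution-then-pooling step can be sketched in plain Python. This is a simplified illustration: the kernel is hand-picked to respond to vertical edges, whereas a real network learns its kernel values during training and usually applies a non-linear activation between the two operations. The function names and the toy image are assumptions made for the example.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output

def max_pool2d(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [feature_map[i + di][j + dj]
                      for di in range(size) for dj in range(size)]
            row.append(max(window))
        pooled.append(row)
    return pooled

# Toy 5x5 image: dark on the left, bright on the right.
image = [[0, 0, 10, 10, 10] for _ in range(5)]

# Hand-picked kernel that reacts where brightness increases left to right.
kernel = [[-1, 1],
          [-1, 1]]

feature_map = convolve2d(image, kernel)  # 4x4 map, large values at the edge
pooled = max_pool2d(feature_map)         # 2x2 summary of the strongest responses
print(feature_map)
print(pooled)
```

The feature map is non-zero only where the dark and bright regions meet, and pooling shrinks it while keeping that strongest response, which is the kind of low-level edge feature the first layers tend to capture.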

The process described above is known as hierarchical feature learning: starting from low-level features and up to high-level features, the convolutional neural networks learn representations of concepts that build on top of each other. This is very similar to how humans perceive objects in their environment: an airplane is built of components (wings, engines, fuselage, landing gear) and those components can be further broken down into distinguishable features.

The architectural design of convolutional neural networks has a few serious implications for the learning process. Firstly, the handcrafting of features can be skipped, since the features can now be learned by the network without any expert intervention. Secondly, the hierarchical learning of features allows the use of advanced training techniques, such as transfer learning.

Use case

Vision systems are among the most popular digital sensing systems, and they are as common in industrial use as they are in daily life. Surveillance cameras, infrared scanning systems, and LiDAR technologies are all built on the capacity to model 2D and 3D environment information. Visual sensing technology lays the foundation for multidisciplinary development, from research engineering projects to large-scale industrial ventures.

Shoppable on-screen media technology

AiBUY is an onscreen shopping tool that uses computer vision to recognize products within images and videos in real time. Currently, the company is focusing on the onscreen detection of fashion items and on embedding the shopping tool to enable immediate purchase without the viewer being redirected or leaving the screen. A user would simply be watching their favorite content when an AiBUY-enabled shopping tool prompts them to purchase the items in view.

In an interview, AiBUY’s CTO, Ryan C. Scott, explained how it works: “The AiBUY system is based on a TensorFlow framework and uses Python as the main language. The training dataset holds about 400,000 images with excellent categorical markup, aggregated and prepared with the help of scripts, supplemented by 1,200,000 images of mixed or poor categorical markup quality from external Affiliate Network companies.”

Scott shared more behind-the-scenes detail on how they built it: “Firstly the focus was on detecting a person and their gender. Secondarily adding various other classes e.g. if a woman, then dresses, blouses, etc,” similar to nested categories on most fashion sites. The detected items are then looked up in a given retailer’s integrated catalog and the most relevant products are recommended. Scott explains, “By integrating directly with the retailer’s eCommerce platform, we import and synchronize the product information and ensure data like inventory levels and product variance selections are maintained.” He noted that one of the latest improvements was “the use of the Annoy library (by Spotify) to improve the performance functionality in searches of nearest neighbors for product classification.” Nonetheless, as Scott humbly remarks, “we are always reviewing our codebase to find potential bottlenecks so we can continuously evolve and adapt to the ever-changing outer world.”
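The nearest-neighbor search Scott mentions can be illustrated with a small sketch. This is not AiBUY's code and it does not use the Annoy API: it is an exhaustive cosine-similarity search over a handful of invented embedding vectors, whereas a library like Annoy builds an index so that approximate neighbors can be found quickly in much larger catalogs. The product ids, vectors, and function names are all hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Similarity of two vectors based on the angle between them."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest_products(query, catalog, k=2):
    """Return the k catalog ids whose embeddings are most similar to the query."""
    ranked = sorted(
        catalog,
        key=lambda product_id: cosine_similarity(query, catalog[product_id]),
        reverse=True,
    )
    return ranked[:k]

# Hypothetical product embeddings, e.g. produced by an image model.
catalog = {
    "red-dress": [0.9, 0.1, 0.0],
    "blue-blouse": [0.1, 0.9, 0.1],
    "crimson-gown": [0.8, 0.2, 0.1],
}

detected_item = [1.0, 0.0, 0.0]  # hypothetical embedding of an on-screen item
matches = nearest_products(detected_item, catalog)
print(matches)
```

Comparing the query against every product works for three items, but the cost grows with catalog size, which is presumably why an indexed approximate search is attractive for a real-time shopping tool.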

Ready to use neural architectures

Multiple deep neural architectures have been used over the years for tackling image classification. The ImageNet challenge offered researchers a chance to show the world their architectural breakthroughs: AlexNet, VGGNet, GoogLeNet. Year after year, the performance of the models got better, and newer models (ResNet, DenseNet) introduced new learning concepts (such as residual learning), which pushed the performance even higher.
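The core idea of residual learning is that a block outputs its input plus a learned correction, rather than a completely new representation. The sketch below is a heavily simplified illustration: it uses a single small linear layer where ResNet uses stacked convolutions, and the weights and helper names are assumptions made for the example.

```python
def relu(vector):
    """Replace negative values with zero."""
    return [max(0.0, v) for v in vector]

def linear(vector, weights):
    """Multiply a vector by a square weight matrix given as a list of rows."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]

def residual_block(x, weights):
    """Return relu(F(x) + x), where F is one linear layer followed by ReLU."""
    fx = relu(linear(x, weights))
    # The skip connection adds the unchanged input back onto F(x).
    return relu([f + xi for f, xi in zip(fx, x)])

x = [1.0, 2.0]
zero_weights = [[0.0, 0.0], [0.0, 0.0]]  # a layer that has learned nothing yet

output = residual_block(x, zero_weights)
print(output)
```

Even when the layer contributes nothing, the input still passes through the skip connection unchanged, which is one intuition for why very deep residual networks are easier to train.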

Intricate deep architectures have become ubiquitous and are used for state-of-the-art computer vision tasks. But does that mean that they need to be implemented from scratch?

Luckily not!

Ready-to-use architectures are provided by the major deep learning frameworks. PyTorch, Keras, and TensorFlow all offer options for tackling image classification (and other computer vision tasks).

Both pre-trained models and bare-bones architectures are available. Pre-trained models can be fine-tuned on a smaller dataset, while bare architectures can be trained from scratch when a lot of data is available.

Looking for an image classification solution yourself? Tell us about your image classification trials and tribulations and you could be featured in our next interview series! If you have a specific question that needs more of a personal perspective, get in touch.

Josh Miramant is the CEO and founder of Blue Orange Digital, a data science and machine learning agency with offices in New York City and Washington DC. Miramant is a popular speaker, futurist, and a strategic business & technology advisor to enterprise companies and startups. He helps organizations optimize and automate their businesses, implement data-driven analytic techniques, and understand the implications of new technologies such as artificial intelligence, big data, and the Internet of Things.

Visit BlueOrange.digital for more information and to view Case Studies.
