Microsoft Launches Phi-2: A Small Yet Mighty AI Model

Phi-2 defeated the 70 billion-parameter Llama 2 at coding and even trounced Gemini Nano, a small version of Google's latest LLM

At a Glance

- Microsoft has released technical details for Phi-2, its latest small but powerful language model.

Microsoft has launched the latest in its line of small open source language models: Phi-2. The model has just 2.7 billion parameters but outperforms models up to 25 times its size.

Phi-2 is part of Microsoft’s Phi project, an effort by its research team to build small yet powerful language models. The project includes the 1.3 billion parameter Phi-1, which Microsoft said achieved state-of-the-art performance on Python coding among existing small language models, and Phi-1.5, which is adept at commonsense reasoning and language understanding. Phi-1.5 also gained multimodal capabilities in a November update.

Microsoft CEO Satya Nadella first offered a glimpse of Phi-2 at last month's Ignite event. The company has now released the model and also shared its technical details in a research blog post.

Phi-2 is available through the Azure AI Studio model catalog and via Hugging Face. It cannot be used commercially, however. Under its Microsoft Research license, Phi-2 may only be used for non-commercial, research-oriented work, and any commercial use would violate the license terms.

AI model performance

Phi-2 is larger than prior versions of Phi and is designed to be more powerful. Microsoft says it achieves state-of-the-art performance among base language models with fewer than 13 billion parameters.

The researchers said the model also produces safer outputs than prior Phi models, even though it has not undergone alignment through reinforcement learning from human feedback (RLHF). Microsoft claims the model shows better behavior on toxicity and bias than some existing models.

To achieve Phi-2’s improved performance, Microsoft researchers focused on what they call "textbook-quality" training data, combining carefully selected web data with synthetic datasets. They also transferred knowledge from Phi-1.5 into Phi-2 to improve performance and accelerate training.

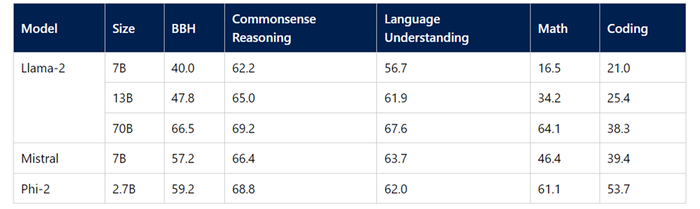

Phi-2 outperformed Meta’s Llama 2-7B and Mistral-7B across various benchmark tests covering capabilities such as commonsense reasoning. Phi-2 even surpassed the 70 billion parameter version of Llama 2 at coding.

“With its compact size, Phi-2 is an ideal playground for researchers, including for exploration around mechanistic interpretability, safety improvements, or fine-tuning experimentation on a variety of tasks,” according to Microsoft.

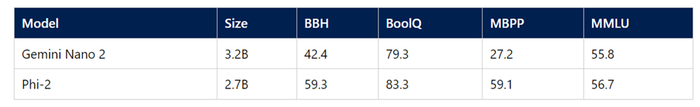

Microsoft researchers even tested Phi-2 against Gemini Nano, the small version of Google’s newly announced flagship foundation model.

Although Gemini Nano is 500 million parameters larger than Phi-2, the Microsoft model outscored it on popular benchmark tests including BoolQ, MMLU and MBPP.
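As a rough check on the size comparisons in this article, the ratios can be computed directly from the parameter counts it quotes. This is a minimal sketch: the Gemini Nano figure is derived from the "500 million parameters larger" statement rather than taken from Google's documentation, and the helper name `size_ratio` is purely illustrative.

```python
# Parameter counts in billions, as quoted in this article.
PHI_2 = 2.7
LLAMA_2_70B = 70.0
# Assumption: derived from "500 million parameters larger than Phi-2".
GEMINI_NANO = PHI_2 + 0.5


def size_ratio(larger: float, smaller: float) -> float:
    """Return how many times larger one model is than another, by parameter count."""
    return larger / smaller


ratio = size_ratio(LLAMA_2_70B, PHI_2)
print(f"Llama 2-70B is {ratio:.1f}x the size of Phi-2")
print(f"Gemini Nano is about {GEMINI_NANO:.1f}B parameters")
```

The 70 billion parameter Llama 2 works out to roughly 26 times Phi-2's size, which is consistent with the "up to 25 times" framing.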
