April 6, 2017

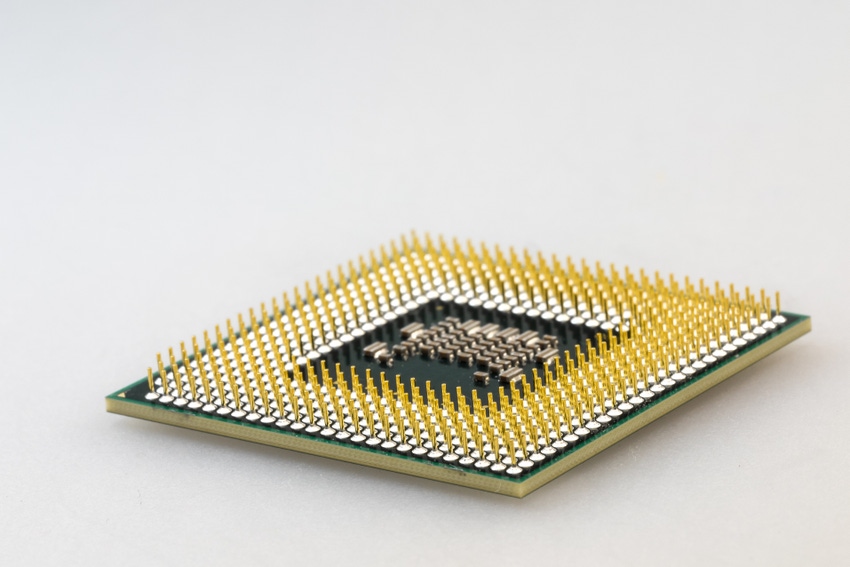

Google claims that its AI computer chips are up to 30 times faster than today's conventional CPUs and GPUs.

With AI quickly becoming the main area of focus for many of Silicon Valley's biggest technology companies, they are hard at work developing hardware that's up to the task of running such advanced software. Today's conventional CPUs and GPUs just won't do, which is why companies like NVIDIA, Intel and Google are developing computer chips specially designed for AI.

In a recent blog post, Google Cloud announced that its AI chips are 15 to 30 times faster than most of the processors currently on the market. It also claimed that these chips, known as TPUs (tensor processing units), deliver this performance whilst being far more energy-efficient.

"On our production AI workloads that utilize neural network inference, the TPU is 15x to 30x faster than contemporary GPUs and CPUs," wrote Google. The blog post went on to highlight their AI chips' energy efficiency. "The TPU also achieves much better energy efficiency than conventional chips, achieving 30x to 80x improvement in TOPS/Watt measure."

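To make the TOPS/Watt measure concrete: it is the number of tera-operations (trillions of operations) a chip performs per second, divided by the power it draws in watts. The following is a minimal Python sketch of that arithmetic. The function names and the chip figures are hypothetical, chosen only to illustrate the calculation, and are not taken from Google's post.

```python
def tops_per_watt(ops_per_second: float, watts: float) -> float:
    """Return tera-operations per second delivered per watt of power drawn."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return ops_per_second / 1e12 / watts


def improvement(candidate: float, baseline: float) -> float:
    """Return how many times better the candidate is than the baseline."""
    return candidate / baseline


# Hypothetical figures for illustration only (not measurements of any real chip).
conventional = tops_per_watt(ops_per_second=6e12, watts=300)
accelerator = tops_per_watt(ops_per_second=90e12, watts=75)
ratio = improvement(accelerator, conventional)

print(f"Conventional chip: {conventional:.2f} TOPS/Watt")
print(f"Accelerator:       {accelerator:.2f} TOPS/Watt")
print(f"Improvement:       {ratio:.0f}x")
```

With these made-up inputs, the accelerator does more work per second while drawing less power, so both factors multiply into the efficiency ratio. That is why a TOPS/Watt improvement can be larger than the raw speed-up alone.
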
Google's post went on to explain that the neural networks powering these AI applications actually require a relatively small amount of code, and that this code is based on TensorFlow, which is an "open-source machine learning framework."

Even though these chips aren't going to be in your phones or tablets any time soon, Google's already using them extensively in their data centres. These AI chips are enabling a whole series of tasks that we probably don't notice, yet are unequivocally improving our online experiences.

Google's TPUs are delivering search results, identifying your friends in photos on social media, translating text in real time, helping you to draft Gmail message replies and, most importantly, keeping spam out of your email inbox.

However, Google isn't the only company working on AI processors. Qualcomm is striving to incorporate AI technology into its mobile chips, which means that soon our phones may be able to carry out complex machine learning tasks, rather than having to wait for Google Cloud's AI chips to do the work for them.

NVIDIA has already made huge strides towards becoming the world's foremost player in AI. Intel, meanwhile, purchased AI start-up Nervana to help design custom processors that will enable it to train new AI technologies faster than ever before.
