March 20, 2017

NVIDIA is attempting to convince AI and machine-learning start-ups to opt for its hardware over the cloud services of Amazon Web Services and Microsoft.

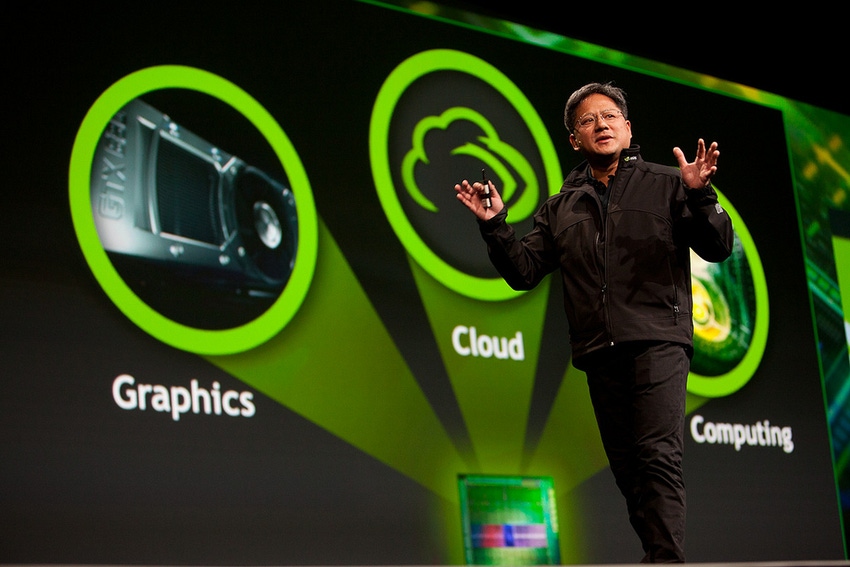

The competition to be at the very top of the AI pyramid is hotting up, and NVIDIA is continuing its bid to be THE go-to guys when it comes to developing hardware for artificial intelligence platforms. The company recently announced that it will be working with automotive supplier Bosch on AI-powered cars, and now NVIDIA is looking to get start-ups focused on machine learning and AI to use its GPUs rather than the cloud services offered by companies like AWS and Microsoft.

In an interview with Techworld, Jack Watts, startup business manager at NVIDIA, spoke of the company's new start-up program, dubbed the Inception Program, and explained that its main aim was "nurturing those startups and helping them get access to hardware and marketing lift with us, but really the whole thing is about driving this community of startups which are doing weird and wonderful things with AI and Nvidia GPUs."

According to NVIDIA's website, here's what their Inception Program will offer member start-ups:

"As an Inception member, you’ll get access to our global ecosystem—a massive network of deep learning experts and thought leaders."

"Inception members can apply for GPU hardware grants, try out the latest software, and get remote access to state-of-the-art technology."

"The NVIDIA Deep Learning Institute (DLI) will show you the latest techniques in designing, training, and deploying neural network-powered machine learning in your applications. Perks like credits and exclusive courses are also available to Inception members."

"Whether your startup is in stealth mode, prepping for an entrance, or already in market and wanting to raise visibility, you’ll have support for your marketing goals. These include blogs, podcasts, event support, videos, and a variety of other opportunities."

NVIDIA invests in next-gen AI leaders through its GPU Ventures program. Inception applicants are automatically submitted for consideration.

Rahul Amin, CTO and co-founder of the start-up Onfido, admitted that it's "hard" to decide when to buy hardware as opposed to simply putting their data directly in the cloud, and that they currently do a mixture of both. "We probably haven't found the right balance yet," he said. He continued, "GPUs have been a fundamental part of this process for doing machine learning."

Amin then went on to explain how they needed to use hardware in order to be as efficient as possible. "We started off with a singular machine with a gaming graphics card, as a startup it was all about being efficient. We started to train models for extracting text from a document and as the company grew we have introduced various servers and each development machine has GPUs in them."

"It has always been a concern how much we spend on GPUs," Amin admitted.

However, both options are expensive, and Amin explained that the key was being able to combine the two in order to get the best results while being more efficient and cost-effective. "If you spin up a machine in the cloud it is going to cost you if you run it for a long time, so balancing what is the best way to train models has been an interesting decision and a conversation we continually have in terms of optimising our hardware and providing engineers with the right level of hardware," he concluded.

Image courtesy of BagoGames
