A cube of porcupine? Sure thing

January 6, 2021

A cube of porcupine? Sure thing

OpenAI, the company behind the impressive and somewhat controversial GPT-3 language model, has revealed its latest creation: DALL-E.

A portmanteau of ‘Dali’ and Disney’s ‘WALL-E’, DALL-E is essentially GPT-3 for images – just write a prompt and the system uses its 12 billion parameters to create a corresponding image from its huge library of samples.

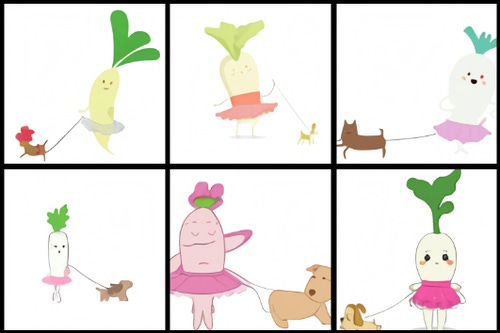

Prompts can range from the straightforward – ‘a red chair’ – to the ludicrous, as per the image above, born of the prompt: ‘An illustration of a baby daikon radish in a tutu walking a dog.’

OpenAI said in a blog post: “We’ve found that it has a diverse set of capabilities, including creating anthropomorphized versions of animals and objects, combining unrelated concepts in plausible ways, rendering text, and applying transformations to existing images.”

The scope of the project is impressive. Consider the phrase ‘a hedgehog wearing a red hat, yellow gloves, blue shirt, and green pants.’ To correctly interpret this sentence, DALL-E must not only correctly compose each piece of apparel with the animal, but also form the associations (hat, red), (gloves, yellow), (shirt, blue), and (pants, green) without mixing them up. This feature is called variable binding, and DALL-E is quite good at it, although its creators say the system is likely to become confused as more objects are introduced.

Imagery through language

Unlike a 3D rendering engine, where inputs must be specified unambiguously and in detail, DALL-E is often able to ‘fill in the blanks’ when the prompt implies that the image must contain a certain detail that is not explicitly stated.

OpenAI uses the example of ‘a painting of a capybara sitting on a field at sunrise.’ Depending on the orientation of the capybara, it may be necessary to draw a shadow, though this detail is never mentioned.

DALL-E is also a dab hand at creating fantastical objects, unlikely to exist in the real world and composed of unrelated ideas that it absorbed during training: ‘a snail with the texture of a harp’ or a ‘cube of porcupine,’ for example.

The system is far from perfect. While many prompts yield the expected outcomes, some produce images of a more distorted, nightmarish quality. The system has also – somewhat predictably – fallen victim to the superficial stereotypes that drive much of the bias that exists within AI, particularly around terms relating to flags, animals, and local cuisine.

Nonetheless, DALL-E represents a significant achievement, and shows that manipulating visual concepts through language is now within reach. Applications for such technology are vast, and OpenAI is already working on practical applications.

“We recognize that work involving generative models has the potential for significant, broad societal impacts,” the company wrote. “In the future, we plan to analyze how models like DALL-E relate to societal issues like economic impact on certain work processes and professions, the potential for bias in the model outputs, and the longer term ethical challenges implied by this technology.”

About the Author(s)

You May Also Like

.jpg?width=700&auto=webp&quality=80&disable=upscale)

.jpg?width=700&auto=webp&quality=80&disable=upscale)

.jpg?width=700&auto=webp&quality=80&disable=upscale)

.jpg?width=300&auto=webp&quality=80&disable=upscale)

.jpg?width=300&auto=webp&quality=80&disable=upscale)