While organizations invest in AI to optimize their business processes, their processes for creating AI should be efficient as well

January 18, 2022


Developing and training a production-ready AI model is time-consuming and expensive.

What are the options to overcome this? How can organizations help their data scientists work more efficiently and achieve better results?

Many AI projects face staffing challenges and financial burdens. In various brainstorming workshops, I have learned that there are many fascinating project ideas that are realistic from a machine learning perspective and for which training data would be available.

However, such projects are often not started, for two reasons. First, their business cases are not convincing: the expected financial gains and savings do not cover the expected project costs. If the savings are CHF 30’000, a data scientist cannot work exclusively on the project for five months. Second, many data science teams are understaffed. They focus on a few projects and postpone the rest.
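To make the cost argument concrete, here is a minimal Python sketch of the break-even arithmetic. Only the CHF 30’000 savings and the five months come from the text; the 20 working days per month and the CHF 1’000 daily rate are hypothetical placeholders.

```python
# Break-even sketch for an AI business case.
# WORKING_DAYS_PER_MONTH and the daily rate below are hypothetical assumptions.
WORKING_DAYS_PER_MONTH = 20


def project_cost(months: float, daily_rate_chf: float) -> float:
    """Labor cost of one data scientist working full-time for `months`."""
    return months * WORKING_DAYS_PER_MONTH * daily_rate_chf


def affordable_months(savings_chf: float, daily_rate_chf: float) -> float:
    """Longest full-time effort that the expected savings can pay for."""
    return savings_chf / (WORKING_DAYS_PER_MONTH * daily_rate_chf)


savings = 30_000  # CHF, the example savings from the text
rate = 1_000      # CHF per day, hypothetical fully loaded rate

print(project_cost(5, rate))             # 100000
print(affordable_months(savings, rate))  # 1.5
```

Under these assumptions, the savings pay for only about a month and a half of work. The business case therefore works only if the effort shrinks substantially, which is where efficiency gains come in.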

Managers can address both challenges by enabling data scientists to work more efficiently. This reduces project costs and thereby improves the cost-benefit ratio of the business case. Furthermore, the same team can handle more projects. That leaves one important question: how can you make data scientists work more efficiently? Is it possible to speed up mathematical thinking and creative modeling?

When searching for optimization potential, it is crucial to look at the complete AI and analytics process.

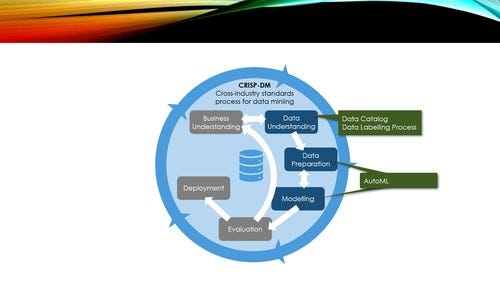

CRISP-DM is a well-known process model in this area [Cha00]. It distinguishes six steps (Figure 2): business understanding, data understanding, data preparation, modeling, evaluation, and deployment. The first step and the last two steps are important, but they are not AI-specific. When optimizing AI and analytics tasks, the three middle steps are of special interest. The data understanding step covers identifying relevant data in the various sources and understanding its semantics. Data preparation is about cleaning and transforming the data for the actual training.

The modeling step is the core AI activity everyone is aware of: training a machine learning or statistical model. Fortunately, organizations can optimize all three AI-specific steps.

Figure 4: The CRISP-DM process model and AI-related efficiency improvement options

Efficiency gains for the data understanding step

The CRISP-DM process step “data understanding” covers data collection and acquisition, understanding the actual data, and verifying its quality. For this step, there are two important optimization options: training data management and data catalogs.

A data catalog compiles information about the various data sets stored in the organization’s data lake, databases, data warehouses, etc. It helps data scientists find relevant training data, which saves them a lot of time. Furthermore, the resulting machine learning models get better because they are trained on better and more relevant data.
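As an illustration only, a data catalog can be thought of as searchable metadata about data sets. The minimal Python sketch below is a toy in-memory version with keyword search. The class names, fields, and example entries (including the `s3://example-lake/...` path) are hypothetical and do not reflect any particular catalog product.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class CatalogEntry:
    """Metadata about one data set; the fields are illustrative, not a product schema."""
    name: str
    location: str  # e.g. a database table or data lake path (hypothetical)
    description: str
    tags: Set[str] = field(default_factory=set)


class DataCatalog:
    """A toy in-memory catalog with keyword search over names, descriptions, and tags."""

    def __init__(self) -> None:
        self._entries: List[CatalogEntry] = []

    def register(self, entry: CatalogEntry) -> None:
        self._entries.append(entry)

    def search(self, keyword: str) -> List[CatalogEntry]:
        kw = keyword.lower()
        return [
            e for e in self._entries
            if kw in e.name.lower()
            or kw in e.description.lower()
            or kw in {t.lower() for t in e.tags}
        ]


catalog = DataCatalog()
catalog.register(CatalogEntry(
    "wheel_inspection_images", "s3://example-lake/inspection/",
    "Photos of steering wheels from the assembly line", {"images", "quality"}))
catalog.register(CatalogEntry(
    "sales_orders", "dwh.sales.orders",
    "Customer orders from the ERP system", {"sales"}))

print([e.name for e in catalog.search("steering")])  # ['wheel_inspection_images']
```

Real catalogs add lineage, ownership, and quality information, but the core value for data scientists is the same: finding candidate training data without asking around.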

Data catalogs help when training data exists. However, this is not always the case. Suppose data scientists work on a new AI solution that judges whether a steering wheel on an assembly line can be packaged and shipped to the customer. They need pictures of steering wheels as training data, each labeled either as “good quality” or “insufficient quality.”

Labeling training data is time-consuming, tiring, and expensive because training sets have to be large. Organizations can optimize the labeling task with services such as AWS SageMaker Ground Truth, which improves labeling in several ways:

Out-tasking the labeling: AWS-managed workers or third-party service providers label the data. This frees data scientists from labeling large data sets themselves or from having to identify and coordinate internal or external persons to help them. Out-tasking works best if the labeling does not require specialized skills. Furthermore, data privacy issues and the protection of intellectual property can limit out-tasking.

Managing the workforce: The service compiles batches of texts, pictures, etc. to be labeled and assigns them to the various internal or external contributors.

Increasing labeling quality: The service lets more than one contributor label the same pictures, texts, etc.

Reducing human labeling effort: AWS can distinguish between “easy” training data items it can label itself and challenging items that a human contributor has to label.
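The last two ideas can be illustrated with a minimal Python sketch. A majority vote consolidates several contributors’ labels for the same item, and a confidence threshold decides which items a model labels itself and which go to humans. This is a simplified stand-in, not how Ground Truth implements consolidation or automated labeling (the latter is based on active learning). The function names, the 0.5 agreement share, and the 0.9 threshold are illustrative assumptions.

```python
from collections import Counter
from typing import Dict, List, Optional, Tuple


def consensus_label(labels: List[str], min_share: float = 0.5) -> Optional[str]:
    """Majority vote over several contributors' labels for the same item.

    Returns None when no label is chosen by more than `min_share` of the
    contributors (e.g. a tie), so the item can be reviewed again.
    """
    if not labels:
        return None
    label, count = Counter(labels).most_common(1)[0]
    return label if count / len(labels) > min_share else None


def route_items(
    predictions: Dict[str, Tuple[str, float]], threshold: float = 0.9
) -> Tuple[Dict[str, str], List[str]]:
    """Split items into machine-labeled ones and ones sent to human contributors.

    `predictions` maps an item ID to a (label, confidence) pair from some model.
    """
    auto_labeled: Dict[str, str] = {}
    for_humans: List[str] = []
    for item_id, (label, confidence) in predictions.items():
        if confidence >= threshold:
            auto_labeled[item_id] = label  # "easy" item: accept the model's label
        else:
            for_humans.append(item_id)     # challenging item: ask a human
    return auto_labeled, for_humans


print(consensus_label(["good", "good", "insufficient"]))  # good
print(consensus_label(["good", "insufficient"]))          # None
auto, humans = route_items({"img1": ("good", 0.97), "img2": ("insufficient", 0.55)})
print(auto, humans)  # {'img1': 'good'} ['img2']
```

Raising the threshold shifts more items to humans and increases cost but reduces the risk of wrong automatic labels. Lowering it does the opposite.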

Organizations can achieve efficiency gains early in the process of developing an AI model by improving how they find existing training data or create new training data. Additionally or alternatively, they can improve the later steps of preparing training data and creating the AI model.

Optimizing data preparation and modeling

Data preparation is the hard and cumbersome work of getting the initial data set into a form that is useful for training an AI model. Data scientists can perform this work more efficiently if they are supported by AutoML or by AI-specific integrated development environments (IDEs).
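To show what this work typically involves, here is a minimal Python sketch of a few preparation steps for the steering wheel example: dropping incomplete records, normalizing label spelling, removing duplicate images, and encoding labels as integers. The record fields `image_path` and `label` and the file names are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Record = Dict[str, Optional[str]]


def prepare(records: List[Record]) -> Tuple[List[Tuple[str, int]], Dict[str, int]]:
    """Clean raw labeling records and encode labels as integers.

    Steps: drop incomplete records, normalize label spelling,
    remove duplicate images (first occurrence wins), map labels to IDs.
    """
    seen_paths = set()
    cleaned: List[Tuple[str, str]] = []
    for rec in records:
        path = rec.get("image_path")
        label = (rec.get("label") or "").strip().lower()
        if not path or not label:
            continue  # incomplete record
        if path in seen_paths:
            continue  # duplicate image
        seen_paths.add(path)
        cleaned.append((path, label))
    # Assign integer IDs to labels in alphabetical order for reproducibility.
    label_ids = {lab: i for i, lab in enumerate(sorted({lab for _, lab in cleaned}))}
    return [(path, label_ids[lab]) for path, lab in cleaned], label_ids


raw = [
    {"image_path": "w1.jpg", "label": "Good "},
    {"image_path": "w2.jpg", "label": "insufficient"},
    {"image_path": "w1.jpg", "label": "good"},  # duplicate image
    {"image_path": "w3.jpg", "label": None},    # missing label
]
rows, ids = prepare(raw)
print(rows)  # [('w1.jpg', 0), ('w2.jpg', 1)]
print(ids)   # {'good': 0, 'insufficient': 1}
```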

The most popular IDE is Jupyter Notebook. Many organizations use it, and commercial offerings such as AWS SageMaker are based on it. IDEs reduce the time for writing and testing code. They have been well known in traditional software engineering for decades. Nobody writes Java or C# code in a text editor these days. IDEs such as Eclipse speed up development and debugging and reduce the turnaround time for deploying and testing new code. In the world of data science, Jupyter notebooks enable data scientists to code interactively and experiment with data, to ensure the reproducibility of such experiments, and to document and share them easily with colleagues.

Automated machine learning (AutoML) promises to perform data preparation, choose a training algorithm, and set the hyperparameters without human intervention. AutoML treats this process as a high-dimensional optimization problem, which the AutoML algorithm solves autonomously [THH12, TWG19]. It might sound like science fiction, but there are various offerings on the market, such as Google’s AutoML Tables, SAP’s Data Intelligence AutoML, and Microsoft Azure’s Automated ML. They work. However, at least for now, it is still an open question whether and under which circumstances AutoML outperforms, or at least matches, models engineered by experienced data scientists [TWG19]. Nevertheless, AutoML is already a game-changer today. Organizations get results more quickly, and they can start creating AI models without hiring large data science teams. The Pareto principle (80/20 rule) applies to data science projects as well: it is often better to have a reasonably good model quickly and with little effort than to spend months on the perfect model.
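The optimization view of AutoML can be sketched in a few lines of Python. Auto-WEKA [THH12] frames the problem as combined algorithm selection and hyperparameter optimization. The sketch below searches that joint space with plain random search over two toy classifiers. Real AutoML tools use more sophisticated strategies, such as Bayesian optimization, and also automate data preparation. The data set, the two algorithms, and the search ranges are hypothetical choices for illustration.

```python
import random
from typing import Callable, List, Tuple

Data = List[Tuple[float, int]]
Model = Callable[[float], int]


def make_data(n: int, seed: int) -> Data:
    """Toy 1-D data set: label 1 if x > 0.6, else 0 (hypothetical)."""
    rng = random.Random(seed)
    return [(x, int(x > 0.6)) for x in (rng.random() for _ in range(n))]


def threshold_model(train: Data, t: float) -> Model:
    """Fixed-threshold classifier; `t` is its only hyperparameter (train is unused)."""
    return lambda x: int(x > t)


def knn_model(train: Data, k: int) -> Model:
    """k-nearest-neighbor classifier; `k` is its hyperparameter."""
    def predict(x: float) -> int:
        nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
        return int(2 * sum(y for _, y in nearest) > k)  # majority vote
    return predict


# Search space: algorithm name -> (model builder, hyperparameter sampler).
SEARCH_SPACE = {
    "threshold": (threshold_model, lambda rng: rng.uniform(0.0, 1.0)),
    "knn": (knn_model, lambda rng: rng.choice([1, 3, 5, 7])),
}


def accuracy(model: Model, data: Data) -> float:
    return sum(model(x) == y for x, y in data) / len(data)


def random_search(train: Data, valid: Data, n_trials: int = 40, seed: int = 0):
    """Jointly sample an algorithm and its hyperparameter; keep the best on validation data."""
    rng = random.Random(seed)
    best = ("", None, -1.0)
    for _ in range(n_trials):
        name = rng.choice(sorted(SEARCH_SPACE))
        build, sample = SEARCH_SPACE[name]
        param = sample(rng)
        score = accuracy(build(train, param), valid)
        if score > best[2]:
            best = (name, param, score)
    return best


train, valid = make_data(200, seed=1), make_data(100, seed=2)
name, param, score = random_search(train, valid)
print(name, param, round(score, 3))
```

The key design point is that the algorithm choice is itself a search dimension. Each algorithm brings its own hyperparameters, so the search space is conditional rather than a single flat grid.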

A technology stack perspective

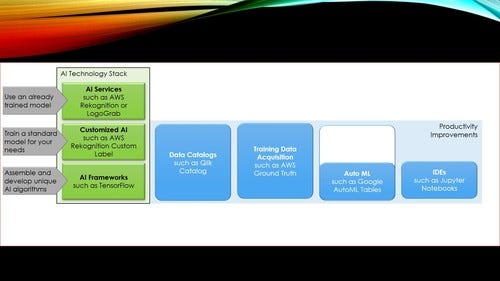

Not every productivity improvement fits every organization and every AI strategy. In one of my previous posts, I introduced a multi-layer AI technology stack. The layer an organization focuses on determines which improvement options help (Figure 3):

When companies rely completely on existing, ready-to-use AI services, they neither train machine learning models nor deal with training data. They have outsourced everything, so nothing is left that can or should be optimized.

Companies relying on customized AI provide training data and delegate the actual training and model creation to external partners. Organizations following this strategy benefit from data catalogs and improved data labeling processes.

Many data scientists and organizations (still) prefer to train the machine learning models by themselves using AI frameworks such as TensorFlow. They benefit from data catalogs, improved data labeling processes, as well as from AutoML and IDEs.
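The mapping from strategy to improvement options is cumulative: each additional layer an organization handles itself adds options. A minimal Python sketch encodes the three cases above as a lookup table. The enum names are hypothetical labels for the three strategies.

```python
from enum import Enum
from typing import Dict, List


class Strategy(Enum):
    READY_TO_USE_SERVICES = "ready-to-use AI services"
    CUSTOMIZED_AI = "customized AI"
    OWN_TRAINING = "own training with AI frameworks"


# Improvement options per strategy, following the three cases described above.
IMPROVEMENT_OPTIONS: Dict[Strategy, List[str]] = {
    Strategy.READY_TO_USE_SERVICES: [],
    Strategy.CUSTOMIZED_AI: ["data catalogs", "data labeling"],
    Strategy.OWN_TRAINING: ["data catalogs", "data labeling", "AutoML", "IDEs"],
}


def options_for(strategy: Strategy) -> List[str]:
    return IMPROVEMENT_OPTIONS[strategy]


for s in Strategy:
    print(f"{s.value}: {', '.join(options_for(s)) or 'nothing to optimize'}")
```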

Figure 5: The big picture of AI innovation: Technology stack, use cases, improvement options

Conclusion

While organizations invest in AI to optimize their business processes, their processes for creating AI should be efficient as well. The more projects a team of data scientists can finish successfully, the more they contribute to transforming the organization.

Common efficiency gains are achieved by improving the acquisition and management of training data and by enabling data scientists to perform core model creation tasks more quickly and with less manual work.

Setting the right priorities and combining the best-suited improvement options is what distinguishes innovative AI managers.

Klaus Haller is a senior IT project manager with in-depth business analysis, solution architecture, and consulting know-how. His experience covers data management, analytics and AI, information security and compliance, and test management. He enjoys applying his analytical skills and technical creativity to deliver solutions for complex projects with high levels of uncertainty. Typically, he manages projects consisting of 5-10 engineers.

Since 2005, Klaus has worked in IT consulting and for IT service providers, often (but not exclusively) in the financial industry in Switzerland.

References

[Cha00] P. Chapman et al.: CRISP-DM 1.0: Step-by-step data mining guide, CRISP-DM consortium, 2000

[THH12] C. Thornton et al.: Auto-WEKA: Combined selection and hyperparameter optimization of classification algorithms, Proceedings of the 19th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD '13), Chicago, Illinois, USA, August 2013

[TWG19] A. Truong et al.: Towards Automated Machine Learning: Evaluation and Comparison of AutoML Approaches and Tools, 2019 IEEE 31st International Conference on Tools with Artificial Intelligence (ICTAI), Portland, OR, USA, November 2019
