A data catalog is the key difference between a data lake and a data swamp

February 4, 2021

The biggest challenge in most AI and analytics projects is the training data.

Which customers are likely to terminate their contracts in the coming weeks? Which screws on the assembly line are defective? Data scientists can answer (nearly) any question if they find the right training data for their machine learning models.

The best place to look for training data is the organization’s data warehouse.

First, it stores a tremendous amount of data. Second, the data there is well-structured and well-documented. Third, data warehouse data is consistent. A field “ordered items” has the same meaning in all tables and is based on the same numbers. Inconsistencies between data from different databases are resolved during data preparation. However, data warehouses have one big disadvantage: they store only a fraction of a company’s data. The reason is simple. Adding data requires a time-consuming analysis and documentation of this data, which is an expensive undertaking. Thus, companies build data lakes.

Adding data to them is inexpensive. The in-depth analysis before adding data is shifted from the project adding data to the projects wanting to use the data. Consequently, data lakes store a tremendous amount of potentially useful data for which the use cases are not clear yet. But how can a project identify relevant data in a data lake? The solution is a data catalog. A data catalog is the key difference between a data lake and a data swamp.

Figure 1: Data warehouse, data lake, and overall company data

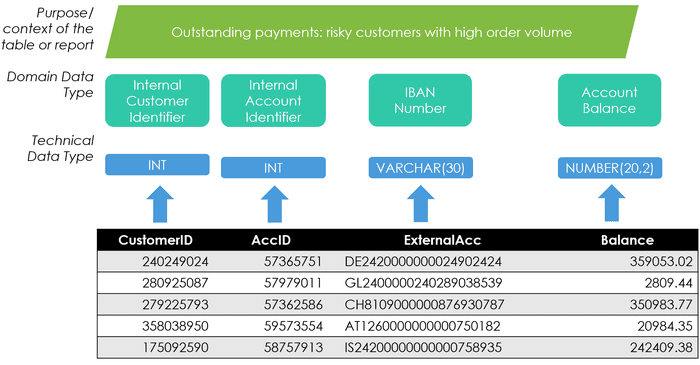

A data catalog helps to find potentially useful data sets among the vast number available in a data lake. Data catalogs typically have two granularity levels (Figure 2). First, they provide information at the table or data set level, such as “sensor data from the power plant from 2019”. This describes what data is stored. It is typically complemented with metadata such as how the data was collected and derived. Second, there is information at the level of the attributes of the tables and data sets. This can be the technical data type (String versus Integer) and, ideally, the domain data type as well, such as whether a string stores employee names or passport IDs.
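The two granularity levels can be pictured as a small data structure. The following is a minimal sketch, not the schema of any particular catalog product; the class names, field names, and the example entry are hypothetical and only illustrate the split between data-set-level and attribute-level information.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AttributeEntry:
    """Attribute-level information (hypothetical schema)."""
    name: str
    technical_type: str                # as reported by the database, e.g. "STRING" or "INT"
    domain_type: Optional[str] = None  # e.g. "employee name" or "passport ID", if known


@dataclass
class DatasetEntry:
    """Table/data-set-level information plus its attributes (hypothetical schema)."""
    name: str
    description: str                                         # what data is stored
    metadata: dict[str, str] = field(default_factory=dict)  # e.g. how it was collected and derived
    attributes: list[AttributeEntry] = field(default_factory=list)

    def attributes_without_domain_type(self) -> list[str]:
        """Attributes for which only the technical data type is known."""
        return [a.name for a in self.attributes if a.domain_type is None]


entry = DatasetEntry(
    name="plant_sensors_2019",  # illustrative name
    description="Sensor data from the power plant from 2019",
    metadata={"collection_method": "illustrative: hourly export from the plant"},
    attributes=[
        AttributeEntry("sensor_id", "STRING", domain_type="sensor ID"),
        AttributeEntry("reading", "FLOAT"),
    ],
)
print(entry.attributes_without_domain_type())  # ['reading']
```

Listing the attributes that only have a technical type is one simple way to see where a catalog still lacks the more useful domain information.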

Figure 2: Understanding Data Catalogs

While data catalogs (and data glossaries defining the most important terms) are integral elements of data warehouses, they are not always available or in good shape for data lakes, since data lakes invest less in documentation. Still, data scientists need data catalogs. This raises the question of how to overcome this dilemma. Are there helpful organizational measures? Are there tools that automate building a data catalog?

Data loss prevention tools such as Symantec DLP or Google Cloud DLP are often promoted for classifying (and cataloging) data. While databases provide only the technical data types of table columns, data loss prevention tools identify domain data types as well. For this, they provide rules based on keywords, patterns, or search lists. IBANs or social security numbers, for example, have specific characteristics. This is sufficient for compliance use cases, where the focus is on whether a table stores certain sensitive information. However, data science is a second, different use case for data classification, as illustrated in Figure 3. Data scientists need tools for training models and access to data, but they also have to identify the data sets and data items for training the model. This is what data scientists use data catalogs for.
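To make the rule types concrete, here is a minimal sketch of pattern- and keyword-based column classification. It does not reflect how Symantec DLP or Google Cloud DLP are actually configured; the rule tables, the 80% match threshold, and the function names are hypothetical. The IBAN rule illustrates what “specific characteristics” can mean: besides its format, an IBAN carries check digits (ISO 13616, mod 97), which filters out strings that merely look like IBANs.

```python
import re
from typing import Optional


def iban_checksum_ok(value: str) -> bool:
    """IBAN check digits (ISO 13616): move the first four characters to the end,
    map letters to numbers (A=10 ... Z=35), and require the result mod 97 == 1."""
    s = value.replace(" ", "").upper()
    rearranged = s[4:] + s[:4]
    digits = "".join(str(int(ch, 36)) for ch in rearranged)
    return int(digits) % 97 == 1


# Hypothetical pattern rules: domain type -> (value pattern, optional validator).
PATTERN_RULES = {
    "IBAN": (re.compile(r"[A-Z]{2}\d{2}[A-Z0-9]{11,30}"), iban_checksum_ok),
    "US social security number": (re.compile(r"\d{3}-\d{2}-\d{4}"), None),
}
# Hypothetical keyword rules applied to column names.
KEYWORD_RULES = {"iban": "IBAN", "ssn": "US social security number"}


def classify_column(column_name: str, samples: list[str], min_share: float = 0.8) -> Optional[str]:
    """Return a domain data type if a pattern rule matches at least min_share of the
    sample values; otherwise fall back to keyword rules on the column name."""
    values = [str(v).replace(" ", "").upper() for v in samples]
    for domain_type, (pattern, validator) in PATTERN_RULES.items():
        hits = sum(
            1 for v in values
            if pattern.fullmatch(v) and (validator is None or validator(v))
        )
        if values and hits / len(values) >= min_share:
            return domain_type
    for keyword, domain_type in KEYWORD_RULES.items():
        if keyword in column_name.lower():
            return domain_type
    return None


print(classify_column("account", ["GB82 WEST 1234 5698 7654 32"]))  # IBAN
print(classify_column("col7", ["123-45-6789", "987-65-4321"]))      # US social security number
print(classify_column("comment", ["hello", "world"]))               # None
```

Rules like these recognize values with a distinctive format. They cannot tell why a value is in a table, which is exactly what data scientists care about.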

The challenge for data scientists is finding “new” training data they do not know yet. This is rarely a widely used attribute such as a customer ID. It is more likely an attribute that stores the license number of cars or the velocity of the river at the turbine entrance. Furthermore, it is often more interesting why a customer is in a data set (or why she has a certain attribute value) than the fact that there is a customer ID.

When a data scientist has to identify the characteristics of risky clients, his first task is to find a list of such risky clients, plus a list of non-risky ones. When he looks, for example, at the table in Figure 2, he sees the value 279225793. Its technical data type is INT. This does not help him yet. When he looks at the attribute name and at some sample values, he can reason that 279225793 is of the domain data type “customer ID”. But only when the data scientist knows that the table stores a list of high payments that have been due for more than three months does he know that the customer IDs in this table are the list of risky clients he is looking for. This last step or insight is the most valuable one, and the most difficult to derive automatically.
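The difference between knowing the domain data type and knowing what a table is about can be shown with a tiny, hypothetical catalog. The table names, descriptions, and the simple substring search below are invented for illustration: searching by domain data type alone returns every table with customer IDs, and only the table-level description singles out the list of risky clients.

```python
# Hypothetical mini-catalog: table -> (table-level description, {column: domain data type})
CATALOG = {
    "crm_contacts": (
        "All customer contact details",
        {"cust_no": "customer ID", "email": "e-mail address"},
    ),
    "overdue_payments": (
        "High payments that have been due for more than three months",
        {"cust_no": "customer ID", "amount": "payment amount"},
    ),
    "web_sessions": (
        "Sessions in the mobile banking portal",
        {"cust_no": "customer ID", "page": "URL"},
    ),
}


def tables_with(domain_type: str) -> list[str]:
    """Attribute-level search: tables with a column of the given domain data type."""
    return sorted(t for t, (_, columns) in CATALOG.items() if domain_type in columns.values())


def tables_with_described(domain_type: str, *terms: str) -> list[str]:
    """Combine attribute- and table-level information: the description must contain all terms."""
    return sorted(
        t for t in tables_with(domain_type)
        if all(term.lower() in CATALOG[t][0].lower() for term in terms)
    )


print(tables_with("customer ID"))
# ['crm_contacts', 'overdue_payments', 'web_sessions']
print(tables_with_described("customer ID", "due", "three months"))
# ['overdue_payments']
```

The second query only works because someone wrote the description; no rule on the values themselves could have produced it.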

Figure 3: The two use cases for data catalogs

In the case of data warehouses, there is funding and time for building and maintaining data catalogs. The situation is more challenging for data lakes. They cannot invest a lot in documentation and analysis. Data lakes must be inexpensive, or there is no business case for them. Crowd-intelligence offers a solution to this challenge.

Crowd-intelligence helps in two ways: for query building and for data (set) annotation. The latter addresses the problem that annotating an existing data lake with hundreds of data sets requires superhuman effort. The solution is a procedural measure, ideally enforced at a technical level: when data scientists add data to the data lake, they must provide a description and additional metadata. Providing such information when adding a data set takes data scientists almost no effort. However, failing to collect the information at this point results in data lakes with hundreds of unannotated data sets, which can only be cleaned up with time-consuming and painful projects.
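Enforcing annotation at the technical level can be as simple as making registration fail when the description or required metadata is missing. The sketch below assumes a hypothetical registry through which all data sets enter the lake; the required fields and the minimum description length are illustrative policy choices, not a standard.

```python
from dataclasses import dataclass, field

MIN_DESCRIPTION_LENGTH = 20                                  # hypothetical policy value
REQUIRED_METADATA = ("owner", "source", "collection_method")  # hypothetical policy


def missing_fields(description: str, metadata: dict) -> list[str]:
    """List what a data set registration still lacks under the policy above."""
    missing = []
    if len(description.strip()) < MIN_DESCRIPTION_LENGTH:
        missing.append("description")
    missing += [key for key in REQUIRED_METADATA if not str(metadata.get(key, "")).strip()]
    return missing


class RegistrationError(ValueError):
    pass


@dataclass
class DataLakeRegistry:
    """Hypothetical registry: the only way to add a data set to the lake."""
    datasets: dict = field(default_factory=dict)

    def add_dataset(self, path: str, description: str, metadata: dict) -> None:
        missing = missing_fields(description, metadata)
        if missing:
            raise RegistrationError(f"cannot register {path}: missing {', '.join(missing)}")
        self.datasets[path] = {"description": description.strip(), **metadata}


registry = DataLakeRegistry()
registry.add_dataset(
    "lake/plant/sensors_2019.parquet",
    "Sensor data from the power plant from 2019",
    {"owner": "plant analytics", "source": "turbine sensors", "collection_method": "hourly export"},
)
try:
    registry.add_dataset("lake/tmp/dump.csv", "misc", {})
except RegistrationError as err:
    print(err)  # cannot register lake/tmp/dump.csv: missing description, owner, source, collection_method
```

The check costs the person adding the data a few seconds, while the rejected case is exactly the kind of undocumented upload that later needs a clean-up project.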

Support in building queries is the second way crowd-intelligence can help. It can mimic the advice of senior experts who help new colleagues. A senior expert directs her younger colleagues to the data sets they need for their work. She knows where to find the data on which bank clients went to which branch and when. She knows where to find the clients who clicked on the details page for mortgage products in the mobile banking portal. Mimicking such behavior can be based on manual annotations of the data sets, on statistics about frequently used data sets and attributes or typical queries submitted in the past, or on a human-based rating of the relevance and quality of data sets. The latter, for example, is implemented in Qlik’s Data Catalog using three maturity levels: bronze for raw data, silver for cleansed and somewhat prepared data for data analysts to work with, and gold for reports directly useful for business users.
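The three signals mentioned above (manual annotations, usage statistics from past queries, and a human maturity rating) can be combined into a simple ranking. This is a minimal sketch under stated assumptions, not Qlik’s algorithm: the scoring formula, the maturity weights, and the example data sets and query log are all hypothetical.

```python
from collections import Counter

# Hypothetical weights for the human maturity rating.
MATURITY_BONUS = {"bronze": 0.0, "silver": 0.5, "gold": 1.0}


def recommend(search_terms, datasets, query_log, top_n=3):
    """Rank data sets by combining three signals:
    - manual annotation: how many search terms occur in the annotation text,
    - usage statistics: how often past queries touched the data set (normalized to 0..1),
    - human rating: a bonus for the maturity level.
    Data sets whose annotation matches no search term are not recommended."""
    usage = Counter(query_log)
    max_usage = max(usage.values(), default=0) or 1
    scored = []
    for name, info in datasets.items():
        text = info["annotation"].lower()
        relevance = sum(term.lower() in text for term in search_terms)
        if relevance == 0:
            continue
        score = relevance + usage[name] / max_usage + MATURITY_BONUS.get(info["maturity"], 0.0)
        scored.append((score, name))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored[:top_n]]


datasets = {  # illustrative catalog entries
    "branch_visits_raw": {"annotation": "Raw log of bank clients visiting branches", "maturity": "bronze"},
    "branch_visits_clean": {"annotation": "Cleansed branch visits per client and date", "maturity": "silver"},
    "mortgage_page_clicks": {
        "annotation": "Clients who opened the mortgage details page in the mobile banking portal",
        "maturity": "silver",
    },
}
# Data sets touched by past queries (illustrative).
query_log = ["branch_visits_clean", "branch_visits_clean", "branch_visits_raw", "mortgage_page_clicks"]

print(recommend(["branch", "visit"], datasets, query_log))
# ['branch_visits_clean', 'branch_visits_raw']
```

Both branch data sets match the search, but the cleansed one ranks first because colleagues query it more often and it carries a higher maturity rating, roughly the advice a senior expert would give.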

Data catalogs are crucial for unlocking the power of data, especially in companies with large data lakes. Combining limited human annotation with the power of analyzing the query history can mimic advice previously only available from senior experts. This is a great way to use machine intelligence to make data scientists more efficient in developing the next generation of machine learning models.

Klaus Haller is a Senior IT Project Manager with in-depth business analysis, solution architecture, and consulting know-how. His experience covers Data Management, Analytics & AI, Information Security and Compliance, and Test Management. He enjoys applying his analytical skills and technical creativity to deliver solutions for complex projects with high levels of uncertainty. Typically, he manages projects consisting of 5-10 engineers.

Since 2005, Klaus has worked in IT consulting and for IT service providers, often (but not exclusively) in the financial industry in Switzerland.

