Cerebras courts the AI market with the largest chip ever built

August 20, 2019

The mighty slab of silicon is ready to take on those puny GPUs

by Max Smolaks 20 August 2019

American startup Cerebras Systems has left stealth with its first ever product, a massive processor for artificial intelligence workloads – and when we say massive, we really mean it.

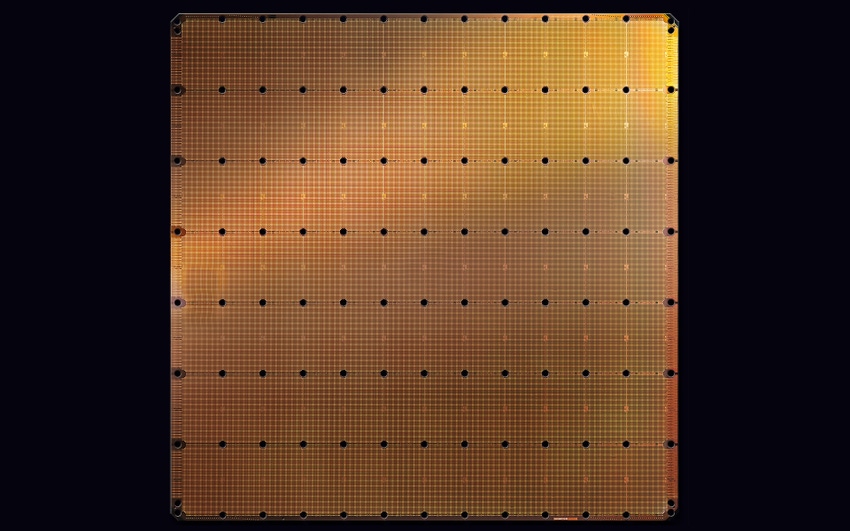

The Wafer Scale Engine (WSE) measures nearly 8.5 by 8.5 inches and features 400,000 cores, all optimized for deep learning, accompanied by a whopping 18GB of on-chip memory.

“Deep learning … has unique, massive, and growing computational requirements. And it is not well-matched by legacy machines like graphics processing units, which were fundamentally designed for other work,” said Dr. Andy Hock, director of product management for Cerebras.

“As a result, AI today is constrained not by applications or ideas, but by the availability of compute. Testing a single new hypothesis – training a new model – can take days, weeks, or even months and cost hundreds of thousands of dollars in compute time. This is a major roadblock to innovation.”

Cerebras was established in 2016 to design hardware accelerators for deep learning, a subset of machine learning based on artificial neural networks. The company is led by Andrew Feldman, who previously served as CEO of SeaMicro and general manager of AMD’s server business.

AI workloads present a perfect application for parallel computing, in which calculations are divided into smaller tasks that are run at the same time, across many cores. The latest Intel Xeon CPUs have up to 64 cores. Nvidia Tesla V100, a top-of-the-line GPU designed specifically for AI, has 640 Tensor cores – modern GPUs for gaming can have up to 3,000 cores, but are unsuitable for deep learning.

Cerebras has managed to squeeze 400,000 cores into a single chip package, which means it should be able to accomplish more demanding tasks, and do this quicker than conventional chips.

The wafer scale approach has another benefit – due to the laws of physics, connections between cores on a single chip are much, much faster than connections between cores across separate chips, even if they are installed in the same system.

Even if a conventional chip or system could match the number of cores on WSE, it would be hard to match the speed at which it can shuffle information between its cores and system memory – something that Cerebras is doing using the proprietary Swarm interconnect.

The startup says its chip contains 3,000 times more on-chip memory and can deliver 10,000 times more memory bandwidth than the industry’s largest GPU.

“Altogether, the WSE takes the fundamental properties of cores, memory, and interconnect to their logical extremes,” Hock said.

The Cerebras software stack has been integrated with popular open source machine learning frameworks like TensorFlow and PyTorch. All we want to see now is a server platform or appliance that can house, power, and most importantly, cool this beast of a processor.

About the Author(s)

You May Also Like

.jpg?width=700&auto=webp&quality=80&disable=upscale)

.jpg?width=700&auto=webp&quality=80&disable=upscale)

.jpg?width=700&auto=webp&quality=80&disable=upscale)

.jpg?width=300&auto=webp&quality=80&disable=upscale)

.jpg?width=300&auto=webp&quality=80&disable=upscale)

.jpg?width=300&auto=webp&quality=80&disable=upscale)

.jpg?width=300&auto=webp&quality=80&disable=upscale)