But there are some big caveats

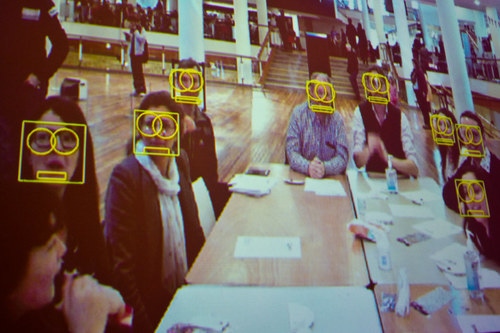

As the US faces a wave of Black Lives Matter protests met with violent police responses, three major tech companies have pledged to limit sales of controversial facial recognition software.

IBM plans to completely back out of developing “general purpose” facial recognition tools, Amazon will not sell its facial recognition tech to police forces for a year, and Microsoft will hold off until regulations are in place.

Protests work

IBM CEO Arvind Krishna said in a letter to Congress that the company "firmly opposes and will not condone uses of any technology, including facial recognition technology offered by other vendors, for mass surveillance, racial profiling, violations of basic human rights and freedoms, or any purpose which is not consistent with our values and Principles of Trust and Transparency."

He added: "Artificial Intelligence is a powerful tool that can help law enforcement keep citizens safe. But vendors and users of AI systems have a shared responsibility to ensure that AI is tested for bias, particularly when used in law enforcement, and that such bias testing is audited and reported."

The company previously built a surveillance system for Davao City in the Philippines. A 2009 Human Rights Watch report accused the city's government, led by then-Mayor Rodrigo Duterte, of aiding death squads that killed street children, drug dealers, and petty criminals.

IBM agreed the deal in 2012 with Duterte’s daughter, Sara, while he technically served as vice mayor due to term limits. He later became President of the Philippines. The Intercept has published an extensive report on IBM's partnership.

IBM did not respond to requests for clarification on what "general purpose IBM facial recognition or analysis software" meant, and whether it will still do custom work for governments and law enforcement.

None without sin

Amazon’s new policy isn’t much clearer. In a brief statement, the company said it was “implementing a one-year moratorium on police use of Amazon’s facial recognition technology.”

But the use of the word “police,” rather than “law enforcement,” leaves open the possibility that Amazon will continue to work with federal agencies, having pitched its Rekognition tool to ICE, the FBI, and others. It is also not clear what will happen to its ongoing police contracts. The company declined to comment.

Amazon added: "We’ve advocated that governments should put in place stronger regulations to govern the ethical use of facial recognition technology, and in recent days, Congress appears ready to take on this challenge. We hope this one-year moratorium might give Congress enough time to implement appropriate rules, and we stand ready to help if requested."

Amazon is known to have lobbied against facial recognition regulation. Shareholders also voted down an employee-backed proposal to limit the sales of Rekognition. When researchers found that the tool, along with numerous others, was subject to racial bias and accuracy issues, the company allegedly attempted to dispute and minimize their work.

"At the same time Amazon tried to discredit us & our results, they were literally investing *millions* internally on ‘fixing’ what they would continue to publicly deny was even a problem... effectively corporate-level gaslighting," researcher Deborah Raji said.

Her MIT Media Lab report highlighted the biased performance results of commercial AI products, but was dubbed “misleading” by Amazon at the time.

“I was very shook by the entire experience & have been personally logging a lot of these actions in utter disbelief of the duplicity in their actions,” Raji said on Twitter. “Honestly so grateful for the research community that stood by us & other companies that took the time to replicate our results.”

The ACLU published a different report in 2018, suggesting that Amazon’s Rekognition facial recognition software was racially biased. The group used the AWS tool to compare photos of members of Congress against a database of publicly available mug shots, and the tool falsely matched 28 of them. "Nearly 40 percent of Rekognition’s false matches in our test were of people of color, even though they make up only 20 percent of Congress," ACLU attorney Jacob Snow said.

Amazon also cooperates with more than 1,300 police departments through its Ring smart doorbell, with officers able to ask for Ring video footage during investigations.

A Ring spokesperson did not respond to questions regarding the division’s ongoing police collaboration, and whether it would continue to work with local forces.

Microsoft, meanwhile, will also stop selling facial recognition software to police departments, company president and chief legal officer Brad Smith said. He told The Washington Post Live: "We have been focused on this issue for two years... we do not sell facial recognition technology to police departments in the US today."

He added that the company will not offer its technology until there is a national law "grounded in human rights that will govern this technology."

Google has not sold its facial recognition technology to law enforcement since 2018.

But the major tech companies pulling out of this market is not enough, Smith warned. “If all of the responsible companies in the country cede this market to those that are not prepared to take a stand, we won't necessarily serve the national interest, or the lives of the black and African American people of this nation well,” he said. “We need Congress to act, not just tech companies alone.”

In a statement, controversial surveillance specialist Clearview AI said that while major tech companies have "decided to exit the marketplace," it would double down. It claimed other AI systems were biased but that it had solved racial bias, a claim that has not been independently verified.

The company is facing a lawsuit by the ACLU, has been called a potential danger to the First Amendment by Senator Edward Markey, and, according to an EU privacy body, its services may be illegal in Europe.

A number of other facial recognition companies exist, including Banjo. Earlier this year, a OneZero report revealed that its CEO, Damien Patton, had been involved in a drive-by shooting of a synagogue carried out by a KKK leader. Patton resigned, but the company still provides surveillance services to police forces.
