And nearly as many times more expensive

The Department of Energy’s National Energy Technology Laboratory partnered with Cerebras Systems to test the chip designer's huge Wafer Scale Engine processor.

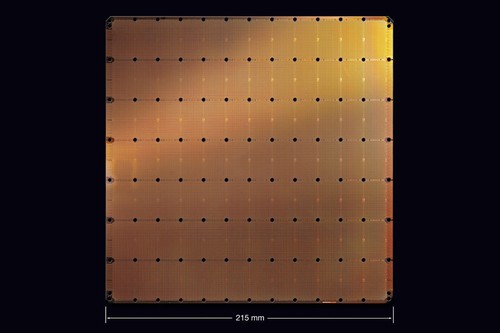

The WSE is the largest chip ever built, with 1.2 trillion transistors and 400,000 AI-optimized cores in a package measuring eight by nine inches. Sold with accouterments in a 15U rack-mounted device known as the CS-1, each one costs around $2m.

Using the whole wafer

“NETL researchers and the Cerebras team collaborated to extend the frontier of what is possible,” Dr. Brian J. Anderson, Lab Director at NETL, said. “This work at the intersection of supercomputing and AI will extend our understanding of fundamental scientific phenomena, including combustion and how to make combustion more efficient. Together, NETL and Cerebras are using innovative new computer architectures to further the NETL Mission and benefit the United States.”

In a test involving a key workload in computational fluid dynamics, the CS-1 was pitted against the Joule supercomputer, the 82nd fastest supercomputer in the world. While NETL's Joule contains 84,000 CPU cores, only 16,384 were used, as that was the largest number of cores Joule could usefully allocate to a problem of this size; additional cores would not have sped up the calculation.

Compared with those 16,384 Intel Xeon Gold 6148 cores, the CS-1 was 200 times faster. It was also 10,000 times faster than a GPU.

“Cerebras is proud to extend its work with NETL and produce extraordinary results on one of the foundational workloads in scientific compute,” Andrew Feldman, co-founder and CEO at Cerebras, said. “Because of the radical memory and communication acceleration that wafer scale integration creates, we have been able to go far beyond what is possible in a discrete, single chip processor, be it a CPU or a GPU.”

In a research paper published this week, Cerebras and NETL examined the promise of wafer-scale processors.

Placing memory on the same silicon wafer as the processing cores allows the chip to provide orders of magnitude more memory bandwidth, single-cycle memory latency, and a lower energy cost per memory access.

The WSE has 18GB of on-wafer static random-access memory (SRAM), made up of 380,000 local memories of 48KB each. These are linked by an on-wafer network whose injection bandwidth is one fourth of the peak floating-point compute bandwidth and whose message latency is on the order of nanoseconds per hop.
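The 18GB total follows directly from the per-core figures. The Python below is a minimal sketch of that arithmetic, assuming "KB" means 1,024 bytes (the article does not say; reading it as 1,000 bytes changes the total by only about 2%). It also shows that reading the figure as 48 kilobits would give only about 2.3GB, so kilobytes is the reading consistent with 18GB.

```python
# Back-of-the-envelope check of the WSE's on-wafer SRAM capacity.
# Figures from the article: 380,000 local memories of 48KB each.
# Assumption: "KB" is read as 1,024 bytes.

NUM_LOCAL_MEMORIES = 380_000
BYTES_PER_LOCAL_MEMORY = 48 * 1024  # 48KB per core-local memory


def total_sram_bytes(count: int, bytes_each: int) -> int:
    """Total on-wafer SRAM in bytes."""
    return count * bytes_each


total = total_sram_bytes(NUM_LOCAL_MEMORIES, BYTES_PER_LOCAL_MEMORY)
print(f"{total / 1e9:.1f} GB (decimal)")    # 18.7 GB
print(f"{total / 2**30:.1f} GiB (binary)")  # 17.4 GiB

# For comparison: if each local memory were 48 kilobits (48 * 1024 / 8 bytes).
kilobit_reading = total_sram_bytes(NUM_LOCAL_MEMORIES, 48 * 1024 // 8)
print(f"{kilobit_reading / 1e9:.1f} GB if read as kilobits")  # 2.3 GB
```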

"Thus, on the CS-1, memory bandwidth matches the peak compute rate, and communication bandwidth is only slightly lower," the paper states. This contrasts with conventional CPU and GPU systems, where network latencies keep getting worse and become a performance bottleneck.
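One way to see why matching memory bandwidth to compute matters is a roofline-style model, in which a kernel runs at the lower of peak compute and bandwidth multiplied by arithmetic intensity (flops performed per word moved). The Python below is a generic sketch of that model, not the paper's own analysis. The 1.0 ratio is a loose reading of "memory bandwidth matches the peak compute rate"; the CPU-like ratio and the kernel intensity are hypothetical values chosen only to illustrate the effect.

```python
from dataclasses import dataclass


@dataclass
class Machine:
    name: str
    # Deliverable memory bandwidth in words per cycle, relative to peak
    # flops per cycle (1.0 means one word can be delivered for every flop).
    words_per_flop: float


def attainable_fraction(machine: Machine, flops_per_word: float) -> float:
    """Roofline model: fraction of peak compute a kernel can sustain.

    Throughput is capped by peak compute (normalized to 1.0) or by
    bandwidth x arithmetic intensity, whichever is lower.
    """
    return min(1.0, machine.words_per_flop * flops_per_word)


# Assumed ratios: 1.0 models "memory bandwidth matches the peak compute
# rate"; the CPU-like ratio is a hypothetical value, not a measurement.
machines = [
    Machine("CS-1 on-wafer memory (modeled)", 1.0),
    Machine("CPU-like node (hypothetical)", 0.05),
]

KERNEL_INTENSITY = 1.0  # hypothetical flops per word for a memory-bound kernel

for m in machines:
    share = attainable_fraction(m, KERNEL_INTENSITY)
    print(f"{m.name}: {share:.0%} of peak")
```

With these illustrative values the same kernel reaches full peak on the high-bandwidth machine and only a small fraction of peak on the low-bandwidth one; that gap, not the specific numbers, is the point.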

"We have shown here that the approach of keeping all the processing, data, and communication on one silicon wafer can, when a problem fits on the wafer, eliminate memory bandwidth and greatly reduce communication as performance limits, yielding dramatic speedup and allowing accurate real-time simulation."

The researchers said that the CS-1 was the "first ever system capable of faster-than real-time simulation of millions of cells in realistic fluid-dynamics model."

But they also noted that the machine has limitations, particularly its small memory capacity, and that it is not the best approach for every workload.

"Our goal is not to tout the CS-1 specifically; rather we want to illuminate the benefits of the wafer-scale approach," the authors said, adding that the CS-1 is "the first of its kind" and that they expect memory capacity to improve: moving from a 16nm to a 7nm process would provide around 40GB of SRAM, and 5nm around 50GB.
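Because a problem only gets the full benefit when it fits on the wafer, SRAM capacity sets an upper bound on mesh size. The Python below sketches that bound for the 18GB, 40GB, and 50GB figures quoted above. The number of stored fields per cell and the share of SRAM left for mesh data are hypothetical parameters, not values from the paper; only the linear scaling with capacity is the point.

```python
# Rough capacity model: how many mesh cells fit in on-wafer SRAM?
# Capacities (18, 40, 50 GB) are the figures quoted in the article.
# Assumptions (hypothetical, for illustration only): each cell stores
# FIELDS_PER_CELL single-precision (4-byte) values, and only a fraction
# USABLE_FRACTION of SRAM is available for mesh data (the rest holds code,
# buffers, and so on).

FIELDS_PER_CELL = 8      # hypothetical number of stored fields per cell
BYTES_PER_FIELD = 4      # single-precision float
USABLE_FRACTION = 0.5    # hypothetical share of SRAM left for mesh data

SRAM_GB = {"16nm (CS-1)": 18, "7nm (projected)": 40, "5nm (projected)": 50}


def max_cells(sram_gb: float,
              fields: int = FIELDS_PER_CELL,
              bytes_per_field: int = BYTES_PER_FIELD,
              usable: float = USABLE_FRACTION) -> int:
    """Upper bound on mesh cells that fit entirely in on-wafer memory."""
    usable_bytes = sram_gb * 1e9 * usable
    return int(usable_bytes // (fields * bytes_per_field))


for node, gb in SRAM_GB.items():
    print(f"{node}: ~{max_cells(gb) / 1e6:,.0f} million cells")
```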

"These changes will help, and while they don’t produce petabyte capacities, there are new directions that the hardware can take, involving systems that go beyond a single wafer and a single wafer type, that can add much more."

Others are showing interest in the technology: TSMC, which manufactures the WSE, has said it wants to improve its wafer-scale production processes so that it can commercialize the idea. Cerebras sold two more CS-1 systems to the Pittsburgh Supercomputing Center this June for its $5m Neocortex AI supercomputer.
