An early example of a feature clashing with the company’s ethical AI principles

February 25, 2021

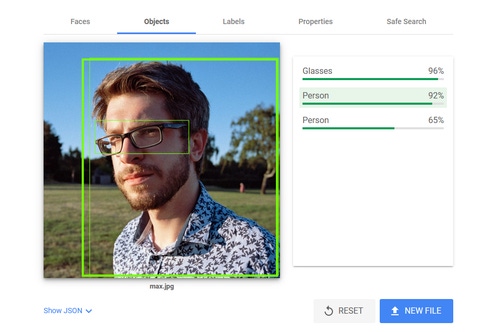

Google’s widely-used Cloud Vision API, which provides machine vision capabilities to developers, will no longer attach gendered labels like ‘man’ or ‘woman’ as image tags.

In an email to developers last week, Google said it will instead tag images of people with ‘non-gendered’ labels such as ‘person,’ citing the company’s internal guidelines on AI ethics.

“Given that a person’s gender cannot be inferred by appearance, we have decided to remove these labels in order to align with the Artificial Intelligence Principles at Google, specifically Principle #2: Avoid creating or reinforcing unfair bias,” the email said.

The Cloud Vision tool can be used to automatically detect faces, landmarks, brand logos, and even explicit content, and is deployed in applications from visual search to conservation. A report by Business Insider confirmed that the gendered labels have been removed from the API.

Assumption is the mother of all screw-ups

Google invited developers to comment on the change in its discussion forums. Only one developer complained, writing: “I don’t think political correctness has room in APIs. If I can 99% of the time identify if someone is a man or woman, then so can the algorithm. You don’t want to do it? Companies will go to other services.”

AI-based image recognition has been a long-standing ethical issue for Google, ever since a software developer found in 2015 that Google’s Photos app was mis-tagging Black people as ‘gorillas.’ This is just one of a range of ethics questions facing the firm; indeed, the company’s AI ethics principles were adopted in 2018 only after employees protested plans to provide the Pentagon with machine vision tools for drone strikes.

Since then, Google has come under repeated fire for alleged missteps, among them the ousting of its ethical AI team co-lead (and founder of Black In AI) Timnit Gebru over a research paper outlining the ethical challenges posed by very large natural language AI models.

With little in the way of external accountability, it remains to be seen whether Google’s ethical principles amount to more than just lip service.
