What kind of abuse do you want a child to scream at you?

Intel is planning to use AI to monitor online video game communications and censor hate speech.

'Bleep' will allow gamers to choose how many insults they want to hear, with a series of sliders for different forms of prejudice and bigotry. The system will filter out such content for the end-user, rather than the entire chat.

Computer, reduce sexism by 33%

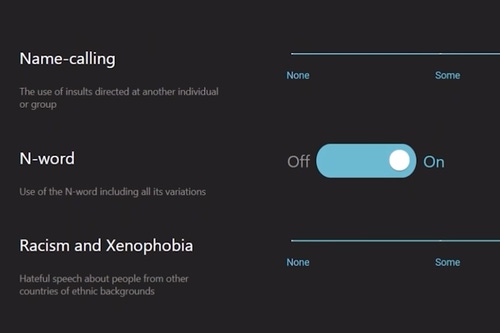

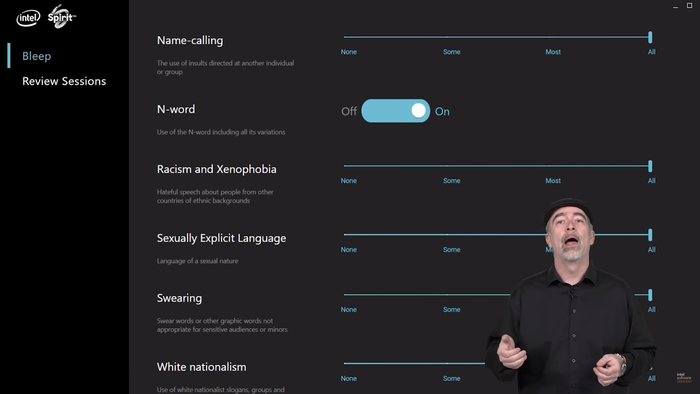

Users will be able to choose whether to bleep 'ableism and body shaming,' 'aggression,' 'LGBTQ+ hate,' 'misogyny,' 'name-calling,' 'white nationalism,' 'racism and xenophobia,' 'swearing,' and 'sexually explicit language.'

They will be able to set how much of each hate speech category is filtered, choosing from none, some, most, or all. The n-word gets a simple on/off toggle that filters out the word and 'its variations.'

A screenshot from Intel’s session at the Game Developers Conference (GDC) 2021

Bleep is being developed in collaboration with natural language processing company Spirit AI.

“While we recognize that solutions like Bleep don’t erase the problem, we believe it’s a step in the right direction, giving gamers a tool to control their experience,” Intel’s Roger Chandler said during a GDC demonstration.

Major online video game chats are well known for toxicity, aggression, and a propensity for death threats.

"Toxic behavior, also known as griefing or toxic disinhibition, happens when players break co-existence rules, acting in antisocial ways. It brings forth anger or frustration on other players, leading to a bad game experience. Inside the game, toxic behavior can create a spiral of insults and blame that can destroy the communication within a team," a 2018 study into game community toxicity found.

Actually tackling that problem is harder than just banning or filtering words, game studios have found. Players can be endlessly creative in their slurs and insults, and sometimes react more extremely in the face of censorship. Efforts to filter out terms can turn into an endless cat and mouse game that removes more and more words from use.

It can also be difficult for automated systems to distinguish between truly toxic commentary and something that’s part of the gameplay experience. “Some bad words are also used by typical players as well – This behavior is prevalent in ‘trash talk’ culture, and an important factor in immersing players in a competitive game,” a 2014 linguistic analysis of toxic behavior in League of Legends found.

Thus, the researchers warned, any warning or censorship system "must be effective in detecting toxic playing while being flexible enough to allow for trash talk to avoid breaking the immersive gaming experience."
