The latest version can reportedly run BERT-Large in 1.2 milliseconds

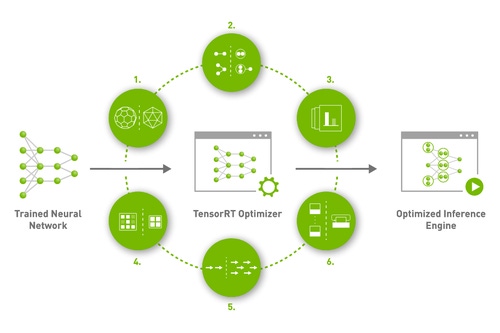

Nvidia has launched TensorRT 8 – the eighth generation of its AI software development kit.

The tech giant claims the new release cuts inference time for language queries in half.

It adds that developers can now make their models up to three times larger without a performance penalty.

“The latest version of TensorRT introduces new capabilities that enable companies to deliver conversational AI applications to their customers with a level of quality and responsiveness that was never before possible,” Greg Estes, Nvidia’s VP of developer programs, said.

Sparsity and quantization-aware training

Nvidia said that TensorRT software has been downloaded nearly 2.5 million times over the past year, and has been used by more than 350,000 developers.

TensorRT is built on CUDA, the company's long-established parallel programming model.

Nvidia claims that apps using TensorRT on its hardware run inference up to 40 times faster than on CPU-only systems. It adds that TensorRT 8 allows BERT-Large, one of the most popular Transformer-based models, to run in 1.2 milliseconds.

The latest iteration of the dev kit adds two new features: sparsity and quantization-aware training.

Sparsity is a performance technique supported by Nvidia's Ampere-architecture GPUs. The company said it lets developers accelerate neural networks by reducing the number of computational operations.
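Nvidia does not spell out the mechanism here. As background, Ampere GPUs accelerate a fine-grained structured pattern known as 2:4 sparsity, in which at most two of every four consecutive weights are nonzero. The Python sketch below illustrates that pattern with simple magnitude pruning. The helper names `prune_2_of_4` and `count_multiplies` are hypothetical; this is not TensorRT's API or Nvidia's pruning workflow, and a real workflow would usually retrain the network after pruning to recover accuracy.

```python
from typing import List


def prune_2_of_4(weights: List[float]) -> List[float]:
    """Keep the two largest-magnitude weights in each group of four; zero the rest.

    Illustrates the 2:4 structured-sparsity pattern only (hypothetical helper,
    not a TensorRT or Nvidia API).
    """
    if len(weights) % 4 != 0:
        raise ValueError("weight count must be a multiple of 4")
    pruned: List[float] = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two entries with the largest absolute value
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


def count_multiplies(weights: List[float]) -> int:
    # A sparsity-aware kernel only needs to multiply the nonzero weights
    return sum(1 for w in weights if w != 0.0)


dense = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]
sparse = prune_2_of_4(dense)
print(sparse)  # [0.9, 0.0, 0.0, -0.7, 0.0, 0.3, -0.4, 0.0]
print(count_multiplies(dense), "->", count_multiplies(sparse))  # 8 -> 4
```

Half the weights in each group become zero, so hardware that exploits the fixed pattern can skip roughly half of the multiply-accumulate work for that layer, which is the kind of reduction in computational operations the company describes.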

Meanwhile, quantization-aware training lets developers run inference on trained models in INT8 precision without losing accuracy, reducing compute and storage overhead for inference on Tensor Cores.
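To make the INT8 idea concrete, here is a minimal sketch of symmetric per-tensor INT8 quantization and the "fake quantization" (quantize, then dequantize) step that quantization-aware training typically inserts into the forward pass, so the model learns weights that tolerate rounding error. The helper names and the max-abs scaling choice are assumptions for illustration, not TensorRT's API.

```python
from typing import List


def int8_scale(values: List[float]) -> float:
    """Symmetric per-tensor scale that maps the largest |value| onto 127."""
    max_abs = max(abs(v) for v in values)
    return max_abs / 127.0 if max_abs > 0 else 1.0


def quantize(values: List[float], scale: float) -> List[int]:
    # Divide by the scale, round (Python rounds halves to even), clamp to the int8 range
    return [max(-128, min(127, round(v / scale))) for v in values]


def dequantize(q: List[int], scale: float) -> List[float]:
    # Map int8 codes back to approximate float values
    return [x * scale for x in q]


def fake_quantize(values: List[float]) -> List[float]:
    """Quantize then dequantize, as a QAT forward pass would.

    The output stays in floating point but carries INT8 rounding error,
    so training can adapt the weights to it.
    """
    scale = int8_scale(values)
    return dequantize(quantize(values, scale), scale)


weights = [0.5, -1.27, 0.0, 1.27]
scale = int8_scale(weights)
print(quantize(weights, scale))  # [50, -127, 0, 127]
print(fake_quantize(weights))
```

Each INT8 value occupies one byte versus four for FP32, which is where the storage savings come from. In actual QAT, gradients are usually passed through the rounding step with a straight-through estimator; that part is omitted from this sketch.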

TensorRT 8 is generally available and free of charge to members of the Nvidia Developer program. The latest versions of plug-ins, parsers, and samples are also available as open source from the TensorRT GitHub repository.

This is the latest in a long line of recent Nvidia announcements.

In early July, the company’s CEO virtually unveiled Cambridge-1, the UK’s most powerful supercomputer, which is based on Nvidia hardware.

In late June, Nvidia extended its Aerial framework for GPU-accelerated 5G virtual radio access networks (vRAN) to support Arm-based CPUs.

Also in June, it showed off Vid2Vid Cameo – an AI model capable of creating realistic videos of a person from a single photo – and Fleet Command, a remote management platform for businesses to monitor and manage AI edge applications.
