Students preferred the constructive feedback provided by the AI system, researchers found

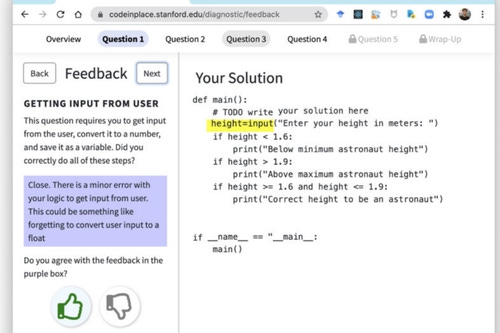

Computer scientists from Stanford University have created an AI-based education tool that can provide feedback on assignments as part of an introductory computer science course.

The system was tested as part of the university’s Code in Place course, which taught programming to 10,000 students from around the world.

The project team included Chris Piech, assistant professor of computer science, Chelsea Finn, assistant professor of computer science and electrical engineering, and Ph.D. students Mike Wu and Alan Cheng.

The team found that students agreed with the feedback from the AI system more often than with the feedback left by human instructors.

“The students rated the AI feedback a little bit more positively than human feedback, despite the fact that they’re both as constructive and that they’re both identifying the same number of errors. It’s just when the AI gave constructive feedback, it tended to be more accurate,” professor Finn said.

“This task is really hard for machine learning because you don’t have a ton of data. Assignments are changing all the time, and they’re open-ended, so we can’t just apply standard machine learning techniques,” Piech added.

What’s the answer to number four?

Education was forced online due to the pandemic. Globally, over 1.2 billion children were out of the classroom between 2020 and 2021, according to the World Economic Forum.

Many schools and universities scrambled for solutions to adapt to online learning, with rapid deployments of language apps, virtual tutoring, video conferencing tools, and online learning software.

The team at Stanford sought to develop its AI tool to aid assignment marking tasks, which it said required large-scale and high-quality feedback.

Researchers opted to use meta-learning, an approach in which a machine learning system learns from many different problems so that it can handle each one with relatively small amounts of data.

“With a traditional machine learning tool for feedback, if an exam changed, you’d have to retrain it, but for meta-learning, the goal is to be able to do it for unseen problems, so you can generalize it to new exams and assignments as well,” Wu said.
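To make the idea concrete, here is a minimal sketch of prototype-style few-shot classification, the family of techniques the researchers name later in the piece. It is an illustration under stated assumptions, not the Stanford team's implementation: the two-dimensional "embeddings", the feedback labels, and the helper names `class_prototypes` and `classify` are all hypothetical, and a real system would obtain embeddings from a trained model of student code.

```python
from math import dist

def class_prototypes(support):
    """Average the embeddings of each label's few labeled examples."""
    prototypes = {}
    for label, vectors in support.items():
        dims = len(vectors[0])
        prototypes[label] = [
            sum(v[i] for v in vectors) / len(vectors) for i in range(dims)
        ]
    return prototypes

def classify(embedding, prototypes):
    """Return the label whose prototype is nearest to the embedding."""
    return min(prototypes, key=lambda label: dist(embedding, prototypes[label]))

# Hypothetical: a handful of instructor-labeled submissions for a NEW
# assignment, already mapped to made-up 2-D embeddings.
support = {
    "off_by_one_loop": [[0.9, 0.1], [0.8, 0.2]],
    "missing_base_case": [[0.1, 0.9], [0.2, 0.7]],
}

prototypes = class_prototypes(support)
predicted = classify([0.85, 0.25], prototypes)  # an unlabeled submission
print(predicted)
```

Because a new assignment only requires a few labeled examples to build fresh prototypes, nothing in this sketch has to be retrained when the questions change, which is the property Wu describes.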

Using data from previous iterations of Stanford computer science courses, the tool's developers were able to achieve accuracy at or above the human level on 15,000 student submissions.

The team found that, of the 1,096 students surveyed, those who received AI feedback agreed with the system’s marking 97.9 percent of the time, compared with 96.7 percent for feedback from human instructors.

“The improvement was driven by higher student ratings on constructive feedback – times when the algorithm suggested an improvement,” the study into the system’s deployment reads.

The researchers added that they found no statistically significant difference across students segmented by gender or country of origin, despite the wide diversity of students that took part.

“To the best of our knowledge, this was both the first successful deployment of AI-driven feedback to open-ended student work and the first successful deployment of prototype networks in a live application,” the researchers said.

“With promising results in both a research and a real-world setting, we are optimistic about the future of artificial intelligence in code education and beyond.”

All aboard the edtech train

Several edtech firms have popped up of late with innovative tools and systems.

Riiid, a Korean ‘test-prep’ startup, developed santA.Inside, an AI-based tutoring engine originally built to help students improve their scores in the Test of English for International Communication (TOEIC), a popular English language exam in Asia.

The edtech startup secured a $175 million investment from SoftBank Vision Fund 2 back in June, bringing its total funding to $250 million.

Dr. Michelle Zhou and Dr. Huahai Yang, the inventors of IBM Watson Personality Insights, have also joined the edtech expansion, creating ‘cognitive AI assistants’ designed to help universities deal with requests from their students.

Their firm, Juji, claims its AI assistants “engage users in a more human-like interaction by actively listening to the users and automatically inferring their unique needs and wants.”

And a Luxembourg-based company launched a robot to help autistic children learn and practice social, emotional, and cognitive skills. LuxAI’s QTrobot for Home can emote via a built-in screen and 'speaks softly' to create an accessible and effective play-based setup for special needs education at home.
