August 2, 2021

A modular system for testing how robots interact with the real and simulated world

Social media and advertising giant Facebook has released a new platform for building robots that leverage natural language processing and computer vision.

'Droidlet' is an open source platform for testing different computer vision and natural language processing models, available immediately on GitHub.

The system is designed to simplify robotics development and allow rapid prototyping, the company claimed.

Making it easier to test autonomy

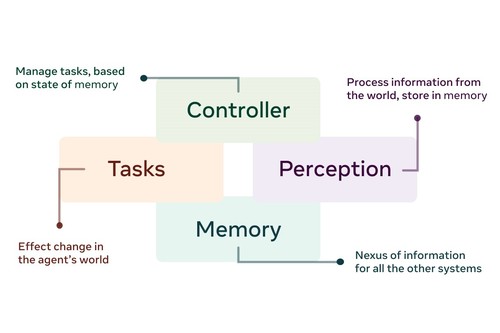

"The droidlet agent is designed to be modular, allowing swapping out components (which can be learned or heuristic) and extending the agent by adding new components," Facebook said in a research paper.

"Our goal is to build an ecosystem of language, action, memory, and perceptual models and interfaces."

This architecture lets researchers use the same intelligent agent on different robotic hardware, swapping out the perceptual modules and tasks to suit each robot's physical architecture and sensor requirements.
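To make the swappable-component idea concrete, here is a minimal Python sketch of an agent whose core stays fixed while its perception modules are exchanged per robot or simulator. It is an illustration under stated assumptions, not droidlet's code: every class and method name below (`PerceptionModule`, `RGBObjectDetector`, `BlockWorldReader`, `Agent`, `swap`) is hypothetical and does not come from droidlet's actual API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Protocol


class PerceptionModule(Protocol):
    """Hypothetical interface: turn a raw observation into facts for memory."""

    def perceive(self, observation: dict) -> List[str]:
        ...


class RGBObjectDetector:
    """Hypothetical module for a physical robot with an RGB camera."""

    def perceive(self, observation: dict) -> List[str]:
        return [f"saw {obj}" for obj in observation.get("rgb_objects", [])]


class BlockWorldReader:
    """Hypothetical module for a Minecraft-style simulator that exposes blocks directly."""

    def perceive(self, observation: dict) -> List[str]:
        return [f"block at {pos}" for pos in observation.get("blocks", [])]


@dataclass
class Agent:
    """Same agent core for every embodiment; only the plugged-in modules change."""

    perception: Dict[str, PerceptionModule]
    memory: List[str] = field(default_factory=list)

    def step(self, observation: dict) -> None:
        # Run every registered perception module and store what it reports.
        for module in self.perception.values():
            self.memory.extend(module.perceive(observation))

    def swap(self, name: str, module: PerceptionModule) -> None:
        # Replace (or add) one component without touching the rest of the agent.
        self.perception[name] = module


if __name__ == "__main__":
    agent = Agent(perception={"vision": RGBObjectDetector()})
    agent.step({"rgb_objects": ["blue tube", "fuzzy chair"]})
    agent.swap("vision", BlockWorldReader())  # move the same agent into a simulator
    agent.step({"blocks": [(0, 64, 0)]})
    print(agent.memory)
```

The design choice being illustrated is that the agent only depends on a small interface (`perceive`), so moving from real hardware to a simulator means registering a different module under the same name rather than rewriting the agent.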

Droidlet lets researchers test different computer vision or NLP algorithms on their robot and then run them either in the real world or in simulated environments such as Minecraft or Habitat (Facebook’s AI simulation platform, not the 1987 LucasArts classic), the company said.

"The goal of our platform is to build agents that learn continuously from the real world interactions," the research paper stated.

"We hope the droidlet platform opens up new avenues of research in self-supervised learning, multi-modal learning, interactive learning, human-robot interaction, and lifelong learning."

In an accompanying blog post, Facebook admitted that the platform was still in its early days, and that the field still had a long way to go before it could come close to what we are accustomed to seeing in science fiction. “But with droidlet, robotics researchers can now take advantage of the significant recent progress across the field of AI and build machines that can effectively respond to complex spoken commands like ‘pick up the blue tube next to the fuzzy chair that Bob is sitting in.’”

Facebook has become increasingly invested in robotics software development. In July, it teamed up with Carnegie Mellon University and UC Berkeley to teach a Unitree robot how to adjust to different environments in real time.

The company has also built a fleet of robots to patrol its data centers, and is secretly working on autonomous systems to take over aspects of the facilities' operations.

Last week, rival Alphabet also made a move into the robotic software space. The company launched Intrinsic, a new business aimed at making industrial robots easier and more affordable to use.

Intrinsic comes after Alphabet aggressively tried to dominate the robotics industry with a series of huge acquisitions, notably Boston Dynamics. But the venture failed, and most of the companies were sold off, with Boston Dynamics now in the hands of Hyundai.
