Promise to release the design, relevant documentation, code, and base models

AI researchers at Meta, the newly re-branded holding company previously known as Facebook, have created ‘synthetic skin’ that allows robots to feel what they touch.

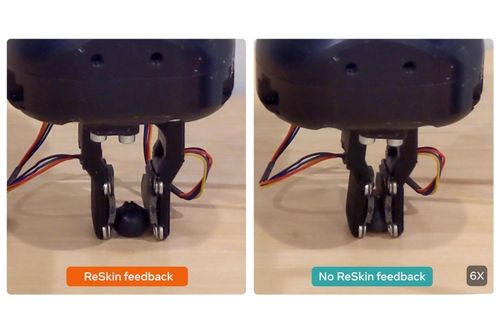

Dubbed ReSkin, the open source system relies on magnetic sensing and self-supervised learning algorithms to automatically calibrate sensors and create generalizable data that can be shared with similar systems.

According to a company blog post, ReSkin will give users access to data that helps improve touch-based tasks, including object classification, proprioception (the ability to sense movement, action, and location), and robotic grasping.

“AI models trained with learned tactile sensing skills will be capable of many types of tasks, including those that require higher sensitivity, such as working in health care settings, or greater dexterity, such as maneuvering small, soft, or sensitive objects,” the team said.

Touch the Meta

Facebook AI Research, or FAIR, was not spared, it seems, from the Zuckerberg-led re-brand of its parent company. While its website still displays the Facebook AI header, the blog post specifically refers to the research team as “Meta AI.”

Last month, Facebook’s business chiefs opted to follow Alphabet’s lead and re-brand the holding company, attempting to pivot its focus to VR and AR.

The announcement was widely ridiculed and came after a string of PR disasters, with whistleblowers accusing the company of knowingly hosting hate speech and illegal activities.

Away from Facebook’s publicity troubles, its AI research team has promised to release the design, relevant documentation, code, and base models of ReSkin.

By publishing it openly, the team hopes to “help AI researchers use ReSkin without having to collect or train their own data sets.”

“That, in turn, should help advance AI's tactile sensing skills quickly and at scale,” Meta AI suggested.

The ‘synthetic skin’ can be integrated with other sensors to collect visual, sound, and touch data outside the lab and in unstructured environments.

The skin itself is inexpensive to produce, with the researchers suggesting that it costs less than $6 per unit in batches of 100 and “even less at larger quantities.”

It’s 2-3mm thick and has a temporal resolution of up to 400Hz, along with a spatial resolution of 1mm at 90 percent accuracy.
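To put the 400Hz figure in perspective, here is a minimal sketch of the arithmetic. The 400Hz rate is the upper bound quoted above; the 50-millisecond contact duration and the function names are illustrative assumptions and are not taken from ReSkin's published code.

```python
def sample_interval_ms(rate_hz: float) -> float:
    """Time between consecutive readings, in milliseconds."""
    return 1000.0 / rate_hz


def samples_during(contact_ms: float, rate_hz: float) -> int:
    """Number of whole readings captured during a contact of the given length."""
    return int(contact_ms * rate_hz / 1000.0)


RATE_HZ = 400.0    # upper bound quoted for ReSkin
CONTACT_MS = 50.0  # hypothetical brief touch, chosen only for illustration

print(sample_interval_ms(RATE_HZ))          # milliseconds between readings
print(samples_during(CONTACT_MS, RATE_HZ))  # readings captured during the touch
```

At the quoted rate, a reading arrives every 2.5 milliseconds, so even a 50-millisecond touch would produce around 20 readings.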

“These specifications make it ideal for form factors as varied as robot hands, tactile gloves, arm sleeves, and even dog shoes, all of which can help researchers collect tactile data for new AI models that would previously have been difficult or impossible to gather,” the team said.

Meta’s move to make this project open source comes after its researchers released an open source robotics development platform, dubbed Droidlet.

The platform is designed for testing different computer vision and natural language processing models. It is available on GitHub.
