Multisearch is one of Google’s biggest search updates.

Google has unveiled a new tool allowing users to search using images and text at the same time.

Want to find a dress you saw online, but in red? Screenshot the dress and type in ‘red’ for Google to search for both that dress style and the color you want.

Called Multisearch, it is one of Google’s biggest search updates since the company fully adopted MUM (Multitask Unified Model) after dumping the less powerful BERT last May. MUM amplifies search results by understanding the information behind both text and images to answer a query more fully. BERT, by comparison, adds context to a Google Search by looking at how the words in a query relate to one another.

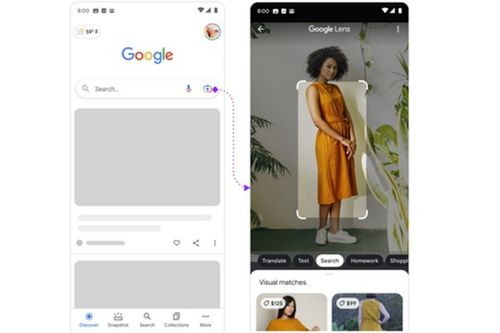

To use Multisearch, users tap the camera icon next to the search bar. Google Lens then launches and lets the user take a photo or choose an image from their library. Search shows results based on what Lens identifies. Users can then add text to the image search to refine the results.

For example, if you search with an image of the Los Angeles Lakers professional basketball team, you can add ‘LeBron James’ to find more specific results.

Beta in U.S. for now

Multisearch is currently available only in Google Search’s iOS and Android apps, as a beta feature in English in the U.S. Even so, it could prove a boon for shoppers trying to find a specific item or gardeners trying to identify certain plants.

Search product manager Belinda Zeng revealed Multisearch in a blog post. She said the new tool was “made possible by our latest advancements in artificial intelligence.”

Zeng said that Google’s search team is exploring ways in which the feature might be enhanced by its MUM AI model. Google adopted BERT only in November 2019 but dropped the algorithm in favor of a model around 1,000 times more powerful.

Multisearch works similarly to the Google-developed Woolaroo, which also scans and identifies objects. Instead of offering refined searches, however, Woolaroo tells users the names of those objects in several endangered languages. Woolaroo was released last May and was built using Vision AI.
