LegoNN brings cost savings by reusing existing decoder modules.

Researchers from Meta’s AI team have developed a new method for building machine learning architectures that has the potential to lower the cost and reduce the complexity of tasks such as speech recognition and translation.

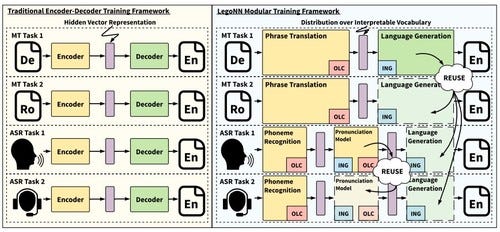

When computer systems perform automatic speech recognition (ASR) or machine translation (MT), they break the task down into several steps. For example, an MT model that translates text first has to translate the words it is given and then generate text in another language.

In speech recognition, the system needs to understand the words spoken, account for various ways these words are pronounced, and then generate the text.

Both ASR and MT have some common functions, such as decoding, but the way they are built means they cannot share decoders without customizing them each time. For example, an English-predicting decoder from a German-English machine translation system cannot be reused for Romanian-English without fine-tuning.

“This leads to wasted compute during training and less interpretable architectures overall,” according to the paper by Meta researchers.

Meta is introducing LegoNN, a method that lets developers reuse components like decoder modules without needing to fine-tune them. Typically, models built for tasks like MT tend to be siloed for specific tasks, which uses sizable computing resources during training.

Meta’s paper, first published on open-access scholarly repository arXiv earlier this month, applies the modularity principle to encoder-decoder architectures so that reusable modules can be developed. This means developers do not have to customize things such as decoders every time, especially when the task they’re programming for is underutilized.

Cost savings

It’s complex stuff, but essentially the paper suggests that AI developers attempting to conduct tasks such as ASR or MT would not need to build a dedicated decoder. Instead, they could reuse existing decoder modules – thereby reducing computational resources and saving considerable costs.

The LegoNN encoder-decoder system would give developers the ability to share already trained decoders and intermediary modules between tasks without the need to train systems separately or fine-tune for a specific task. LegoNN would also maintain the end-to-end differentiability of each distinct system, which would give developers the ability to make future adjustments if desired.
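The idea of sharing one decoder between tasks can be illustrated with a toy sketch. This is not LegoNN's implementation: the shared vocabulary, the lookup-table "encoders" and the names `make_lookup_encoder` and `shared_decoder` are all hypothetical, and nothing here is trained or differentiable. It only shows that when every encoder emits the same kind of output (assumed here to be one probability distribution over a fixed vocabulary per position), a single decoder can serve several tasks unchanged.

```python
from typing import Callable, Dict, List

# Hypothetical shared interface: one probability distribution over a fixed
# vocabulary per output position. This is an assumption made for illustration.
VOCAB = ["hello", "world", "<unk>"]
Distribution = List[float]      # same length as VOCAB, sums to 1
Interface = List[Distribution]  # one distribution per position


def one_hot(token: str) -> Distribution:
    """Return a distribution that puts all mass on `token` (or on <unk>)."""
    index = VOCAB.index(token) if token in VOCAB else VOCAB.index("<unk>")
    return [1.0 if i == index else 0.0 for i in range(len(VOCAB))]


def make_lookup_encoder(table: Dict[str, str]) -> Callable[[List[str]], Interface]:
    """Build a toy encoder that maps source words onto the shared vocabulary."""
    def encode(source_tokens: List[str]) -> Interface:
        return [one_hot(table.get(tok, "<unk>")) for tok in source_tokens]
    return encode


def shared_decoder(interface: Interface) -> List[str]:
    """Toy decoder: pick the most likely vocabulary entry at each position.

    It depends only on the interface, never on which encoder produced it.
    """
    return [VOCAB[max(range(len(VOCAB)), key=dist.__getitem__)] for dist in interface]


# Two task-specific encoders (toy dictionaries, not real models).
german_encoder = make_lookup_encoder({"hallo": "hello", "welt": "world"})
romanian_encoder = make_lookup_encoder({"salut": "hello", "lume": "world"})

# The same decoder is reused for both tasks without modification.
print(shared_decoder(german_encoder(["hallo", "welt"])))
print(shared_decoder(romanian_encoder(["salut", "lume"])))
```

In this sketch, adding a third task only requires a new encoder that respects the same interface; the decoder is left untouched, which is the kind of reuse the article describes.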

In the paper, the researchers used a LegoNN decoder on Workshop on Machine Translation (WMT) tasks. They found that the LegoNN decoder could also do the work of an ASR decoder without any drop in performance.

Next, Meta’s AI team plans to explore combining LegoNN with NLP pre-training techniques such as Google-developed BERT.

From LegoNN to OPT-66B

In other Meta AI news, its research team has released OPT-66B – an open source, 66 billion parameter version of its OPT language model.

The full-size OPT-175B model has 175 billion parameters and was released in early May. Released alongside it was a suite of smaller-scale baseline models trained on the same datasets, with parameter counts of 125 million, 350 million, 1.3 billion, 2.7 billion, 6.7 billion, 13 billion and 30 billion. The 66 billion parameter model is now available on GitHub, along with the Meta logbooks used for training.

The range of model sizes allows researchers to study the effect of language model scaling, the company said.
