Edge ML Processing Will Soon Bring AI To Every Device

February 16, 2018

SAN FRANCISCO, CA - A new machine learning processor promising 'ultimate scalability' will soon deliver AI capabilities to a huge range of edge devices.

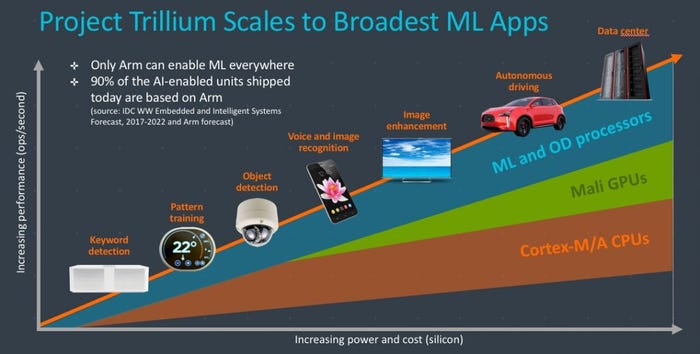

On Tuesday, Feb 13, Arm, one of the most influential chip designers in the world, announced Project Trillium, a new processing platform designed specifically for machine learning and neural network workloads. The Arm ML processor and the Arm Object Detection (OD) processor will initially bring advanced AI capabilities to mobile devices, and will eventually extend to IoT sensors, smart speakers, and much more.

"The rapid acceleration of artificial intelligence into edge devices is placing increased requirements for innovation to address compute while maintaining a power efficient footprint," explained Rene Haas, President of Arm's IP Products Group. "New devices will require the high-performance ML and AI capabilities these new processors deliver. Combined with the high degree of flexibility and scalability that our platform provides, our partners can push the boundaries of what will be possible across a broad range of devices."

Jem Davies, Arm's VP of machine learning, told Quartz that what makes these processor designs unique is their scalability: they can be used just as effectively in IoT devices as in large data centers. "Arm's new ML and object detection processors not only provide a massive efficiency uplift from standalone CPUs, GPUs, and accelerators, but they far exceed traditional programmable logic from DSPs," the company stated in a press release.

The ML processor is built specifically for machine learning tasks on Arm's scalable ML architecture, and promises market-leading performance and efficiency for AI applications. For mobile computing, this translates into more than 4.6 trillion operations per second, enough to support a host of AI capabilities ranging from language translation to facial recognition and navigation. The ML processor is paired with the OD processor, which feeds the ML unit real-time visual data for tasks such as facial recognition.

With 90% of AI-enabled devices already shipping on Arm architecture, companies like Qualcomm, Intel, NVIDIA, and Amazon will take notice as they race to bring specialized AI chips to market. Moving AI capabilities out of the cloud and to the edge of computing promises significant benefits. "Specialized AI hardware means - in theory - better performance and better battery life. But there are also upsides for user privacy and security, and for developers as well," James Vincent writes in The Verge. "Having dedicated hardware encourages more on-device AI, which means less risk to users of data getting leaked or hacked."

Project Trillium products will be available for early preview in April, with general availability in mid-2018.
