Novel approach needs only 'marginal amounts' of real-world data to train robots to do new tasks.

At a Glance

- GenAug generates variations of images from a simple scene to train robots in new tasks.

- GenAug can be used to generalize actions at scale using little input data.

- GenAug struggles with visual consistency for frame augmentation in a video.

Meta AI researchers have unveiled a new method that uses text-to-image models to teach robots how to do new tasks, opening the door for robotic systems to have broader and more general capabilities.

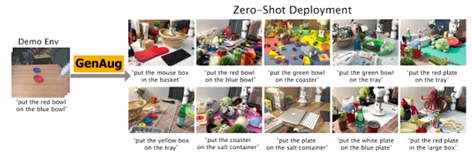

Dubbed GenAug, or Generative Augmentation for Real-World Data Collection, the method creates images of new scenarios by simply typing in text. Robots will train on these new scenarios to learn capabilities that can be generalized across tasks.

Without using generative AI, training robots for new tasks would require “large diverse datasets that are expensive to collect in real-world robotics settings,” the researchers wrote in a blog.

The new method can create entirely different and yet realistic images of environments to improve the robot’s ability to manipulate objects − with much less data. “We apply GenAug to tabletop manipulation tasks, showing the ability to re-target behavior to novel scenarios, while only requiring marginal amounts of real world data,” the researchers said.

According to Meta’s researchers, the GenAug method showed a 40% improvement in generalization to novel scenes and objects than traditional training methods for robotic object manipulation.

How it works

Robots need to be taught how to behave but also how to behave optimally. To achieve optimal behavior in settings like warehouses and factories, robotics engineers will apply techniques such as motion planning or trajectory optimization.

According to the team behind GenAug, however, such techniques “fail to generalize to novel scenarios without significant environment modeling and replanning.”

Instead, the researchers contend that using techniques such as imitation learning and reinforcement learning could generalize behaviors to teach robotic systems without having to model specific environments.

GenAug can use language prompts to change an object’s texture or shape or add new backgrounds to create examples to teach robots. Meta’s research paper shows that GenAug can be used to create 10 real-world complex demonstrations from a single, simple environment.

“We show that despite these generative models being trained on largely non-robotics data, they can serve as effective ways to impart priors into the process of robot learning in a way that enables widespread generalization,” Meta’s research reads.

Limitations

GenAug does have some limitations. For example, it does not augment action labels, meaning the system assumes the same action still works on the augmented scenes.

GenAug also cannot guarantee visual consistency for frame augmentation in a video. It takes about 30 seconds to complete all the augmentations for one scene, which might not be practical for some robot-learning approaches.

For future research into using generative AI to train robots, the Meta team said GenAug could see whether a combination of language models and vision-language models yields improve scene generations.

The code for GenAug is not yet available but is “coming soon,” according to Meta’s team.

About the Author(s)

You May Also Like

.jpg?width=700&auto=webp&quality=80&disable=upscale)

.jpg?width=700&auto=webp&quality=80&disable=upscale)

.jpg?width=700&auto=webp&quality=80&disable=upscale)

.jpg?width=300&auto=webp&quality=80&disable=upscale)

.jpg?width=300&auto=webp&quality=80&disable=upscale)

.jpg?width=300&auto=webp&quality=80&disable=upscale)