Meta also revealed a new data center design, a coding assistant and an update on its AI supercomputer.


At a Glance

- Meta unveiled its first custom AI chip, a data center designed for AI, a scalable video processor and a new AI coding assistant.

- Meta also said it has completed the second-phase buildout of its supercomputer, RSC, which was used to train LLaMA.

- Meta's strategy is to build, operate and control the full stack of next-generation AI infrastructure.

For those wondering what Meta CEO Mark Zuckerberg’s generative AI plans are, given his focus on the metaverse, wonder no more.

Today, the social media giant unveiled its first custom chip for running AI models, a new data center design ‘optimized’ for the heavy workloads of AI, and a scalable video processor. It also said it has completed the second-phase buildout of its 16,000-GPU supercomputer, which it uses to train large language models.

Meta also took the wraps off its new generative AI coding assistant, CodeCompose, which would compete against GitHub’s Copilot and Google’s enhanced Colab.


“We’re executing on an ambitious plan to build the next generation of Meta’s AI infrastructure,” said Santosh Janardhan, head of infrastructure at Meta, in a blog post. Meta custom-designs much of its infrastructure, from data centers to server hardware to mechanical systems, to optimize the end-to-end experience.

“This will be increasingly important in the years ahead,” Janardhan said. “Over the next decade, we’ll see increased specialization and customization in chip design, purpose-built and workload-specific AI infrastructure, new systems and tooling for deployment at scale, and improved efficiency in product and design support.”

What Meta announced

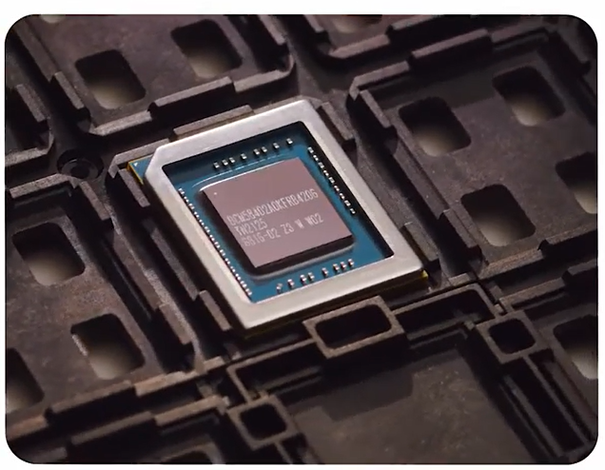

MTIA AI chip

MTIA stands for Meta Training and Inference Accelerator. It is Meta’s first custom chip for running AI models and is optimized for its own internal workloads. Zuckerberg said in a Facebook post that the chip will do things such as “power our AI recommendation systems to help figure out the best content to show you even faster.”

Meta CEO Mark Zuckerberg with the MTIA chip

Data center designed for AI

Meta unveiled a new data center design that it said is optimized for AI and is also faster and cheaper to build. This new design is “equipped to support liquid cooling hardware to handle training and inference at large scale, and a network designed to support large scale superclusters,” Zuckerberg shared.

The design will be more flexible, so Meta can pivot more quickly as the AI landscape shifts. It will house fewer but denser racks to support large-scale AI clusters, so these facilities will be smaller while offering the same capacity as older designs. It will also be optimized for water and energy efficiency.

Research SuperCluster (RSC) AI supercomputer

Meta has completed the second-phase buildout of RSC, one of the fastest supercomputers in the world, which was used to train LLaMA. It uses 16,000 Nvidia A100 GPUs. At full strength, it achieved nearly five exaflops of computing power. (A person would have to perform one calculation per second for about 31 billion years to match what a 1-exaflop system can do in one second.)
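The 31-billion-year comparison is simple arithmetic: one exaflop is 10^18 operations per second, and a year has roughly 31.6 million seconds. The short Python sketch below reproduces the figure. It assumes a 365.25-day year, and the helper name `human_years_to_match` is purely illustrative. The five-exaflop line is an upper bound, since RSC reached *nearly* that figure.

```python
# Rough check of the "31 billion years" comparison for a 1-exaflop system.
# Assumption: a year is taken as 365.25 days (Julian year).
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # 31,557,600 seconds

EXAFLOP = 1e18  # operations per second


def human_years_to_match(flops: float, seconds_of_compute: float = 1.0) -> float:
    """Years a person doing one calculation per second would need to match
    `flops` operations per second sustained for `seconds_of_compute` seconds.
    (Hypothetical helper, for illustration only.)"""
    total_operations = flops * seconds_of_compute
    # One calculation per second means total seconds == total operations.
    return total_operations / SECONDS_PER_YEAR


print(f"1 exaflop:  {human_years_to_match(EXAFLOP) / 1e9:.1f} billion years")
print(f"5 exaflops: {human_years_to_match(5 * EXAFLOP) / 1e9:.1f} billion years")
```

Running it prints about 31.7 billion years for one exaflop, consistent with the figure above, and about 158.4 billion years for five exaflops.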

“We use it to train our large language models, as well as the world’s first AI translation system for oral languages,” Zuckerberg wrote. “Building this cluster ourselves lets us tune it to train our next generation foundation models.”

CodeCompose

CodeCompose is Meta’s generative AI coding assistant for its own engineers. “Our longer term goal is to build more AI tools to support our teams across the whole software development process, and hopefully to open source more of these tools as well,” Zuckerberg said.

MSVP chip

MSVP stands for Meta Scalable Video Processor, the company’s first-generation, server-grade video processing hardware accelerator. The chip is designed to process video on demand (VOD) and livestreams at scale, since Meta’s social platforms serve billions of people worldwide.

The chip is programmable and scalable, supporting high-quality transcoding for VOD as well as low-latency, faster processing for livestreams. Meta claims the chip is nine times faster than traditional software encoders, delivering the same video quality while consuming only half the energy.
