The minds behind DALL-E have built a tool that can generate a representation of a 3D model in minutes.


OpenAI, the Microsoft-backed company behind DALL-E and ChatGPT, has unveiled Point-E, an AI system capable of generating representations of 3D models from text prompts.

Point-E works much like OpenAI’s image tool DALL-E: type in a prompt such as 'a corgi wearing a Santa hat' and the system generates a response, in this case a representation of a 3D model.

In a paper describing the system, OpenAI says its AI-powered method for 3D object generation produces its output in just one to two minutes on a single GPU.

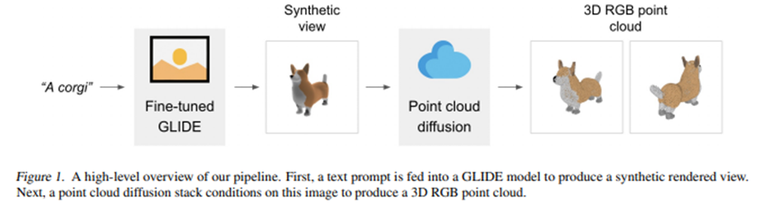

Explaining the process, the paper says: “Our method first generates a single synthetic view using a text-to-image diffusion model, and then produces a 3D point cloud using a second diffusion model which conditions on the generated image.”

Point-E consists of two models: a text-to-image model and an image-to-3D model.

To produce a 3D object from a text prompt, Point-E samples an image using the text-to-image model, and then samples a 3D object conditioned on the sampled image.
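The two-step sampling described above can be sketched in a few lines of Python. This is only an illustration of the control flow, not OpenAI's code: `sample_image` and `sample_point_cloud` are hypothetical stand-ins that return random data where the real system would run its diffusion models.

```python
import random
from typing import List, Tuple

Image = List[List[float]]            # toy grayscale image: rows of pixel values
Point = Tuple[float, float, float]   # one (x, y, z) point


def sample_image(prompt: str, rng: random.Random, size: int = 8) -> Image:
    """Hypothetical stand-in for the text-to-image model."""
    # A real model would denoise toward an image matching the prompt;
    # this stub ignores the prompt and returns random pixels.
    return [[rng.random() for _ in range(size)] for _ in range(size)]


def sample_point_cloud(image: Image, rng: random.Random, n_points: int) -> List[Point]:
    """Hypothetical stand-in for the image-conditioned point cloud model."""
    # Conditioning is faked: the image's mean brightness scales the cloud.
    pixels = [value for row in image for value in row]
    scale = sum(pixels) / len(pixels)
    return [
        (scale * rng.uniform(-1, 1), scale * rng.uniform(-1, 1), scale * rng.uniform(-1, 1))
        for _ in range(n_points)
    ]


def text_to_point_cloud(prompt: str, seed: int = 0, n_points: int = 1024) -> List[Point]:
    """Step 1: sample an image from the prompt. Step 2: sample 3D points from that image."""
    rng = random.Random(seed)
    image = sample_image(prompt, rng)
    return sample_point_cloud(image, rng, n_points)


cloud = text_to_point_cloud("a corgi wearing a Santa hat")
print(len(cloud))
```

The point of the structure is that the second stage never sees the text prompt, only the image produced by the first stage.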

The text-to-image model, like OpenAI's DALL-E, is trained on a large corpus of paired images and text, while the image-to-3D model is trained on a smaller dataset of 3D models paired with images.

Credit: OpenAI

According to OpenAI, both of these steps can be performed “in a number of seconds, and do not require expensive optimization procedures.”

Point-E doesn't generate finished 3D models directly but rather point clouds, which can be converted to meshes and saved in file formats such as STL or PLY for use in platforms like Unity, Maya and Blender.
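To make the file-format step concrete, here is a minimal sketch of how a point cloud could be written out as an ASCII PLY file. It is not Point-E's own export code; the function name `point_cloud_to_ply` is made up for illustration, and it assumes each point is a plain (x, y, z) tuple with no color data.

```python
from typing import Iterable, Tuple

Point = Tuple[float, float, float]


def point_cloud_to_ply(points: Iterable[Point]) -> str:
    """Serialize (x, y, z) points as an ASCII PLY document (vertices only)."""
    pts = list(points)
    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(pts)}",   # number of points that follow
        "property float x",
        "property float y",
        "property float z",
        "end_header",
    ]
    body = [f"{x:.6f} {y:.6f} {z:.6f}" for x, y, z in pts]
    return "\n".join(header + body) + "\n"


# Two example points; a generated cloud would contain many more.
ply_text = point_cloud_to_ply([(0.0, 0.0, 0.0), (1.0, 0.5, -0.25)])
print(ply_text)
```

A file written this way holds only vertices. Turning it into a mesh with faces is a separate surface reconstruction step, which this sketch does not attempt.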

According to OpenAI’s researchers, Point-E has the potential to “democratize 3D content creation for a wide range of applications” including virtual reality, gaming and industrial design.

OpenAI’s research team released the point cloud diffusion models, along with evaluation code and models, via GitHub. A demo of Point-E is available on Hugging Face.

The team behind the generation method acknowledged its limitations but was content with Point-E’s pace, saying, “While our method still falls short of the state-of-the-art in terms of sample quality, it is one to two orders of magnitude faster to sample from, offering a practical trade-off for some use cases.”

Point-E isn’t the only AI-powered tool capable of generating representations of 3D models. Back in October, Google unveiled DreamFusion, a generative AI tool that can generate 3D representations of objects without 3D training data. Instead, DreamFusion uses 2D images of an object generated by the Imagen text-to-image diffusion model to understand different perspectives of the object it is trying to generate.
