R-NaD method can be used in situations where others’ intent is unknown

Google’s DeepMind has unveiled DeepNash, an AI agent designed to play the board game Stratego at a level akin to human experts.

Board games have long been used as a yardstick for measuring the progress of AI, from IBM’s chess-playing Deep Blue, which beat world champion Garry Kasparov some 25 years ago, to DeepMind’s own AlphaGo, which beat Go world champion Lee Sedol.

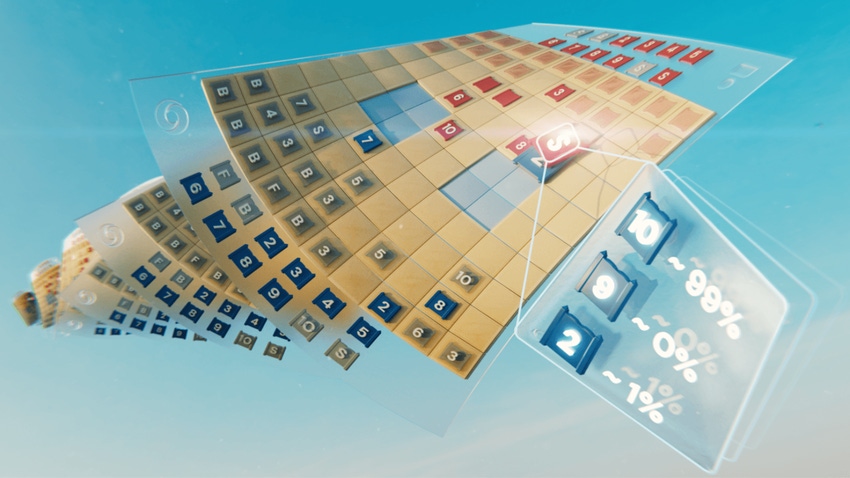

But unlike chess and Go, Stratego is “a game of imperfect information,” according to DeepMind. “Players cannot directly observe the identities of their opponent's pieces.”

Players start Stratego by arranging their 40 playing pieces in whatever starting formation they like, with each side’s setup hidden from the other as the game begins. The challenge of the game comes from not knowing the identities of the opponent’s pieces, meaning players need to consider all possible outcomes when placing their own.

Because players cannot see their opponent’s setup at the start of the game, other AI game systems, such as DeepMind’s AlphaZero, are not easily transferable to Stratego.

Instead, DeepNash uses a novel approach based on a combination of game theory and model-free deep reinforcement learning. “Model-free” means DeepNash is not attempting to explicitly model its opponent's private game-state during the game.

The AI agent is powered by a new game-theoretic algorithmic idea, Regularised Nash Dynamics (R-NaD), which steers the system’s learning toward a Nash equilibrium.

Named after mathematician John Nash, a Nash equilibrium is where each player is assumed to know the equilibrium strategies of the other players, and no one has anything to gain by changing only one's own strategy.
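To make the definition concrete, here is a minimal Python sketch that checks whether a pair of strategies is a Nash equilibrium in a small two-player, zero-sum game. It is an illustration only: rock-paper-scissors stands in for Stratego, and the names `PAYOFF`, `exploitability` and `is_nash` are hypothetical helpers, not anything from DeepMind’s paper or code.

```python
import numpy as np

# Assumed toy game: rock-paper-scissors payoffs for the row player.
# The game is zero-sum, so the column player receives the negative.
PAYOFF = np.array([
    [0, -1, 1],   # rock     vs rock, paper, scissors
    [1, 0, -1],   # paper
    [-1, 1, 0],   # scissors
], dtype=float)


def exploitability(row_strategy, col_strategy, payoff=PAYOFF):
    """Total amount both players could gain by changing only their own strategy.

    A value of zero (up to rounding) means neither player can improve
    unilaterally, which is the Nash equilibrium condition.
    """
    row_strategy = np.asarray(row_strategy, dtype=float)
    col_strategy = np.asarray(col_strategy, dtype=float)
    value = row_strategy @ payoff @ col_strategy   # row player's expected payoff
    best_row = np.max(payoff @ col_strategy)       # row's best pure reply
    best_col = np.min(row_strategy @ payoff)       # column's best reply minimises row's payoff
    return (best_row - value) + (value - best_col)


def is_nash(row_strategy, col_strategy, tol=1e-9):
    """Return True if no player gains more than tol by deviating alone."""
    return exploitability(row_strategy, col_strategy) <= tol


uniform = [1 / 3, 1 / 3, 1 / 3]
always_rock = [1, 0, 0]
print(is_nash(uniform, uniform))          # mixing evenly leaves nothing to exploit
print(is_nash(always_rock, always_rock))  # either player would rather switch to paper
```

When both players mix evenly, neither gains by deviating, so the check passes. When both always play rock, each would do better by switching to paper, so the check fails.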

Using the idea of a Nash equilibrium, DeepNash learned by playing against itself and was then tested against other Stratego bots and against human players on the online Stratego platform, Gravon. The AI achieved win rates of 97% against bots and 84% against some of the top expert human players, earning DeepNash an all-time top-three ranking on the platform.

Vincent de Boer, paper co-author and former Stratego World Champion, said: “The level of play of DeepNash surprised me. I had never heard of an artificial Stratego player that came close to the level needed to win a match against an experienced human player.”

“But after playing against DeepNash myself, I wasn’t surprised by the top three rankings it later achieved on the Gravon platform. I expect it would do very well if allowed to participate in the human World Championships.”

According to DeepMind, the R-NaD method used to power DeepNash can be applied to other two-player, zero-sum games.

“R-NaD has the potential to generalize far beyond two-player gaming settings to address large-scale real-world problems, which are often characterized by imperfect information and astronomical state spaces,” the company said.

DeepMind also states that the R-NaD method could be applied to other areas of AI that feature a large number of human or AI participants with different goals who might not have information about the intentions of others, for example the large-scale optimization of traffic management to reduce driver journey times.

DeepNash, which was unveiled in a paper published in Science, comes hot on the heels of Cicero, an AI model built by rival researchers at Meta capable of playing the strategy game Diplomacy.
