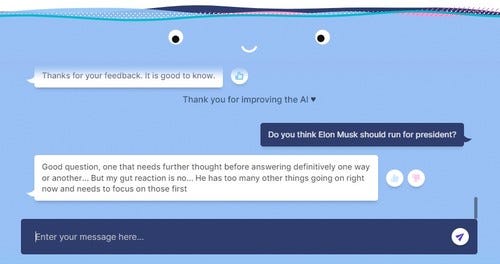

See what it says about whether Elon Musk should run for president.

Meta has released a chatbot to the public so that it can be trained on real-world conversations, even though some of those chats may turn offensive.

The company recently released BlenderBot3, a chatbot built on OPT-175B, Meta's 175-billion-parameter language model, as part of its effort to build a more robust conversational AI system.

Most publicly available training datasets for chatbots are collected through research studies and rely on human annotators to label the text, wrote Joelle Pineau, managing director of fundamental AI research at Meta, in a blog post.

As such, they do not reflect the “diversity of the real world,” she said.

“Researchers can’t possibly predict or simulate every conversational scenario in research settings alone,” she wrote. “The AI field is still far from truly intelligent AI systems that can understand, engage and chat with us like other humans can.”

Meta hopes BlenderBot3 will bridge that gap by training on real-world conversations and receiving feedback from actual human users "in the wild," Pineau said.

Try it for yourself in this live, interactive conversational AI demo. (Users must be over 18 to use BlenderBot3, acknowledge that it’s to be used for research and entertainment purposes only and that it can make untrue or offensive statements. Users must also agree not to intentionally trigger the bot to make offensive statements.)

Figure 1:  Should Tesla CEO Elon Musk run for president?

Pineau said Meta also developed new techniques to shield the chatbot from users who try to trick it into making offensive comments, while still ensuring that the bot learns from "helpful" teachers. (In 2016, Microsoft's infamous Tay chatbot was manipulated by users into spewing hateful comments.)

“Our goal is to help the wider AI community build models that can learn how to interact with people in safe and constructive ways,” Pineau said.

BlenderBot3's predecessors, BlenderBot and BlenderBot2, broke new ground as chatbots that wove personality and empathy into their conversations rather than offering purely transactional responses, according to Meta. To craft responses, the bots search the internet and retain a memory of prior conversations with the user. They also keep learning as they chat.


According to Meta, BlenderBot3 outperformed its predecessors. Human users gave its conversations a 31% higher overall rating, judged it to be twice as knowledgeable, and found it factually incorrect 47% less often.

But BlenderBot3 also had some publicized failures. It repeated internet conspiracy theories, such as claims that billionaire George Soros created swine flu or that Donald Trump is still president. It even took aim at Meta CEO Mark Zuckerberg, reportedly calling him "too creepy and manipulative."

Pineau acknowledged that it is “painful to see some of these offensive responses.” However, “public demos like this are important for building truly robust conversational AI systems and bridging the clear gap that exists today before such systems can be productized.”

Ben Wodecki contributed to this report.