'A new computing platform has emerged,' says Jensen Huang

At a Glance

- ChatGPT has ushered in a 'new computing platform' similar to the PC, internet and mobile web revolutions.

- Countries and companies will develop their own specialized, proprietary large language models and ChatGPT-like chatbots.

- Nvidia is putting its AI infrastructure in the cloud to give companies access to tools they need to build their models.

Sixty-seven years after the term "artificial intelligence" was coined, AI is finally realizing its potential, thanks to ChatGPT.

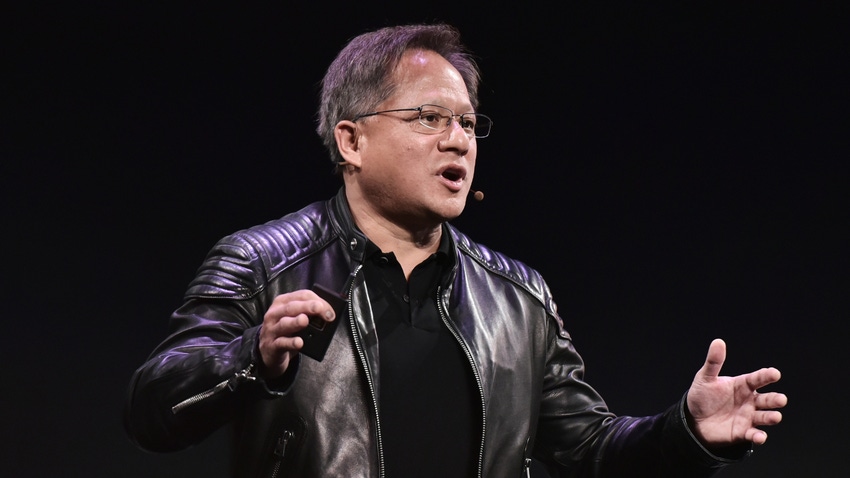

AI is at “an inflection point” because several technological innovations came together, Nvidia CEO Jensen Huang said in his latest earnings call with analysts. Importantly, the excitement around generative AI's versatility and capability has “triggered a sense of urgency at enterprises around the world to develop and deploy AI strategies.”

Today, “a new computing platform has emerged,” declared Huang.

New companies, new applications and new solutions to long-standing challenges are being invented at an “astounding rate,” he observed. “The level of activity around AI, which was already high, has accelerated significantly.”

Nvidia should know. It has a good sense of AI’s surging popularity because it held 91% of the enterprise GPU market in 2022, according to IDC. GPUs, or graphics processing units, are specialized chips ideal for AI and high-performance computing.

“There's no question that this is a very big moment for the computer industry. Every single platform change, every inflection point in the way that people develop computers happened because it was easier to use, easier to program and more accessible,” Huang said.

He likened the generative AI revolution to other big tech shifts: when personal computers hit the mass market, when the internet was born and when the mobile cloud arrived via smartphones. With the mobile cloud, it became “so easy” to develop, deploy and distribute apps thanks to app stores, he said.

“The same exact thing is now happening to AI,” Huang said. “In no computing era did one computing platform, ChatGPT, reach 150 million people in 60, 90 days. This is quite an extraordinary thing. And people are using it to create all kinds of things. What you're seeing now is just a torrent of new companies and new applications that are emerging.”

Why ChatGPT is more than a fad

How exactly did one chatbot trigger such an avalanche of excitement?

ChatGPT benefited from several tech breakthroughs, notably the transformer architecture developed by Google researchers and large language models, to become a chatbot that “surprised everybody with its versatility and its capability,” Huang said.

ChatGPT is a single AI model that can perform tasks and skills it was never explicitly trained for, Huang explained. It can write not only in many human languages but also in computer languages such as Python, Python for Blender (a 3D graphics program) and even the decades-old programming language COBOL.

“It’s a program that writes a program for another program,” Huang said. “This type of computer is utterly revolutionary in its application because it has democratized programming to so many people and really has excited enterprises all over the world.”

One piece of anecdotal evidence: Huang said inference activity (the use of a trained neural network to make predictions) on large language models running on Nvidia's Hopper and Ampere data center GPUs has gone “through the roof in the last 60 days.”

ChatGPT was launched about 90 days ago and it has since racked up a billion page views, according to Similarweb.

Proprietary ChatGPTs and a compute bottleneck

ChatGPT is built on a large language model trained on text gathered from the internet in multiple languages. As a result, its output draws on general knowledge scraped from publicly available online text.

This means that countries and companies wanting their own ChatGPT-like chatbots may be motivated to build their own large language models that reflect different cultures and bodies of knowledge, as well as specialized fields such as biology or physics.

Companies also might want to develop their own ChatGPT-like chatbot that is trained on the firm’s proprietary information.

“The most valuable data in the world are proprietary, and they belong to the company,” Huang said. “It's inside their company. It will never leave the company. And that body of data will also be harnessed to train new AI models for the very first time.”

But the bottleneck is the amount of computing power needed to create and deploy these specialized large language models. Most companies lack the AI supercomputing infrastructure, model algorithms, data processing capabilities and training techniques needed to develop their own models.

Nvidia believes it has a solution: It launched DGX Cloud through Microsoft Azure, Google Cloud Platform and Oracle Cloud to let companies access its AI supercomputer infrastructure, models and algorithms. Customers also get access to Nvidia's NeMo and BioNeMo customizable AI models, aimed at companies that want to build proprietary generative AI models and services.

Whether companies will flock to it remains to be seen. Huang said that by hosting these AI capabilities in the cloud, “we can accelerate the adoption of generative AI in enterprises.”
