A concept artist's personal essay against the evils of generative AI

December 14, 2022

As a concept artist in the video game industry, I approached the recent explosion of AI-powered image generation services with thoughts that ranged from ‘That’s kind of neat’ to ‘This will be the death of art.’ At the moment, I mostly just feel used.

My major concerns and frustrations primarily revolve around the datasets used by these AI services. AI image generation is only made possible by the indiscriminate collection of billions of images from across the internet. These datasets have been found to contain private medical data as well as graphic images of sexual and physical violence. Your own face may be in there.

Those things alone should be enough to make us stop and question the ethics and legality of such a massive data collection effort. But even if you were to set those cases aside and view them as a few outliers that slipped in among five billion other pieces of content, the fact is these datasets consist of an enormous amount of copyrighted material.

Companies such as Stability AI and Open AI have created what amounts to the source code of their services using the copyrighted work of artists from all around the world. I have even been able to verify that my own work is being used through a site called ‘Have I Been Trained?’ I did not give them permission to use my work, and I have no way to ask them to stop. Even if I could ‘opt out,’ once an AI has been trained there is currently no way of getting it to forget what has been added without completely starting over.

Data ‘laundering’

Take the example of LAION, or Large-scale Artificial Intelligence Open Network. It is a nonprofit and one of the large players in this field that aims to make large-scale machine learning models, datasets and related code available to the general public. As a nonprofit, it is allowed to collect the copyrighted material as part of research.

However, the data collected is being used by companies like Stability AI to create for-profit services. By the way, Stability AI has said it supports LAION’s research. LAION is even listed on the Stability AI website alongside its products. This unethical ‘laundering’ of data through a nonprofit to avoid legal penalties should not be allowed to continue, but unfortunately it is becoming a trend.

Open AI, developers of the image generator DALL-E, actually consists of two companies: the for-profit Open AI LP and the nonprofit parent Open AI Inc. Open AI disclosed this structure on its blog: “We want to increase our ability to raise capital while still serving our mission, and no pre-existing legal structure we know of strikes the right balance. Our solution is to create Open AI LP as a hybrid of a for-profit and a nonprofit which we are calling a capped-profit company.”

Regardless of the legal implications of what they are doing, the simple fact is that it is wrong for these organizations to profit off work they do not own, and did not ask permission to use.

So why don’t the AI companies simply limit the dataset to the public domain and avoid the whole issue? I think it is for two reasons.

Reason 1: They think that if they move fast enough and grow large enough, everyone will throw up their hands and say it is too late to stop. The cat’s out of the bag. I have already seen plenty of this sentiment online, and it could be exactly what the AI companies want. They are counting on visual artists not being willing to stand up for their rights, and the ownership of their work.

Stability AI is also in the process of developing an AI music generator called Dance Diffusion. The following was stated on the developers site: ”Dance Diffusion is also built on datasets composed entirely of copyright-free and voluntarily provided music and audio samples. Because diffusion models are prone to memorization and overfitting, releasing a model trained on copyrighted data could potentially result in legal issues.”

So why was so much care taken to only use copyright-free music in the Dance Diffusion dataset, and no such consideration given to visual artists? Because the music industry is notoriously litigious, and visual artists are not. We do not have big labels looking to protect their assets in the illustration industry. It is mostly up to the individual to protect his or her work, and in the face of such overwhelming forces, that is not an easy thing to do.

Reason 2: Results would not be as good if they limit the dataset. Without all those images ripped from sites such as ArtStation and DeviantArt, users would not be able to add the name of their favorite contemporary artist to a prompt in order to copy his or her style and content.

For example, artist Greg Rutkowski’s name has been used to generate more than 93,000 images on Stable diffusion alone. Recall Dance Diffusion’s disclosure again: “Diffusion models are prone to memorization and overfitting.” Overfitting is a somewhat nebulous term, but in this context it means copying things within the dataset.

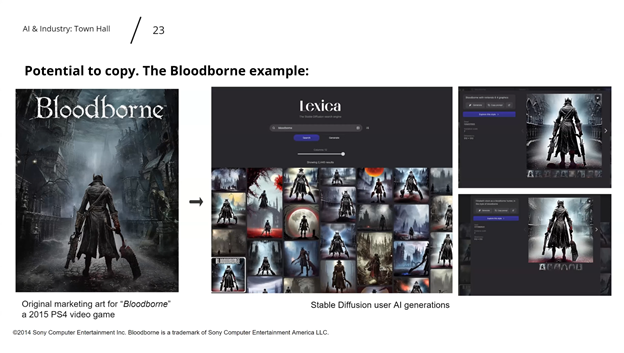

Below you can see images from Stable Diffusion that are clearly recreating the box art of a popular video game. If the companies were to limit themselves to public domain, users would not be able to generate images of intellectual properties they do not own. If I were to create and sell these images, I would at the very least get hit with a cease-and-desist order.

Slide from Concept Art Association town hall meeting

But not only are they taking money for these images, they are granting commercial use rights to the end users. I am counting the days until Disney catches on and sees thousands of images of their proprietary characters being monetized and granted the rights for commercial use by AI companies.

Shouldn’t this be enough to prove that they are profiting from the work of others without offering compensation or even the option to not participate? I support my family with the money I make creating art, and these services put my career in jeopardy. Text-to-image generators have certainly created a great deal of stress, anxiety, and anger. My plea is for the public to reconsider supporting this technology in its current state.

About the Author(s)

You May Also Like

.jpg?width=700&auto=webp&quality=80&disable=upscale)

.jpg?width=700&auto=webp&quality=80&disable=upscale)

.jpg?width=700&auto=webp&quality=80&disable=upscale)

.jpg?width=300&auto=webp&quality=80&disable=upscale)

.jpg?width=300&auto=webp&quality=80&disable=upscale)