AI Business provides an in-depth look at NLP use cases and tools

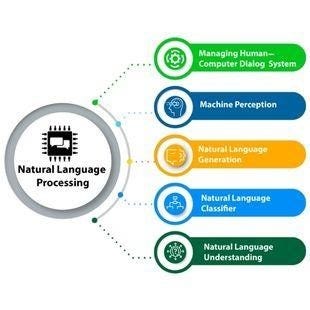

Natural Language Processing (NLP) is a branch of artificial intelligence that allows computers to communicate with humans.

Drawing on multiple disciplines, including computer science, data science and linguistics, NLP enables computers to read, understand and interpret human language, derive meaning from it and manipulate it.

While NLP is not a new science, the technology behind it is advancing rapidly, thanks to increased interest in human-to-machine communications, the availability of big data and enhanced algorithms.

Natural language processing is about giving machines the ability to understand and produce human language. Having machines talk and respond to us in a human-like manner is already a reality. The questions you type into your search bar, the sorting of emails into your junk folder, your smart assistant and the autocorrect on your phone are all powered by NLP. While humans communicate with words, computers operate in the language of numbers, which lets them perform data-intensive tasks at lightning speed.

How NLP works, and what main tasks can be accomplished

NLP applies multiple techniques for interpreting human language, deriving meaning and performing tasks. Text is first transformed into vectors of numbers (text vectorization). A machine learning algorithm is then fed training data together with the expected outputs (tags), so that it learns to associate particular inputs with the corresponding outputs. Statistical analysis methods help build the computer's knowledge bank and discern which features best represent the texts before the model makes predictions on unseen data.
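To make text vectorization concrete, here is a minimal sketch of one common approach, a bag-of-words count vector, written in plain Python. The function names and the three training examples are hypothetical and chosen only for illustration; real systems typically rely on a library and much larger datasets.

```python
from collections import Counter

def build_vocabulary(texts):
    """Map every distinct lowercase word in the corpus to a column index."""
    words = sorted({word for text in texts for word in text.lower().split()})
    return {word: index for index, word in enumerate(words)}

def vectorize(text, vocabulary):
    """Turn one text into a list of word counts, one slot per vocabulary word."""
    counts = Counter(text.lower().split())
    return [counts.get(word, 0) for word in vocabulary]

# Hypothetical training examples paired with their expected outputs (tags).
training_texts = ["great product", "terrible product", "great service"]
training_tags = ["positive", "negative", "positive"]

vocabulary = build_vocabulary(training_texts)
vectors = [vectorize(text, vocabulary) for text in training_texts]

for vector, tag in zip(vectors, training_tags):
    print(vector, tag)
```

Each text becomes a row of numbers with one column per vocabulary word (here: great, product, service, terrible), and it is these rows, together with the tags, that a learning algorithm would consume.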

Ultimately, the more data the algorithm receives, the more accurate the text analysis model tends to be. Eventually, the machine learning models become sophisticated enough to learn from data without manually written rules.

Image: Xoriant

Task 1: Sentiment Analysis

Sentiment analysis, or opinion mining, is one of the most popular NLP tasks. It classifies text by categorizing opinions as positive, negative or neutral, and helps businesses determine and monitor brand and product perception through customer feedback, social media monitoring, market research and customer service.
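As a rough illustration of the positive, negative and neutral categories, the sketch below scores text against two small word lists. This is a simple lexicon-based approach rather than a trained model, and the word lists and the function name `classify_sentiment` are assumptions made for this example.

```python
# Hypothetical, deliberately tiny sentiment lexicons.
POSITIVE_WORDS = {"good", "great", "love", "excellent", "happy"}
NEGATIVE_WORDS = {"bad", "terrible", "hate", "poor", "slow"}

def classify_sentiment(text):
    """Label text by comparing counts of positive and negative words."""
    words = [word.strip(".,!?").lower() for word in text.split()]
    positives = sum(word in POSITIVE_WORDS for word in words)
    negatives = sum(word in NEGATIVE_WORDS for word in words)
    if positives > negatives:
        return "positive"
    if negatives > positives:
        return "negative"
    return "neutral"

print(classify_sentiment("I love this phone, the camera is great!"))
print(classify_sentiment("Delivery was slow and support was terrible."))
print(classify_sentiment("The parcel arrived on Tuesday."))
```

A word-list approach like this cannot handle negation or sarcasm, which is one reason production systems usually learn sentiment from labelled examples instead.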

Task 2: Syntactic Analysis

Syntactic analysis examines the grammatical structure of given sentences, or parts of those sentences, and measures them against the formal rules of grammar.

Task 3: Semantic Analysis

Semantic analysis extracts meaning from sentence structure, word interactions and related concepts. Rather than sorting text into preset categories such as positive, negative or neutral, the machine groups data into logical clusters based on meaning.

Task 4: Tokenization

An essential task in NLP, tokenization breaks a string of words into semantic units known as tokens. Sentence tokenization splits a text into sentences, whose boundaries are generally identified by full stops. Word tokenization separates the words within a sentence by identifying blank spaces. For more complex structures, words that often go together (such as Tel Aviv) form collocations and can be treated as a single token.
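The sketch below shows sentence and word tokenization using only Python's `re` module, with an optional set of collocations that are merged into single tokens. The function names and the splitting rules are simplifying assumptions; real tokenizers handle abbreviations, hyphenation and many other edge cases.

```python
import re

def sentence_tokenize(text):
    """Split text after '.', '!' or '?' when followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

def word_tokenize(sentence, collocations=()):
    """Split a sentence into words, merging known two-word collocations."""
    words = re.findall(r"[A-Za-z0-9']+", sentence)
    tokens = []
    i = 0
    while i < len(words):
        pair = " ".join(words[i:i + 2])
        if pair in collocations:
            tokens.append(pair)   # keep the collocation as one token
            i += 2
        else:
            tokens.append(words[i])
            i += 1
    return tokens

text = "I flew to Tel Aviv. It was sunny!"
for sentence in sentence_tokenize(text):
    print(word_tokenize(sentence, collocations={"Tel Aviv"}))
```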

Task 5: Part-Of-Speech Tagging

PoS tagging, or grammatical tagging, labels each token (word) in a text corpus with its part of speech and other grammatical categories, such as tense, number and case. The tags help identify the relationships between words, which in turn helps to understand the meaning of a sentence.

Task 6: Dependency Parsing

Dependency parsing is the process of analyzing the grammatical structure of a sentence to find headwords, dependent words and how they relate to each other. Tags known as dependency tags represent the connection between two words in a sentence.

Task 7: Constituency Parsing

Constituency parsing is the process of analyzing sentences by breaking them down into sub-phrases, also known as constituents. Each sub-phrase belongs to a specific grammatical category, such as noun phrase or verb phrase.

Task 8: Lemmatization and Stemming

Humans often use inflected forms of a word or different grammatical structures when they speak. Stemming algorithms remove prefixes and suffixes to return a word to its root, which can produce incorrect meanings and spellings. Lemmatization considers the context and converts the word to its meaningful base form, called a lemma. Sometimes the same word can have several different lemmas, so the part-of-speech (PoS) tag is used to identify the word in that specific context.
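The difference between the two techniques can be sketched in a few lines. The stemmer below blindly strips a handful of suffixes, while the lemmatizer looks words up in a small table keyed by word and part of speech. The suffix list, the `LEMMAS` table and both function names are hypothetical stand-ins for the much larger rule sets and dictionaries that real tools use.

```python
SUFFIXES = ("ing", "ies", "ed", "es", "s")

def naive_stem(word):
    """Strip the first matching suffix; the result may not be a real word."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

# Hypothetical lemma table keyed by (word, part of speech).
LEMMAS = {
    ("studies", "NOUN"): "study",
    ("studies", "VERB"): "study",
    ("saw", "VERB"): "see",
    ("saw", "NOUN"): "saw",
    ("better", "ADJ"): "good",
}

def lemmatize(word, pos):
    """Return the dictionary base form for this word and part of speech."""
    return LEMMAS.get((word, pos), word)

print(naive_stem("studies"))      # stud  (not a valid word)
print(lemmatize("studies", "NOUN"))  # study
print(lemmatize("saw", "VERB"))   # see
print(lemmatize("saw", "NOUN"))   # saw
```

The word "saw" shows why the part-of-speech tag matters: as a verb its lemma is "see", while as a noun it stays "saw".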

Task 9: Stopword Removal

An important preprocessing step, stopword removal filters out high-frequency words that add little semantic value to a sentence (which, to, at, for, is) to decrease the size of the dataset and the time it takes to train a model. With stopwords removed, fewer and more meaningful tokens remain, which can improve task performance and classification accuracy.
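A minimal sketch of stopword removal follows. The stopword set extends the examples given above with a few more common words and is an assumption for this example; libraries ship much longer, language-specific lists.

```python
# Hypothetical stopword list, including the examples mentioned above.
STOP_WORDS = {"which", "to", "at", "for", "is", "the", "a", "an", "of", "and"}

def remove_stop_words(tokens):
    """Keep only the tokens that are not in the stopword list."""
    return [token for token in tokens if token.lower() not in STOP_WORDS]

tokens = "The parcel is waiting for you at the depot".split()
filtered = remove_stop_words(tokens)
print(filtered)  # ['parcel', 'waiting', 'you', 'depot']
```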

Task 10: Word Sense Disambiguation

Depending on its context, the same word can have different meanings. Humans usually resolve this unconsciously, whereas computers use two main techniques to determine which sense of a word is meant in a sentence: a knowledge-based approach that infers meaning by consulting dictionary definitions, or a supervised method that uses machine learning algorithms trained on examples labelled with the correct sense.
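The knowledge-based approach can be sketched with a simplified Lesk-style overlap: pick the sense whose dictionary definition shares the most words with the sentence. The two-sense mini-dictionary and the function name below are hypothetical, and the sketch ignores refinements such as stopword filtering.

```python
# Hypothetical mini-dictionary: each sense of "bank" has a short definition.
SENSES = {
    "bank": {
        "financial institution": "an institution that accepts deposits and lends money",
        "river edge": "the sloping land beside a river or lake",
    }
}

def simplified_lesk(word, sentence):
    """Choose the sense whose definition overlaps most with the sentence."""
    context = set(sentence.lower().split())
    best_sense, best_overlap = None, -1
    for sense, definition in SENSES[word].items():
        overlap = len(context & set(definition.split()))
        if overlap > best_overlap:
            best_sense, best_overlap = sense, overlap
    return best_sense

print(simplified_lesk("bank", "she sat on the bank of the river"))
print(simplified_lesk("bank", "the bank lends money to small firms"))
```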

Task 11: Named Entity Recognition

NER is the process of extracting entities from within a text, such as names, places, organizations, email addresses and more. This NLP technique automatically classifies each entity and organizes it into predefined categories. It proves most useful when sorting unstructured data and detecting important information, which is crucial for large datasets.
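A very small rule-based sketch of entity extraction is shown below: a regular expression finds email addresses and a lookup table (a gazetteer) finds known names. Both the pattern and the `GAZETTEER` entries are assumptions for illustration; modern NER systems are usually statistical models rather than hand-written rules.

```python
import re

# Simplified email pattern; real-world address rules are more permissive.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")

# Hypothetical gazetteer mapping known names to predefined categories.
GAZETTEER = {
    "Tel Aviv": "PLACE",
    "Google": "ORGANIZATION",
    "IBM": "ORGANIZATION",
}

def extract_entities(text):
    """Return (entity, category) pairs found by pattern or lookup."""
    entities = [(m.group(), "EMAIL") for m in EMAIL_PATTERN.finditer(text)]
    for name, label in GAZETTEER.items():
        if re.search(r"\b" + re.escape(name) + r"\b", text):
            entities.append((name, label))
    return entities

print(extract_entities("Contact ana@example.com about the IBM office in Tel Aviv."))
```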

Task 12: Text Classification

Text classification is the process of automatically analyzing and structuring text to understand the meaning of unstructured data. Results are organized into predefined categories (tags), and the technique is used mainly by businesses to automate processes and discover insights that lead to better decision-making. Sentiment analysis is a form of text classification.
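One classic way to assign predefined tags is a Naive Bayes classifier, sketched here with add-one smoothing in plain Python. The choice of Naive Bayes, the four training examples and the tag names are assumptions made for this example and are not drawn from any particular product.

```python
import math
from collections import Counter, defaultdict

def train(examples):
    """Count tags and per-tag word frequencies from (text, tag) pairs."""
    tag_counts = Counter()
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for text, tag in examples:
        tag_counts[tag] += 1
        for word in text.lower().split():
            word_counts[tag][word] += 1
            vocabulary.add(word)
    return tag_counts, word_counts, vocabulary

def predict(text, model):
    """Return the tag with the highest log-probability for the text."""
    tag_counts, word_counts, vocabulary = model
    total_examples = sum(tag_counts.values())
    best_tag, best_score = None, -math.inf
    for tag in tag_counts:
        score = math.log(tag_counts[tag] / total_examples)
        total_words = sum(word_counts[tag].values())
        for word in text.lower().split():
            # Add-one smoothing so unseen words do not zero out the score.
            count = word_counts[tag][word] + 1
            score += math.log(count / (total_words + len(vocabulary)))
        if score > best_score:
            best_tag, best_score = tag, score
    return best_tag

# Hypothetical support tickets with predefined tags.
examples = [
    ("refund my order", "billing"),
    ("invoice is wrong", "billing"),
    ("app crashes on login", "technical"),
    ("cannot reset password", "technical"),
]
model = train(examples)
print(predict("wrong invoice amount", model))
print(predict("password reset crashes", model))
```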

Tools used in NLP

Humans are conversing and engaging online. Whether via chat, email, websites or social media, we generate vast volumes of text data every second, which is an invaluable source of information. Businesses eager to gain insight into their customers' preferences and expectations are investing substantially in automated processes, as manual sorting proves far too overwhelming, time-consuming and repetitive for humans. However, most companies are struggling to find the best solution to analyze all the unstructured information.

NLP tools evolve quickly, gaining new features every year, and can sort data and uncover valuable insights in seconds. Available mainly as SaaS tools or open-source libraries, they are cost-effective and quick to deploy. SaaS tools come ready to use, with powerful cloud-based solutions that can be implemented with little or no code.

Offering pre-trained NLP models, SaaS tools are well suited to users who want a flexible, low-code solution to simplify their work. Open-source libraries, by contrast, are free, flexible, fully customizable and usually built in a community-driven framework with access to extensive support. They are aimed at developers with experience in machine learning, so users need time to set up infrastructure and money to invest in developers if there is no in-house team of experts already on hand.

Top SaaS tools and NLP libraries:

Tool 1: NLTK

One of the leading tools for NLP, the Natural Language Toolkit (NLTK) provides a whole set of programs and libraries for statistical and symbolic analysis in Python. Focused on research and education in the NLP field, NLTK boasts an active community, a wide range of tutorials, sample datasets and comprehensive resources. It helps to tokenize and tag text and to recognize named entities, is easy to use and provides a practical introduction to programming for language processing.

Tool 2: spaCy

Often described as one of the fastest NLP libraries for syntactic analysis, spaCy is a newer alternative to NLTK that comes with pre-trained statistical models and word vectors and is written in Python and Cython. Easy to use, well-documented and designed to support large volumes of data, spaCy best serves users wanting to prepare text for deep learning and excels at extraction tasks. Supporting tokenization for 49-plus languages, the tool breaks text into semantic segments and can be used for named entity recognition and for recognizing dependencies in sentences.

Tool 3: MonkeyLearn

A user-friendly, NLP-powered platform, MonkeyLearn offers pre-trained models to perform text analysis tasks or customized machine learning tailored to businesses seeking more accurate in-depth insights. Once models are trained, text analysis tools can be connected to apps such as Google Sheets, Zendesk, Excel and even Zapier. With no coding needed, integrations are made seamless and quick.

Tool 4: Google Cloud

Google Cloud Natural Language API provides several pre-trained models for sentiment analysis, content classification, entity extraction and more. Google Cloud also offers AutoML Natural Language, which allows customers to build customized machine learning models. Customers gain access to the Google Cloud infrastructure as well as Google's question-answering and language technology.

Tool 5: IBM Watson

IBM Watson is a suite of AI services hosted in the IBM Cloud. Its primary NLP feature is Natural Language Understanding, which enables users to recognize and extract keywords, categories, emotions, entities and more. It can be adapted to different industries and disciplines, and it comes loaded with helpful documents to get users started.

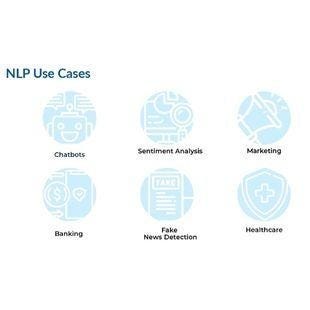

Natural Language Processing use cases

NLP acts as the cornerstone for numerous applications that we use every day, from translation and autocorrect to chatbots. The NLP market is estimated to reach $43B by 2025, an increase of $38B from 2018.

Image: Litslink

Use Case 1: Translation

More complex than a simple word-for-word replacement, language translation, as seen in Google Translate, deconstructs sentences and then reconstructs them in another language so that the meaning is preserved. Because computers do not understand context on their own, word sense disambiguation is deployed to resolve complex and ambiguous wording, following a pre-programmed algorithm that draws on a pre-built knowledge bank. As a result, translations between many languages occur almost instantaneously.

Use Case 2: Conversational AI

Automatic conversation between computers and humans is at the heart of chatbots and virtual assistants such as Apple’s Siri or Amazon’s Alexa. Conversational AI applications rely heavily on NLP and intent recognition to understand user queries and provide relevant responses.
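Intent recognition can be approximated with simple keyword matching, as in the sketch below. The intent names and keyword sets are hypothetical, and commercial assistants use trained models over far richer signals, so treat this only as an illustration of the idea.

```python
# Hypothetical intents, each described by a few trigger words.
INTENT_KEYWORDS = {
    "check_weather": {"weather", "rain", "forecast", "temperature"},
    "set_alarm": {"alarm", "wake", "remind"},
}

def recognize_intent(query):
    """Return the intent whose keywords overlap most with the query."""
    words = {word.strip(".,!?").lower() for word in query.split()}
    scores = {
        intent: len(words & keywords)
        for intent, keywords in INTENT_KEYWORDS.items()
    }
    best_intent = max(scores, key=scores.get)
    # Fall back when no keyword matched at all.
    return best_intent if scores[best_intent] > 0 else "fallback"

print(recognize_intent("Will it rain tomorrow?"))
print(recognize_intent("Wake me at seven"))
print(recognize_intent("Tell me a joke"))
```

Once an intent is recognized, the application maps it to a response or an action; the fallback case is where a chatbot would typically ask the user to rephrase.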

Use Case 3: Dictation and Transcription

Dictation and transcription are used often in healthcare and journalism: physicians and journalists dictate into a voice recorder, and the recording is later transcribed into text through the power of NLP. Such tools help to remove arduous, time-consuming and repetitive tasks and free up time for more meaningful and productive work. Dictation software is available on your iPhone's keyboard, and software like Otter.ai provides transcription.

Use Case 4: Financial Credit Scoring

Lenders, banks and financial institutions originally extracted data by hand from large unstructured documents such as loan, investment, income and expense statements. They now use NLP software to perform these tasks automatically. NLP-driven statistical analysis can also assess the creditworthiness of an individual or business much faster, and often more accurately and in more depth, than a human can.

Use Case 5: Spam Detection

NLP models that use text classification can detect spam-related words, sentences and sentiments in emails, text messages and social media messaging apps. The text is first cleaned and preprocessed, which includes removing filler and stop words, and is then tokenized and PoS-tagged before classification.

Natural Language Processing Limitations

Although NLP is a powerful tool with many benefits, there are still several limitations yet to be addressed.

Limitation 1: Irony and Sarcasm

Irony and sarcasm prove difficult for machines to decipher because they use words that are, by definition, positive or negative, yet imply the opposite. Even though models are programmed to recognize cues and phrases such as "yeah, right" or "whatever", humor and its context can't always be taught in a linear process (much as with humans learning a new language).

Limitation 2: Errors

Misspelled or misused words can create problems for text analysis. While autocorrect and grammar correction can handle common mistakes, they don't always understand the writer's intention. The same problems wreak havoc on the spoken word, with mispronunciations, different accents and stutters. However, as databases grow and smart assistants are trained by individual users, these issues can be substantially minimized.

Limitation 3: Colloquialisms and Slang

Idioms, informal phrases, culture-specific lingo and expressions can cause several problems for NLP, especially for models built for wide use. Colloquialisms may not have a dictionary definition, and the meaning of an expression can differ depending on the geographical location. Cultural language, including slang, morphs constantly, with new words emerging all the time, so it is difficult for machines to stay up to date.

Conclusion

Surrounded by text, we read signs, books, text messages, emails, articles, social media captions and webpages every day.

In 2020, it was estimated that more than a quarter of all organizations had a chatbot or virtual assistant integrated into their services. Driven by the global pandemic, communication moved almost entirely online, generating vast amounts of data and insights.

NLP has the power to act on those insights and to help workers work smarter, more efficiently and more intuitively. It can reshape industries, augmenting our professional and personal lives by increasing productivity. While we are still very much in the developmental stages, the rate of advancement is rapid, with breakthroughs occurring frequently.
