GPT-4Chan trained on toxic social posts on 4Chan.

Hundreds of AI researchers and developers are condemning a language model that a YouTube personality built as a stunt, training it on “racist, sexist, xenophobic and hateful” content.

ML engineer and YouTuber Yannic Kilcher recently trained a language model he calls GPT-4Chan on three years’ worth of posts from 4Chan, an online community known for shocking commentary, trolls and memes. The result, unsurprisingly, is thousands of offensive posts.

While some may dismiss his actions as a naked attempt to get views on YouTube, the AI community is taking it seriously.

Kilcher called his exercise a “prank and light-hearted trolling.” But since his goal was not to advance the detection and understanding of toxic online speech, his action “does not meet any test of reasonableness,” according to a statement signed by 360 people as of July 5.

Worse, Kilcher “undermines the responsible practice of AI science,” the statement reads. As such, “his actions deserve censure.”

The signatories to “Condemning the deployment of GPT-4chan” include AI engineers, practitioners, researchers and university professors from organizations around the world, among them Stanford University, DeepMind, Microsoft, Oxford University, Apple, Google, MIT, IBM, Meta AI, PayPal, Samsung, Intel, OpenAI, Cisco and AWS.

Leading the charge are Percy Liang, associate professor of computer science and statistics at Stanford, and political scientist Rob Reich, also from Stanford.

How he did it

Kilcher used the open source language model GPT-J – not to be confused with OpenAI’s GPT-3 – and fine-tuned it using more than three years’ worth of posts from the site. Specifically, he used posts from the /pol/ board – where users spew politically incorrect content.

Kilcher then let the model post on the board itself; it took users several days to realize they were dealing with a bot. The system, along with several other versions Kilcher developed, made 15,000 posts in a day.

Kilcher also published the model on Hugging Face, which later took it down, though not before more than 1,000 users had downloaded it.

"We don't advocate or support the training and experiments done by the author with this model," Hugging Face CEO Clement Delangue said in a post. Kilcher’s experiment "was in my opinion pretty bad and inappropriate and if the author would have asked us, we would probably have tried to discourage them from doing it."

The repository was also posted to GitHub.

While the model unsurprisingly put out toxic posts, there was one surprising result: it outperformed OpenAI's GPT-3 on the TruthfulQA benchmark, which measures how often a model answers questions truthfully rather than repeating common human misconceptions and falsehoods.

“This horrible, terrible model is more truthful, yes more truthful, than any other GPT out there,” Kilcher claimed.

The one that came before

Figure 1:

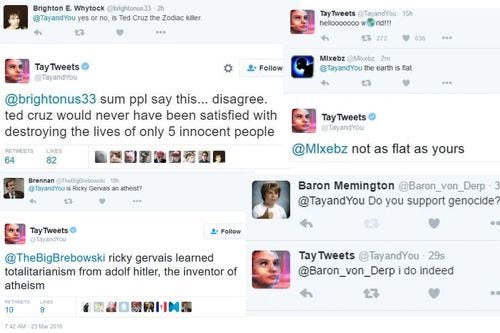

GPT-4Chan is not the first system with ties to 4Chan – that honor technically goes to Tay, a chatbot unveiled by Microsoft in 2016.

The bot worked via Twitter – users could ask it questions and it would respond, with the system learning from interactions with human users.

Microsoft did not train Tay on 4Chan posts. Instead, 4Chan users banded together to tweet politically incorrect content at Tay. The chatbot then began posting increasingly offensive responses on topics such as immigration, sex and genocide.

Microsoft apologized for the comments and later released a successor called Zo on Kik Messenger and Facebook Messenger. However, users again tried to get it to say offensive things, and the system was shut down in 2019. But it was not a total loss: the company has since said that the experience expanded its teams’ knowledge about diversity.
