Explainability is often not a sideshow but a core AI project deliverable and a must-have feature for several scenarios

January 27, 2022

Managers can choose between Scylla and Charybdis if they need an explainable AI model for complex prediction or classification tasks.

As we know from Homer, Odysseus had to pick which of the two unpleasant sea monsters, Scylla or Charybdis, he wanted to deal with on his journey.

Similarly, data scientists can solve complex AI challenges in two ways. First, they can build (deep) neural networks. Neural networks solve complex prediction and classification challenges, but they are black boxes.

Trained models consist of hundreds or thousands of formulas and parameters. Their behavior is, for humans, impossible to comprehend – a not-so-desirable option.

Alternatively, data scientists can rely on easy-to-understand models such as logistic and linear regressions or random forests. However, these models do not perform well for complex AI tasks – another undesirable option [AB18]. So is there any option for AI project managers to deal with complex AI challenges if they also need to understand the AI component’s behavior?

The solution is explainable AI (XAI). It unveils a little bit of the magic of the neural network black box. A few years ago, XAI was a niche research area. Today, it is part of the big AI development platforms.

The question is: Why? Why would project managers and their organizations invest time, money, and development resources in explainability? Isn’t high accuracy of the neural network’s predictions more relevant than everything else?

The need for explainable AI

Explainability is often not a sideshow but a core AI project deliverable. It is a must-have feature for three scenarios. Plus, there is a fourth, intuitive but not always substantiated XAI scenario [SM19, AB18].

The three must-have scenarios for XAI are:

The model is the deliverable

Justification for ethical and regulatory needs

Debugging and optimization

When detecting objects in images, a well-known training failure is that neural networks do not detect the actual object but react to its context.

Even competition-winning neural networks had such flaws. In one case, the neural network did not actually recognize horses in images. Instead, watermarks triggered the classification, since only the horse images in the training set carried such watermarks. Similarly, instead of identifying boats and trains, the neural network detected water and rails [SM19].

Suppose data scientists know – for example, in object recognition – which regions of a concrete image decide which type of object the neural network detects. Then, they can debug and optimize their neural networks much more easily. They detect more flaws similar to the watermark issue before models get into production systems and hit the real world, where they potentially cause harm or financial losses.

Today, we are at a turning point for AI usage. More and more AI models make decisions with a direct impact on everybody’s life. Does a bank give you a mortgage? How many vaccination doses go to a specific town? It comes as no surprise that customers and society do not accept the outcome of AI models as God’s word: always correct and not to be questioned.

Companies and organizations have to justify decisions, even if made by AI. For example, the US Equal Credit Opportunity Act demands that banks communicate reasons when rejecting a credit application. Also, the often-feared EU General Data Protection Regulation contains references to explainability.

At this moment, regulations might be high-level, vague, and weak. However, this is typically only the first step. More concrete regulations tend to follow over time, putting the topic on the senior management’s agenda soon.

Third, if the model is the core deliverable – not its application on new data – the model’s explanation is all that matters. A product manager needs to understand the buyers’ and non-buyers’ characteristics. The model has to provide that insight. It is not about applying the model to some customer data; it is about understanding what characterizes customers with high buying affinity based on the model.

The fourth, often-mentioned reason is user trust. Suppose users understand how the AI component reasons. Then, they are more willing to rely on it.

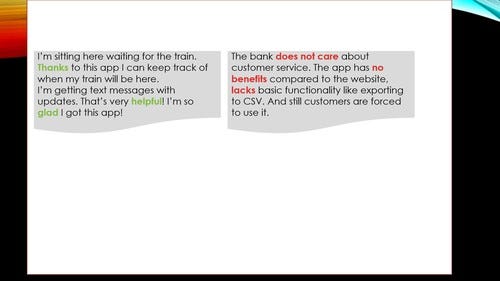

A sentiment analysis AI component, for example, could highlight the words that indicate a positive or negative sentiment and present them to the user (Figure 1). That more explanation equals more user acceptance is an intuitive assumption, just not (always) a correct one.

In one study, user confidence dropped when the AI component provided insights about its reasoning process – especially in cases where the AI component was sure and accurate [SBT20]. The conclusion should not be to abandon the idea of providing insight into the AI’s reasoning process to boost user confidence.

The conclusion should be that projects validate the impact of explanations on user confidence with real-life users. They might benefit from testing variants for which insights to present and how to present them for maximal user trust. Humans often behave less rationally than we expect and wish.

Figure 1: Explainable AI for Sentiment Analysis - Highlighting relevant Words or Phrases

The Explainability Challenge

Simple models such as linear and logistic regression deliver easy-to-understand models. Everyone can comprehend the following formula that estimates monthly apartment rents for a city:

AppRent = 0.5 * sqm + 500 * NoOfBedrooms + 130 * NoOfBathrooms + 500
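To illustrate why such a formula counts as explainable, here is a minimal Python sketch. The function name and the sample apartment (80 sqm, two bedrooms, one bathroom) are assumptions made for illustration; only the coefficients come from the formula above.

```python
def estimate_rent(sqm: float, bedrooms: int, bathrooms: int) -> float:
    """Monthly rent estimate using the coefficients of the formula above."""
    return 0.5 * sqm + 500 * bedrooms + 130 * bathrooms + 500


# Hypothetical apartment, chosen only for illustration.
sqm, bedrooms, bathrooms = 80, 2, 1
rent = estimate_rent(sqm, bedrooms, bathrooms)

# Every term can be read off directly: this is the "explanation".
contributions = {
    "living space": 0.5 * sqm,
    "bedrooms": 500 * bedrooms,
    "bathrooms": 130 * bathrooms,
    "base amount": 500,
}

for name, amount in contributions.items():
    print(f"{name:>12}: {amount:8.2f}")
print(f"{'total':>12}: {rent:8.2f}")
```

Each coefficient states how much one additional unit of a feature adds to the estimate, which is exactly the property a neural network lacks.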

Such simple models work only for classification and prediction tasks with limited complexity. For complex challenges, neural networks work much better. They consist of hundreds or thousands of equations – and provide highly accurate models. Any approach to presenting and understanding them in their complete baroque grandeur and complexity must fail.

Explainable AI is about choosing one aspect or lens and looking at the neural network with this specific perspective – and ignoring (all) other factors. In the following, we discuss two such lenses: global explainability and local explainability.

Many more concepts exist, such as extracting typical input vectors for the different classification results or building prototypes or representations of learned concepts and object types such as trains or cars [SM19]. However, they are more specific and complex. In contrast, global and local explainability provide a good overview of reasonably achievable goals for projects in the commercial sphere.

Looking at single predictions and classifications: Local explainability

Local explainability aims to understand the parameters which influence a single prediction. Why is this house so pricey? Because of the number of bathrooms or the garden size, or the pool? Or, in other words, what would influence the price more, adding a carport or a garden pavilion?

Local explainability does not aim to understand what – in general – impacts home prices in the market. It seeks to understand what influences the price of one single specific home most. One approach for local explainability is to create a simple, explainable model that describes the neural network behavior close to the point of interest. [RSG16]

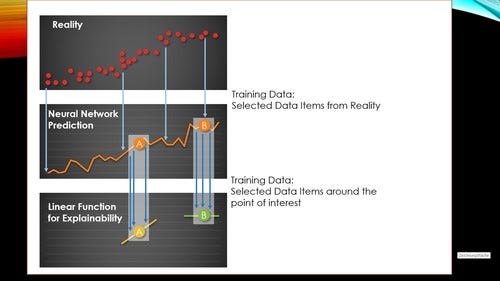

The starting point is a neural network (Figure 2, orange curve). To create a neural network, data scientists need real-world training data (red dots). In the example, these are historical home prices together with the living space sizes. Then, they construct a highly accurate and complex neural network (orange curve).

This model is perfect for predictions but, due to the high number of equations and weights, not ideal for explainability. Local explainability needs a simpler model for the area around the point of interest, e.g., a home with 80 sqm or 200 sqm living space (A and B in Figure 2).

It is a three-step approach for data scientists to create an XAI adequate model:

1. Probing: The algorithm needs price predictions from the neural network for living spaces around the points of interest A and B. Thus, it probes the neural network’s home price predictions for, for example, 79 sqm, 80 sqm, 80.5 sqm, and 82 sqm around A, and for 185 sqm, 203 sqm, and 210 sqm around B. These predictions become the new training data sets.

2. Constructing an explainable model: This step creates a model using the new training data. The underlying function must be easy to comprehend, i.e., explainable. A linear function has this property. In Figure 2, the yellow line explains the behavior around A, the green line the behavior around B.

3. Understanding and explaining: The explainable model allows understanding the local situations around A and B. Changes in the home size impact the price heavily for situation A, but not much in situation B. For models with more input features than just the living space size – garden size, number of bathrooms and carports, or whether the house has a balcony or an outdoor pool – the explanation gets, obviously, richer.

Figure 2: Local Explainability based on linear functions approximating the model behavior around the points of interest.
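The three steps can be sketched in a few lines of Python. This is a simplified illustration, not the method of [RSG16] or any library's API: the function `black_box_price` is a hypothetical stand-in for the trained neural network, and the helper names are assumptions. The probe points are the ones mentioned in step 1.

```python
import math


def black_box_price(sqm: float) -> float:
    """Hypothetical stand-in for the trained neural network (an assumption)."""
    return 1_000_000 / (1 + math.exp(-(sqm - 80) / 20))


def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def local_surrogate(model, probe_points):
    # Step 1 (probing): ask the black box for predictions near the point.
    predictions = [model(x) for x in probe_points]
    # Step 2 (explainable model): fit a linear function to those predictions.
    return fit_line(probe_points, predictions)


slope_a, intercept_a = local_surrogate(black_box_price, [79, 80, 80.5, 82])
slope_b, intercept_b = local_surrogate(black_box_price, [185, 203, 210])

# Step 3 (explaining): the slope is the local price change per extra sqm.
print(f"Around A: about {slope_a:,.0f} per additional sqm")
print(f"Around B: about {slope_b:,.0f} per additional sqm")
```

With this stand-in model, the slope around A is far larger than around B, which mirrors the explanation in step 3. Real-world approaches typically probe many input features at once and weight the probes by their distance to the point of interest.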

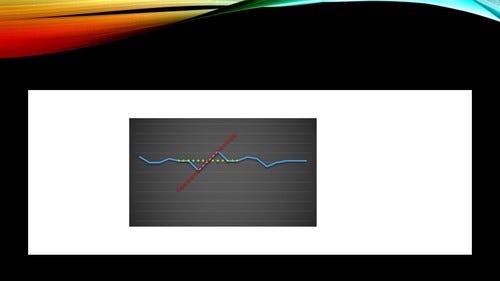

A remark for readers with a strong math background: When using a linear function for the explainable model, this function is similar to the gradient – with an essential difference.

Compared to calculating the gradient exactly at the point of interest, probing around the point of interest for creating a linear approximation function for the area reduces the impact of local disturbances and noise precisely at the point of interest that might not exist elsewhere in the nearby region (Figure 3).

Figure 3: Approximating the local behavior using a gradient (red) versus linear regression function based on probes in the area (green)
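A small numerical sketch makes the difference tangible. The function `bumpy_model` below is a made-up example, not taken from the article's figures: it follows an overall trend of 2 per sqm but has a small, fast ripple on top, so its exact gradient at 80 sqm is dominated by that ripple.

```python
import math


def bumpy_model(sqm: float) -> float:
    """Hypothetical model: trend of 2 per sqm plus a small, fast ripple."""
    return 2 * sqm + 0.5 * math.sin(40 * (sqm - 80))


def numeric_gradient(model, x, h=1e-4):
    """Central finite difference: the slope exactly at the point x."""
    return (model(x + h) - model(x - h)) / (2 * h)


def regression_slope(model, probe_points):
    """Least squares slope through model predictions at the probe points."""
    ys = [model(x) for x in probe_points]
    n = len(probe_points)
    mean_x = sum(probe_points) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in probe_points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(probe_points, ys))
    return sxy / sxx


gradient = numeric_gradient(bumpy_model, 80)
area_slope = regression_slope(bumpy_model, [78, 79, 80, 81, 82])

print(f"gradient at 80 sqm:         {gradient:.2f}")
print(f"regression slope 78-82 sqm: {area_slope:.2f}")
```

In this constructed case, the gradient reflects the ripple at exactly 80 sqm, while the regression slope stays close to the underlying trend of 2. How wide the probing area should be remains a judgment call for the data scientist.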

Understanding the big picture: Global explainability

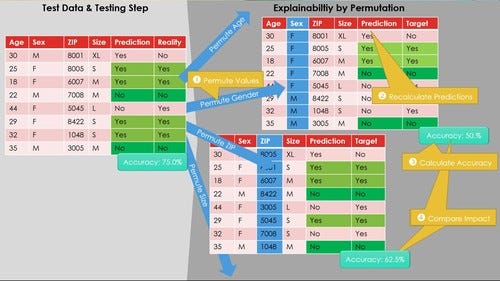

Global explainability aims for the impossible: explaining an inherently too-complex-to-understand neural network and its overall behavior to humans. One well-known algorithm is permutation importance. Permutation importance determines the impact of the various input features on a given model’s predictions. [Bre01, Slo]

The algorithm iterates over all input features, i.e., the columns of the table. Each iteration permutes the values of one particular column (Figure 4, step 1). In the upper-right table, the value of the “sex” column changes from male to female in the first row. It changes from female to male in the second-to-last row.

The next step is to repeat the prediction. The permutation importance algorithm uses the same model to calculate predictions for the altered data (Figure 4, step 2). Then, it calculates the drop in prediction accuracy due to the data manipulations (Figure 4, step 3). The more the accuracy drops, the more relevant the permuted feature is. By comparing, for example, the accuracy after shuffling the sex and the ZIP columns, a data scientist understands that the sex feature has a higher impact on customers’ shopping habits than the ZIP code (Figure 4, step 4).

Figure 4: Understanding Permutation Importance
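The following Python sketch walks through the same steps on a tiny, invented customer table. The data, the `model` function (a hypothetical stand-in for a trained model that happens to look only at the sex column), and the helper names are assumptions for illustration.

```python
import random

# Invented customer table: (sex, zip_code); label 1 means "bought".
ROWS = [
    ("F", "8001"), ("M", "8002"), ("F", "8003"), ("M", "8001"),
    ("F", "8002"), ("M", "8003"), ("F", "8001"), ("M", "8002"),
]
LABELS = [1, 0, 1, 0, 1, 0, 1, 0]


def model(row):
    """Hypothetical stand-in for a trained model; it only looks at sex."""
    sex, _zip_code = row
    return 1 if sex == "F" else 0


def accuracy(rows, labels):
    hits = sum(model(row) == label for row, label in zip(rows, labels))
    return hits / len(labels)


def permutation_importance(rows, labels, column, repeats=20, seed=0):
    """Average accuracy drop after shuffling the values of one column."""
    rng = random.Random(seed)
    baseline = accuracy(rows, labels)
    drops = []
    for _ in range(repeats):
        values = [row[column] for row in rows]
        rng.shuffle(values)  # permute the values of one column
        permuted = [
            row[:column] + (value,) + row[column + 1:]
            for row, value in zip(rows, values)
        ]
        # Predict again with the same model and record the accuracy drop.
        drops.append(baseline - accuracy(permuted, labels))
    return sum(drops) / repeats


importance = {
    "sex": permutation_importance(ROWS, LABELS, column=0),
    "zip": permutation_importance(ROWS, LABELS, column=1),
}
print(importance)
```

Because the stand-in model ignores the ZIP code, shuffling that column costs no accuracy, while shuffling the sex column does. For real projects, scikit-learn ships an implementation as `permutation_importance` in `sklearn.inspection` [Slo].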

Looking in the future – and at public cloud offerings

A lot of research and development goes on in the area of explainable AI. Thus, more options will become available in commercial products over the coming months and years.

At the time of writing, Google Cloud’s AI service, for example, provides three instance-level feature attribution algorithms: Integrated Gradients, Sampled Shapley, and XRAI [Goo20].

This sentence sounds academic, abstract, and highly intellectual. In short, no one but a highly qualified data scientist understands its exact meaning. The sentence is meant to sound like that. Its purpose is to highlight the different roles in projects. Data scientists have to know the algorithms. They have to know which works best for tabular data and which for image data. They have to understand this highly challenging sentence about algorithms provided by Google – and what other AI platform providers offer. Azure, for example, provides various algorithms for local and global explainability as part of its Responsible ML offering [MSA21].

AWS’s SageMaker AI platform offers similar features, too [AME]. Plus, data scientists have to be aware of the many more algorithms and approaches to explainable AI that this article did not discuss.

In contrast to data scientists, business analysts and project or product managers do not have to know all algorithms and the finer points of when to choose which. They have to understand local and global explainability and whether they need it in their project. Their role is to provide guidance to their data scientists – and consult their business stakeholders to get the maximum out of their AI investments.

Whether you are a business analyst or manager – and independent of your team’s AI platform for model development – this article contains everything for your mission and daily work. You can explain to your stakeholders when and why to use explainable AI.

Plus, you can discuss with your data scientists what you expect and work out with them the exact business requirements for explainable AI to build a great product.

It is time to put these concepts into action for the benefit of your organization.

Klaus Haller is a senior IT project manager with in-depth business analysis, solution architecture, and consulting know-how. His experience covers data management, analytics and AI, information security and compliance, and test management. He enjoys applying his analytical skills and technical creativity to deliver solutions for complex projects with high levels of uncertainty. Typically, he manages projects consisting of 5-10 engineers.

Since 2005, Klaus has worked in IT consulting and for IT service providers, often (but not exclusively) in the financial industry in Switzerland.

[AB18] A. Adadi, M. Berrada: Peeking inside the black-box: A survey on Explainable Artificial Intelligence (XAI), IEEE Access, Vol. 6, 2018

[AME] Model Explainability, https://docs.aws.amazon.com/sagemaker/latest/dg/clarify-model-explainability.html, last retrieved March 29th, 2021

[Bre01] L. Breiman: Random Forests, Machine Learning, Volume 45, Issue 1, 2001

[Goo20] Google (no author): AI Explainability Whitepaper, https://cerre.eu/wp-content/uploads/2020/07/ai_explainability_whitepaper_google.pdf, last retrieved March 27th, 2021

[MSA21] Model interpretability in Azure Machine Learning (preview), https://docs.microsoft.com/en-us/azure/machine-learning/how-to-machine-learning-interpretability, February 25th, 2021, last retrieved March 29th, 2021

[RSG16] M. T. Ribeiro, S. Singh, C. Guestrin: “Why Should I Trust You?”: Explaining the Predictions of Any Classifier, SIGKDD, 2016, San Francisco, US

[SBT20] Ph. Schmidt, F. Biessmann, T. Teubner: Transparency and trust in artificial intelligence systems, Journal of Decision Systems, 2020

[Slo] scikit-learn - Machine Learning in Python, https://scikit-learn.org/stable/modules/permutation_importance.html, last retrieved March 29th, 2021

[SM19] W. Samek, K.-R. Müller: Towards Explainable Artificial Intelligence, in LNCS, vol 11700, 2019
