Report shows a disconnect between technologists and business leaders.

Keeping humans in the loop when evaluating AI models is critical for model accuracy, but it is also the least funded stage of their lifecycle, according to a new report from Appen and The Harris Poll.

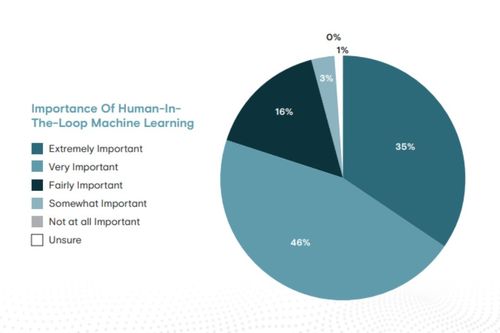

The State of AI and Machine Learning Report states that 81% of surveyed developers believe human-in-the-loop machine learning is very or extremely important, with 97% reporting that human oversight in evaluation is important for accurate model performance.

Despite the importance of oversight, human model evaluation was found to receive the smallest share of the budget, with some 40% of respondents admitting that this final stage of the model's lifecycle is their least funded.

“There is a gap between budget allocation and the importance of human-in-the-loop,” according to the report. “Model evaluation is critical to ensuring that the AI model is accurate and reduces the need to source more data.”

“By spending more budget on human-in-the-loop up front, companies will save money, time and will be less likely to require re-evaluations in the future.”

Other key takeaways:

Disconnect between technologists and business leaders: 42% of technologists say data sourcing is “very challenging,” compared to just 24% of business leaders.

Data accuracy is “critical” to the success of AI, but only 6% of respondents have accuracy rates higher than 90%.

93% agree that responsible AI is a foundation for all AI projects in their organization.

Appen also discovered that 88% of organizations use external data providers to monitor and evaluate systems. In addition, 86% of organizations updated their models at least quarterly in 2021, a figure that rose to 91% in this year’s edition of the report.

Around 92% of surveyed organizations agreed that finding the right data partner is critical to successfully deploying and validating a model. Further, some 83% admitted to wanting one single partner to support all stages of the lifecycle.

Appen’s report also suggests that finding the right partner with the technology and expertise is crucial to achieving high-quality results: 93% agreed that technology and expertise at each stage of the lifecycle are important to get high-quality results, with 51% strongly agreeing.

Importance of responsible AI

Nine out of 10 respondents said that responsible AI is a foundation for all AI projects within their organization. But just under half (49%) strongly agreed that their organization was committed to using responsible AI, while 44% somewhat agreed.

Almost all (97%) said that synthetic data is important to creating inclusive datasets, with 39% considering data diversity extremely important.

“With the importance of data diversity and desire to reduce bias, we predict this level of interest in new data formats will be mirrored globally as each market reaches maturity in their AI initiatives,” the report said.

“Data ethics isn’t just about doing the right thing, it’s about maintaining the trust and safety of everyone along the value chain from contributor to consumer,” said Erik Vogt, vice president of enterprise solutions at Appen.
