Sam Altman said dangers from more powerful AI systems are ‘closer’ than most people think

At a Glance

- OpenAI CEO Sam Altman testified before Congress that powerful AI needs to be regulated.

- Altman called for creating a central agency to license AI models, requiring independent audits, and developing safety standards.

- IBM recommends a 'precision regulation' approach.

The CEO of OpenAI today called on Congress to pass more regulations on artificial intelligence and urged lawmakers to put guardrails not only around current capabilities but around future ones as well.

“We spend most of our time today on current risks, and I think that’s appropriate,” he told members of the Senate Judiciary Committee. But “as these systems do become more capable, and I’m not sure how far away that is, but maybe not super far, I think it’s important that we also spend time talking about how we’re going to confront those challenges.”

This is because the “prospect of increased danger or risk resulting from even more complex and capable AI mechanisms certainly may be closer than a lot of people appreciate,” Altman said.

“If this technology goes wrong, it can go quite wrong.”

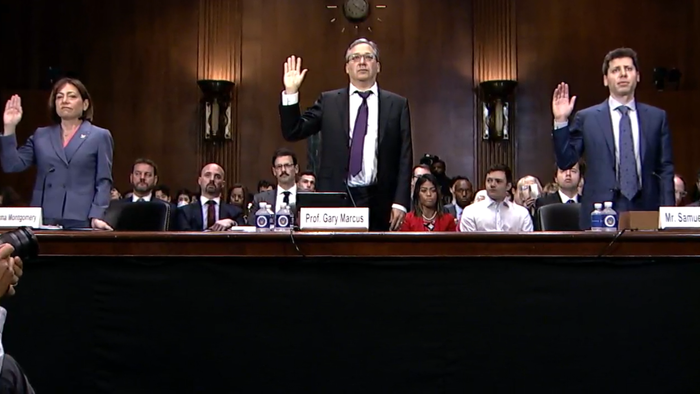

Altman, Christina Montgomery, IBM’s chief privacy and trust officer, and Gary Marcus, professor emeritus at New York University testified in a hearing before the Subcommittee on Privacy, Technology and the Law.

From left: IBM's Christina Montgomery, NYU's Gary Marcus and OpenAI's Sam Altman

Altman said that is why OpenAI does “iterative deployment,” which means releasing capabilities little by little instead of developing them more fully in secret and then releasing them to the public all at once.

“While these systems are still relatively weak and deeply imperfect,” OpenAI lets the public use them in a real-world setting “to figure out what we need to do to make it safer and better,” Altman said. “And that is the only way that I’ve seen in the history of new technology and products of this magnitude to get to a very good outcome.”

OpenAI vetted GPT-4 for more than six months before its general release.

In a rare move, Sen. Richard Blumenthal (D-CT) commended the speakers for not acting like company executives in the past who balked at any regulation. “I sense that there is a willingness to participate here that is genuine and authentic,” he said.

FDA-like licensing

Asked what three regulatory actions they would recommend, Marcus favored an FDA-like approval process, the creation of a nimble monitoring agency for pre- and post-deployment review, and funding for AI safety research. Earlier in the hearing, he also called for something like a nutrition label for AI models to improve transparency.

Altman said he would first like the U.S. to form a new agency that requires a license for any AI effort above a certain capability. The agency would also have the power to revoke a license to ensure compliance with safety standards.

Second, the U.S. should create a set of safety standards to evaluate dangerous capabilities of AI models – such as the ability to self-replicate or self-exfiltrate into the wild, among other risky behaviors.

Third, Altman said he would require independent audits of AI models from third-party experts that can check whether a model is compliant.

Altman also told the senators that the U.S. should crack down harder on big companies but less so on smaller companies and startups so they can continue to innovate.

Altman and Marcus called for international coordination, perhaps overseen by the U.N., OECD or the like. However, Altman said the U.S. should continue to lead these efforts since the tech was developed in America.

Both Altman and Marcus were in favor of creating a central agency that would license AI models to make sure they are not harmful. Montgomery stuck to her recommended “precision regulation” approach.

However, one concern raised by a senator was the lack of expertise at all levels of government to monitor these AI models, since the skills needed reside with employees of the tech companies being monitored.

IBM’s ‘precision regulation’ approach

Montgomery said IBM is recommending a “precision regulation” approach based on levels of risk, similar to the EU’s AI Act. “The era of AI cannot be another era of move fast and break things,” she said. “But we don’t have to slam on the brakes on innovation either.”

IBM’s recommended regulatory approach is four-fold:

- Develop different rules for different risks, with the strongest regulation applied to use cases with the greatest risks to society.

- Clearly define risks and provide clear guidance on AI uses that are inherently high risk. A common definition is key to enabling a clear understanding of what regulatory requirements will apply in different use cases and contexts.

- Transparency. AI should not be hidden. Consumers should know when they are interacting with an AI system and that they have the recourse to engage with a real person.

- Assessing the impact. For higher risk use cases, companies should be required to conduct impact assessments that show how their systems perform against tests for bias and other ways that they could potentially impact the public and to attest that they have done so.

Montgomery said businesses also play a critical role in AI safety by developing strong internal governance, such as appointing a lead AI ethics officer and creating an ethics board or similar group to serve as a central clearinghouse for resources to guide the implementation of AI.

“What we need at this pivotal moment is clear, reasonable policy and sound guardrails,” she said. “These guardrails should be matched with meaningful steps by the business community to do their part. Congress and the business community must work together to get this right. The American people deserve no less.”
