The addition of dedicated AI hardware will allow CPUs to keep pace with the increasing size and complexity of AI models

Today, just some processors have what it takes to accelerate AI neural networks. Tomorrow, all of them will.

Recent developments at Arm and Intel indicate that AI acceleration hardware is becoming a standard feature in their central processing unit (CPU) chips, which are at the heart of all kinds of electronic products.

This development illustrates that artificial intelligence has become so pervasive that AI acceleration has developed into an essential, general-purpose processing task for CPUs, alongside executing instructions from applications and the operating system.

This points to a future when all types of processing chips include some level of hardware AI acceleration, including CPUs, graphics processing units (GPUs) and infrastructure processing units (IPUs).

"Neural nets are the new apps," said Raja M. Koduri, senior vice president and general manager of Intel's Accelerated Computing Systems and Graphics Group, in an interview with ZDNet.

"What we see is that every socket, it's not CPU, GPU, IPU, everything will have matrix acceleration.”

AI CPUs in the data center

Both Arm and Intel have stated that future versions of their high-end CPU architectures will integrate dedicated AI acceleration hardware.

This marks a significant departure for cloud and data center CPUs, which historically have focused exclusively on delivering the highest level of general-purpose computing horsepower, to the exclusion of other features such as AI acceleration or graphics processing.

More significantly, this development illustrates that artificial intelligence has become so pervasive that AI acceleration has developed into an essential, general-purpose processing task for CPUs, alongside processing instructions from applications and the operating system.

Arm in March introduced the Armv9 CPU architecture intellectual property (IP), while Intel in August announced its Performance Core CPU. Both CPU architectures include hardware AI acceleration.

Arming up for AI

Arm positions Armv9 as an architecture that can span the gamut of applications, from internet of things (IoT) devices, to mobile systems, to high-end data-center servers.

Arm stated that during the next few years it “will further extend the AI capabilities of its technology with substantial enhancements in matrix multiplication within the CPU.”

Arm at its Arm DevSummit 2021 event in October emphasized its view that Armv9 will play a central role in making AI processing ubiquitous—and will represent a central element of the company’s planned acquisition by AI accelerator leader NVIDIA.

“Arm’s compute platform, combined with the strength of this developer ecosystem, and NVIDIA’s deep expertise in AI, will result in AI becoming as pervasive as compute is today,” said Arm CEO Simon Segars in his keynote address at the event.

Intel puts AI inside

At its Architecture Day event in August, Intel revealed that its Performance Core for data center applications includes a new matrix engine for accelerating neural networks.

Intel calls the overall approach its Advanced Matrix Extensions (AMX). The AMX engine is dubbed the Tiled Matrix Multiplication Accelerator and Intel describes it as “dedicated hardware and new instruction set architecture to perform matrix multiplication operations significantly faster.”
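To make the idea of tile-based matrix multiplication concrete, here is a minimal sketch in plain Python. It is not Intel's AMX implementation or instruction set. The function names `matmul_tiled` and `matmul_naive` and the `tile` parameter are hypothetical, chosen only for illustration. The sketch shows the general technique of splitting a large matrix product into small fixed-size blocks, which is the kind of work a dedicated matrix engine is built to speed up.

```python
def matmul_tiled(a, b, tile=2):
    """Multiply a (n x k) by b (k x m), working on one small tile at a time.

    Illustrative only: real matrix engines process each tile in hardware,
    whereas this sketch just walks the same blocks with ordinary loops.
    """
    n, k, m = len(a), len(b), len(b[0])
    c = [[0] * m for _ in range(n)]
    for i0 in range(0, n, tile):          # tile row of the output
        for j0 in range(0, m, tile):      # tile column of the output
            for p0 in range(0, k, tile):  # tile along the shared dimension
                # Multiply one tile of a by one tile of b and
                # accumulate the partial sums into the output tile.
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        acc = c[i][j]
                        for p in range(p0, min(p0 + tile, k)):
                            acc += a[i][p] * b[p][j]
                        c[i][j] = acc
    return c


def matmul_naive(a, b):
    """Reference result: plain row-by-column multiplication."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


a = [[1, 2, 3], [4, 5, 6]]
b = [[7, 8], [9, 10], [11, 12]]
result = matmul_tiled(a, b)
print(result)
```

The tiled version produces the same result as the naive one. The difference is only in the order of the work: each tile is small enough to be held close to the arithmetic units, which is the property that matrix hardware exploits.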

Like Arm, Intel views the addition of AI hardware acceleration to its data center CPUs as a key element in making AI and AI acceleration a standard feature in computer systems.

Paving the way to AI ubiquity

Arm’s and Intel’s moves to add dedicated AI hardware acceleration to their data-center-oriented CPUs and CPU IP represent a recognition that AI acceleration has become a standard feature in virtually all types of electronic systems.

CPUs historically performed the kinds of tasks that are essential to the functioning of all types of computer systems.

These tasks include conducting processes, calculations and operations based on instructions generated by the operating system, by application code and by other components in electronic systems.

In contrast, AI accelerators are regarded as specialized chips designed to accelerate AI and machine learning applications, including neural networks and machine vision.

However, the distinction between essential general-purpose processing and AI acceleration has become blurry as artificial intelligence increasingly infuses all applications and systems.

AI is becoming prevalent in electronic systems, with ever-growing numbers of edge and data center devices adopting at least a basic level of artificial intelligence acceleration.

CPUs are playing a major role in the proliferation of AI. CPUs are extensively used for AI inferencing in cloud and data center settings. CPUs in some applications are adding varying levels of AI capabilities.

For instance, Intel’s Xeon CPU chips for data center applications include the company’s Deep Learning Boost technology, which includes additional instructions designed to speed up less-demanding AI tasks such as inferencing.

However, these chips do not include dedicated AI hardware subsystems that are visible on the semiconductor die.

As a result, Omdia is not classifying these Xeons as AI processors or AI accelerators.

Despite that, AI acceleration hardware has already made its way into some CPUs.

For example, take Intel’s Core processor line, which is targeted at midrange PCs and integrates the company’s Iris Xe graphics processing unit (GPU) technology.

The on-chip GPU can be used to accelerate neural networks, making Intel’s Core chips a type of AI accelerator.

While CPUs with AI acceleration like the Intel Core are available, these chips are targeted at edge devices.

Up until now, the data center CPU has been the purest incarnation of the CPU, with every transistor on chips like Intel’s Xeon dedicated to running general-purpose computing with the highest possible performance.

The recent developments at Arm and Intel indicate that AI is becoming so universal in today’s software that hardware AI acceleration has transformed from an extra option to a standard, general-purpose feature.

Increasingly, all types of processing chips will include AI hardware acceleration—both for edge and data center.

AI acceleration everywhere

The global market for AI accelerator chips comprises CPUs with AI hardware acceleration, GPU-derived AI application-specific standard products (ASSPs), proprietary-core AI ASSPs, AI ASICs, field-programmable gate arrays (FPGAs) and digital signal processors (DSPs).

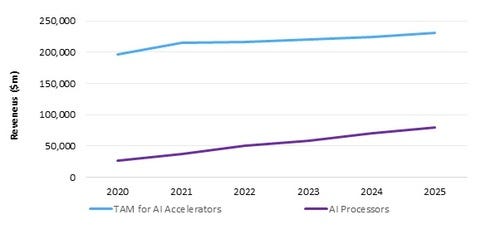

The market for these AI accelerators is large and growing fast, with revenue set to more than triple in the coming years, rising to $92 billion in 2026, up from $26 billion in 2020, according to current forecasts from Omdia’s AI Processors for Cloud and Data Center report and AI Chipsets for Edge report.
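As a quick check on the scale of that forecast, the two revenue figures above can be turned into a growth multiple and an implied annual growth rate. This is a rough sketch, not an Omdia calculation. It assumes simple annual compounding over the six years from 2020 to 2026, and the helper names `growth_multiple` and `cagr` are hypothetical.

```python
def growth_multiple(start, end):
    """How many times larger the end value is than the start value."""
    return end / start


def cagr(start, end, years):
    """Compound annual growth rate, assuming steady yearly compounding."""
    return (end / start) ** (1 / years) - 1


# Revenue figures quoted in the article, in billions of US dollars.
ai_2020 = 26.0
ai_2026 = 92.0

multiple = growth_multiple(ai_2020, ai_2026)
rate = cagr(ai_2020, ai_2026, 2026 - 2020)

print(f"Growth multiple: {multiple:.2f}x")
print(f"Implied annual growth: {rate:.1%}")
```

Under those assumptions, revenue grows by a factor of about 3.5, which works out to a little over 23 percent a year.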

However, with CPUs and other devices adopting dedicated AI hardware, the addressable market for AI accelerators could grow much larger.

The global market for microprocessors (including CPUs), along with DSPs, programmable logic devices (including FPGAs), logic ASSPs and logic ASICs, is set to rise to $230.2 billion in 2025, up from $215 billion in 2021, according to Omdia’s Application Market Forecast Tool.

In 2025, the market for these chips will be nearly three times larger than Omdia’s current projections for AI processors and chipsets.

While not all of these chips will integrate dedicated AI hardware acceleration by 2025 or the following years, these devices represent a total available market (TAM) for AI acceleration.

Figure 1: AI processor revenue forecast, world markets: 2019-26 (edge and data center). Source: Omdia

Implications for system designers

For system designers, the rising ubiquity of AI features in software and hardware means that whether to include dedicated hardware AI acceleration in their designs will no longer be a choice. Rather, they will have to decide what level of AI acceleration they need.

For designs conducting less-demanding types of AI, such as shallow machine learning or smaller-scale inference, today’s CPUs provide sufficient horsepower.

However, with the size and complexity of AI models on the rise, even simpler AI applications will require more AI processing capability in the future.

The addition of dedicated AI hardware will allow CPUs to keep pace with these demands, while also addressing more complex AI tasks, such as some level of deep learning and training.

However, for the most demanding AI tasks—including large-scale inferencing and training—designers will continue to need to employ other types of chips or cores that provide higher levels of AI acceleration than CPUs can attain, such as GPU-derived AI ASSPs, proprietary AI ASSPs and AI ASICs.

As Intel indicated, neural networks have become the new apps.

The addition of dedicated hardware AI acceleration to CPUs shows that AI acceleration is now an essential, general-purpose processing task.

With CPUs providing a new baseline, all designs at some point in the future may include some level of hardware AI acceleration.

For designers, the challenge will be determining what level of AI acceleration their design needs and picking the right cores to meet their requirements.

Jonathan Cassell is principal analyst, advanced computing at Omdia. Contact him at [email protected]
