What will it take to close the gap between training and implementation?

It is fairly well known that the gap between AI model training and implementation is a big one: Around 80% of projects fail to make it into production.

“The phenomenon of AI models getting trained but never deployed, or else proving to disappoint in production after successful validation, is a common one,” according to the report ‘AI Viewpoint: The Challenge of Implementation’ by sister research firm Omdia.

Reasons for failing to launch can include model drift, shortcut learning, and bias, as well as broader business issues such as poorly managed data or a failure to design an efficient business process, said report author Alexander Harrowell, Omdia senior analyst of Advanced Computing and AI.

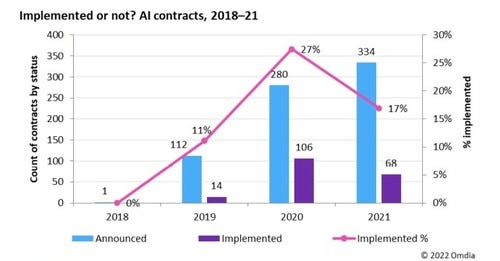

Figure 1:

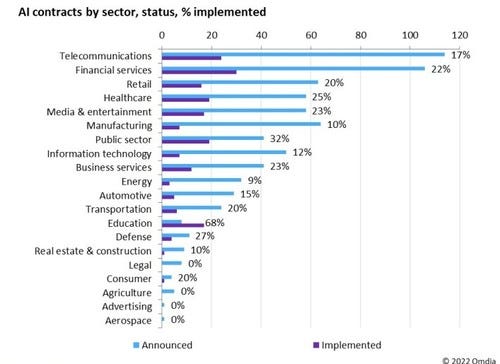

Figure 2:

Not only is it urgent for companies to invest in data analytics, governance and skills, but changes are also needed in how success is measured. Some of the most common metrics are 'soft' KPIs, such as 'customer engagement', which are more amorphous than ROI.

“The conclusion may be that the best way to close the implementation gap is to filter out bad projects further upstream by imposing more rigorous KPIs,” the analyst said.

Here are the main points of the report:

The implementation gap remains a reality and may even be growing. The rate at which AI projects make it from the strategy workshop or press release, through the development and validation phases, into production is still low, and a substantial inventory of undelivered projects exists. These serve to improve the hardware vendors’ numbers and the customers’ image but will eventually be found out.

A key driver of the gap may be projects that are justified by 'soft' KPIs. Retail and the wider consumer sector are the biggest AI growth areas, and they have experienced a bulge of investment in chatbots, virtual digital assistants (VDAs), and product recommendations in the last few years. KPIs such as 'customer engagement' are very common here but are substantially less well-defined than, for example, revenue.

Although those enterprises that report it have seen impressive ROI results, surprisingly few enterprises have chosen to use ROI as a KPI. This may be due to a so-called file drawer effect, where nobody wants to discuss the projects that did not achieve a return. As a general rule, final goal KPIs such as revenue, ROI, or productivity are rare. The discipline of such KPIs may be an important lever to close the gap.

Across diverse sectors, there is a common pattern of AI readiness. Enterprises across all the sectors studied have made progress in defining strategy and adjusting their organization, and a subset has developed technology and operations capabilities. However, very few are even out of second gear when it comes to data.

Financial services (finserv) and retail did relatively well on AI readiness for contrasting reasons. Finserv was at least 'less bad' in the data category, probably because firms in the sector already have substantial experience with processes such as credit scoring and fraud detection. Meanwhile, retailers (including e-commerce) stood out for having developed stronger in-house technology and operations skills.
