AI Sector Facing A 'Diversity Crisis', New Study Finds

April 17, 2019

NEW YORK - The AI industry is facing such 'extreme' race and gender disparity that the sector has reached a crisis point, a new study by NYU and the AI Now Institute has argued.

The diversity crisis in AI is by now well documented and, in the report authors' view, 'extreme'. Women comprise only 15% of AI research staff at Facebook and 10% at Google - and there is no public data on the presence of other gender minorities. Meanwhile, only 2.5% of Google's workforce is black, with Facebook and Microsoft both at 4%.

The report authors, who include Kate Crawford, Meredith Whittaker, and Sarah Myers West of the AI Now Institute and NYU, argue that the current state of the field is 'alarming'.

"The AI sector needs a profound shift in how it addresses the current diversity crisis," the report argues. "The AI industry needs to acknowledge the gravity of its diversity problem, and admit that existing methods have failed to contend with the uneven distribution of power, and the means by which AI can reinforce such inequality."

"Further, many researchers have shown that bias in AI systems reflects historical patterns of discrimination. These are two manifestations of the same problem, and they must be addressed together."

Outlining the failures of the industry's past approaches, the report claims that current efforts do not go far enough in acknowledging the nuances of race, gender, and other identities - with serious knock-on consequences for bias in AI as a result. It cites as an example 'pipeline studies', which attempt to optimize the flow of diverse job candidates from education to industry - yet have produced 'no substantial progress'.

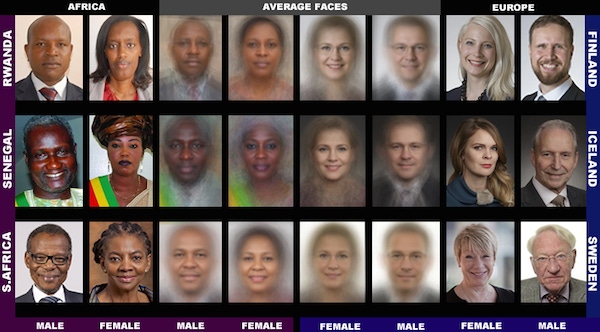

Calling on the industry to take action, the authors argue that the use of AI systems for the classification, detection, and prediction of individuals' race and gender is in urgent need of re-evaluation. Recent controversies attest to this issue, such as the AI industry's call on Amazon to stop selling facial recognition tools to law enforcement after studies found racial bias in their datasets.

"Such systems are replicating patterns of racial and gender bias in ways that can deepen and justify historical inequality," states the report. "The commercial deployment of these tools is a cause for deep concern."
