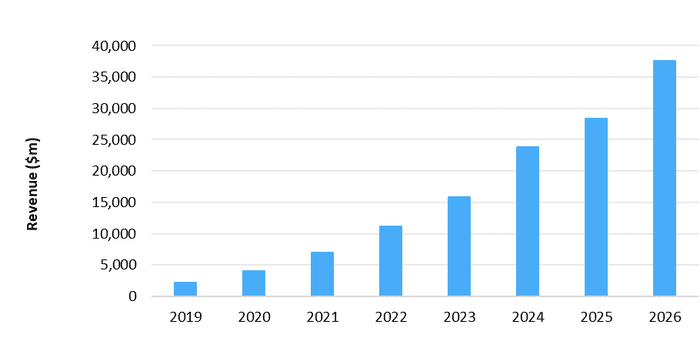

The growth potential for centralized AI is looking as strong as ever: worldwide market revenue for cloud and data center AI processors is forecast to reach $37.6 billion in 2026.

The cloud represents the foundation of today’s artificial intelligence-infused world, with hyperscalers’ neural network (NN)-based services underpinning the ongoing explosion in business and consumer adoption of AI technology.

However, much of the attention in the AI community has now shifted to a new frontier: the edge market, which promises enormous volumes and diverse growth opportunities.

Some may believe that this means the era of cloud and data center AI has passed. However, the reality is that the growth potential for centralized AI now is looking as strong as ever. Factors including businesses’ increasing operationalization of AI services and the spread of artificial intelligence usage to the enterprise are expected to continue to drive rising requirements for cloud and data center-based AI services. This in turn is set to trigger a massive increase in demand for semiconductors specifically designed to accelerate NN computing.

Worldwide market revenue for such cloud and data center AI processors is forecast to rise by more than a factor of five in the coming years, increasing to $37.6 billion in 2026, up from $6.9 billion in 2021, as reported by Omdia’s new AI Processors for Cloud and Data Center Forecast Report.
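
The two revenue figures also imply an average annual growth rate. The sketch below is an illustrative back-of-the-envelope calculation, not a number taken from the Omdia report: it assumes smooth compounding between the 2021 and 2026 figures, and the function and variable names are made up for this example.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.40 means 40% per year)."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the article, in billions of US dollars.
revenue_2021 = 6.9
revenue_2026 = 37.6

# How many times larger the 2026 figure is than the 2021 figure.
growth_multiple = revenue_2026 / revenue_2021

# Assumption: growth compounds evenly over the five years 2021-2026.
implied_cagr = cagr(revenue_2021, revenue_2026, years=2026 - 2021)

print(f"Growth multiple 2021-2026: {growth_multiple:.2f}x")
print(f"Implied CAGR: {implied_cagr:.1%}")
```

Under that assumption the multiple comes out above five, consistent with the forecast wording, and the implied annual growth rate is roughly 40%.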

Figure 1: AI processors for cloud and data center revenue forecast, world markets: 2019–26

© Omdia 2021

The cloud and data center remain essential as AI proliferates

The cloud and increasingly data centers are the only locations where certain kinds of highly demanding AI acceleration can take place. Cloud hyperscaler and data center operations maintain the only venues with the level of computing, storage, networking, and infrastructure resources available to conduct AI processing on a large scale—both training and high-volume inferencing. Although the increase in AI capabilities in edge devices will serve to mitigate demand growth for AI processing in the cloud and data center segment, centralized AI will remain indispensable for many tasks.

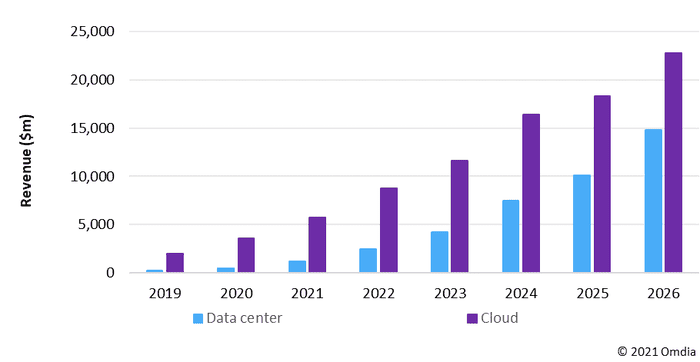

Meanwhile, AI usage is proliferating. Early adopters in the business world are operationalizing and scaling AI, both deepening and broadening their usage of the technology across their operations. Moreover, AI adoption is spreading from the cloud to the wider on-premises data center market. On-premises data center AI processor revenue is expected to expand at a CAGR of 78% from 2020 through 2026—more than double the 36% CAGR for the cloud sector.
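
To put the two growth rates in perspective, the sketch below compounds each CAGR over the 2020 to 2026 period. It is only an illustration of what the quoted rates imply. The base-year revenue of each segment is not given here, so the result is a relative multiple for each segment and says nothing about which segment is larger in absolute terms. The names are hypothetical.

```python
def compound_multiple(annual_rate: float, years: int) -> float:
    """Total growth multiple after compounding annual_rate for the given years."""
    return (1 + annual_rate) ** years


# CAGRs quoted in the article for 2020 through 2026.
ON_PREM_CAGR = 0.78
CLOUD_CAGR = 0.36
years = 2026 - 2020

on_prem_multiple = compound_multiple(ON_PREM_CAGR, years)
cloud_multiple = compound_multiple(CLOUD_CAGR, years)

print(f"On-premises segment: {on_prem_multiple:.1f}x its 2020 revenue by 2026")
print(f"Cloud segment:       {cloud_multiple:.1f}x its 2020 revenue by 2026")
```

Because growth compounds, a rate that is a little more than double translates into a far larger gap in cumulative growth: roughly 32 times the 2020 base for on-premises data centers versus a little over 6 times for the cloud.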

These and other factors will drive the continued robust growth of the cloud and data center AI processor market in the coming years.

Figure 2: AI processors for cloud and on-premises data centers by market segment

© Omdia 2021

GPU-based chips retain the lead—but alternatives arise

In its definition of AI processors, Omdia includes only those chips that integrate distinct subsystems dedicated to AI processing. These devices include GPU-derived AI application-specific standard products (GPU-derived AI ASSPs), proprietary-core AI application-specific standard products (proprietary-core AI ASSPs), AI application-specific integrated circuits (AI ASICs) and field-programmable gate arrays (FPGAs). While central processing unit (CPU) chips like Intel’s Xeon are used extensively for AI acceleration in cloud and data center operations, Omdia does not include these devices in its analysis of AI processors for cloud and data center.

GPU-based chips have enabled the proliferation of AI capabilities now unfolding throughout the technology world. The discovery that these chips provide a highly efficient approach to accelerating NNs established this architecture as the leading semiconductor part type for AI training and inferencing—both in data centers and at the edge. While commonly called GPUs, today’s GPU-based devices are actually specialized chips designed for AI acceleration, with blocks of logic dedicated to executing the kind of math required for NNs. In the light of this, Omdia is classifying these devices as GPU-derived AI ASSPs. Although these devices have been specialized for AI, they retain the flexibility and programmability of general-purpose GPUs.

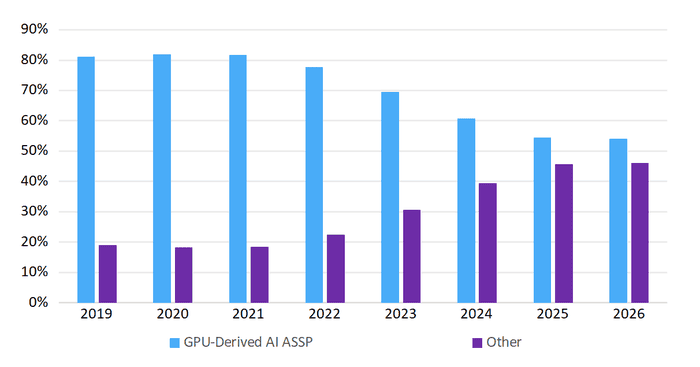

With NVIDIA having established a dominant position among AI software developers with its Compute Unified Device Architecture (CUDA) platform, its market-leading GPU-based AI ASSPs are expected to remain the default choice for many cloud and data center operations. However, future revenue growth for GPU-derived AI ASSPs will lag that of other types of chips in the cloud and data center market as buyers seek more efficient alternatives. Particularly strong growth will be generated by AI ASICs and proprietary-core AI ASSPs.

Some cloud hyperscalers and other companies have sought alternative AI processor architectures specifically designed to serve their own needs. These companies have developed their own AI ASICs that are aimed at achieving better performance, along with reduced cost and lower power consumption, compared to GPU-derived AI ASSPs. Hyperscalers and on-premises data centers also are adopting proprietary-core AI ASSPs in pursuit of similar advantages.

As a result, the GPU-derived AI ASSPs’ share of market revenue is set to decline to 54% in 2026, down from 82% in 2021.
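
A falling share does not mean falling revenue. The sketch below combines the share figures with the total market figures quoted earlier in the article ($6.9 billion in 2021 and $37.6 billion in 2026). It assumes both sets of numbers refer to the same market total, which the article implies but does not state outright, so the outputs are rough illustrations rather than Omdia figures. The names are hypothetical.

```python
# Total cloud and data center AI processor revenue, in billions of US dollars.
total_revenue = {2021: 6.9, 2026: 37.6}

# Share of that revenue attributed to GPU-derived AI ASSPs.
gpu_share = {2021: 0.82, 2026: 0.54}

# Assumption: the shares apply to the totals above.
gpu_revenue = {year: total_revenue[year] * gpu_share[year] for year in total_revenue}
other_revenue = {year: total_revenue[year] - gpu_revenue[year] for year in total_revenue}

gpu_multiple = gpu_revenue[2026] / gpu_revenue[2021]
other_multiple = other_revenue[2026] / other_revenue[2021]

for year in sorted(total_revenue):
    print(f"{year}: GPU-derived ${gpu_revenue[year]:.1f}B, other types ${other_revenue[year]:.1f}B")
print(f"GPU-derived growth: {gpu_multiple:.1f}x, other types: {other_multiple:.1f}x")
```

On these assumptions, GPU-derived AI ASSP revenue still more than triples over the period, while revenue for all other processor types combined grows several times faster from a much smaller base.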

Figure 3: Revenue percentage for GPU-derived ASSPs for cloud and data center vs. other types of processors, world markets: 2019–26

© Omdia 2021

Cloud and data center AI presents massive growth opportunities

Even amid the rise of the edge, the cloud and data center AI market remains a fast-growing, high-volume opportunity for suppliers of AI processors. Although GPU-derived AI ASSPs will remain the leading type of AI processor in the cloud and data center market at least through 2026, other types of chips will experience faster growth, spreading this growth opportunity among a variety of suppliers and processor technologies.

Jonathan Cassell is a principal analyst covering AI for the Advanced Computing Intelligence Service at Omdia. Contact him at [email protected]
