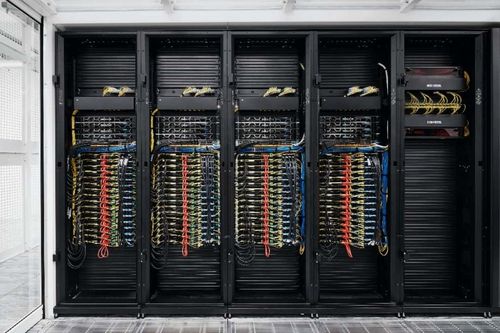

Graphcore, the British startup designing chips for AI workloads, has launched two new data center systems: the IPU-POD128 and the IPU-POD256.

The newly unveiled machines boast 32 and 64 petaFLOPS of AI compute respectively, with the company saying the launch “further extended” its reach into AI supercomputer territory.

The IPU-POD256 contains 256 GC200 Intelligence Processing Units (IPUs) and 16TB of memory, while the IPU-POD128 features 128 IPUs and 8.2TB of memory.

Both units began shipping at the end of last week from Atos and other systems integrator partners – and are available in the cloud.

Atos, the French IT giant, partnered with the startup in the summer, offering its IPUs as part of the ThinkAI product range.

“We are enthusiastic to add IPU-POD128 and 256 systems… into our Atos ThinkAI portfolio to accelerate our customers’ capabilities to explore and deploy larger and more innovative AI models across many sectors, including academic research, finance, healthcare, telecoms, and consumer internet,” said Agnès Boudot, SVP and head of HPC and quantum at Atos.

Unlimited power!

Graphcore was valued at $2.77 billion in January, after securing $222 million in a Series E funding round. Its investors include Microsoft, Dell, Samsung, and BMW.

The company’s second generation IPU products began shipping earlier this year.

These include the GC200 IPU, the IPU-M2000—a 1U server blade built around four Colossus GC200 IPUs—and the IPU-POD64, which can run very large models across up to 64 IPUs in parallel for large-scale deployments. Each chip contains 1,472 independent processor cores and 8,832 separate parallel threads, supported by 900MB of in-processor RAM.
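To put the per-chip figures in context, the short Python sketch below scales them up to the two new systems. It uses only numbers quoted in this article; the function and variable names are illustrative, and the memory totals cover in-processor RAM only, so they are not comparable to the larger system memory figures quoted above.

```python
# Figures quoted in the article for a single GC200 IPU.
CORES_PER_IPU = 1_472
THREADS_PER_IPU = 8_832
IN_PROCESSOR_MB_PER_IPU = 900

# Quoted AI compute per system, in petaFLOPS, keyed by IPU count.
QUOTED_PETAFLOPS = {128: 32, 256: 64}


def pod_totals(num_ipus: int) -> dict:
    """Scale the per-chip figures linearly to a system with num_ipus IPUs.

    Linear scaling is an assumption made for illustration only.
    """
    return {
        "cores": CORES_PER_IPU * num_ipus,
        "threads": THREADS_PER_IPU * num_ipus,
        "in_processor_mb": IN_PROCESSOR_MB_PER_IPU * num_ipus,
        "petaflops_per_ipu": QUOTED_PETAFLOPS[num_ipus] / num_ipus,
    }


if __name__ == "__main__":
    # Each core runs this many hardware threads, per the quoted figures.
    print("threads per core:", THREADS_PER_IPU // CORES_PER_IPU)
    for size in (128, 256):
        print(f"IPU-POD{size}:", pod_totals(size))
```

On these numbers, both systems work out to the same quoted compute per IPU, which suggests the headline petaFLOPS figures are simply the per-chip figure multiplied by the chip count.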

Graphcore’s latest products are designed for hyperscalers, national scientific computing labs, and enterprise companies with large AI teams in markets like financial services or pharma, the company said.

The IPU-PODs can enable faster training of large Transformer-based language models, and run large-scale commercial AI inference applications in production.

The startup also offers training and support to help customers with potential IPU-based AI deployments.

Korean ICT giant KT was among the first customers to use the new IPU-PODs. It deployed the IPU-POD128 to power its hyperscale AI services, using Graphcore IPUs in a dedicated high-density AI zone within its data centers.

“Through this upgrade we expect our AI computation scale to increase to 32 PetaFLOPS of AI compute, allowing for more diverse customers to be able to use KT’s cutting-edge AI computing for training and inference on large-scale AI models,” said Mihee Lee, SVP of cloud/DX business unit at KT.
