May 24, 2021

Another AI silicon designer becomes a unicorn

Artificial intelligence chip design company Tenstorrent has raised more than $200 million in a Series C round, valuing the company at $1 billion.

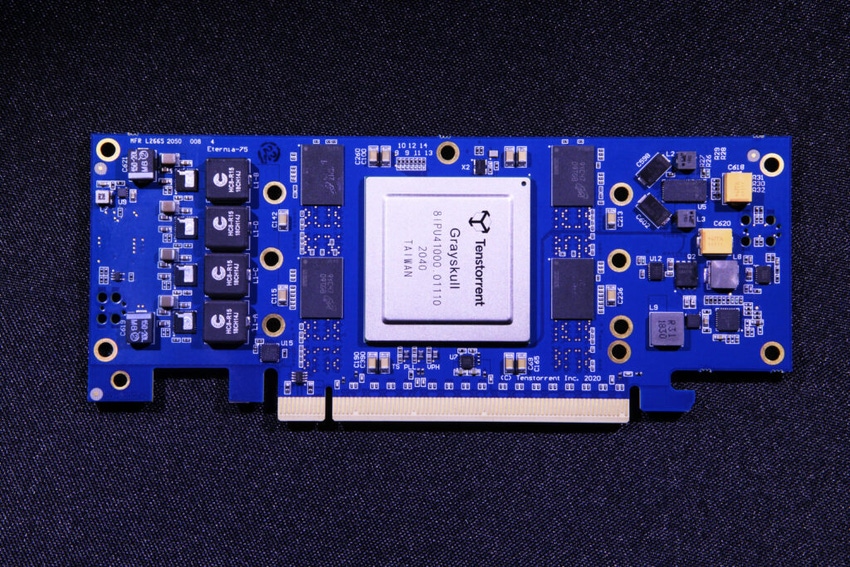

The round was led by Fidelity Management and Research Company, with Eclipse Ventures, Epic CG, and Moore Capital also taking part. Tenstorrent plans to launch its first processor, ‘Grayskull,’ in the second half of the year.

In a market full of hopeful semiconductor startups, Tenstorrent made headlines with a high-profile hire, bringing on Jim Keller as its chief technology officer and president last August.

Keller previously worked at Intel, Tesla, AMD, and Apple, and is often called a "rockstar chip designer" for his role in the development of crucial semiconductor advances, including Digital Equipment Corporation's Alpha processors, AMD's K7, K8, and Zen architectures, and Apple's A4 and A5 chips.

The Keller app

“We are bringing to market a highly programmable chip at an accessible price point as a means of driving innovation,” Keller said following the company’s funding round. “AI is going to transform the data center and Tenstorrent’s architecture is best positioned to address this data-written future by being a developer focused solution.”

In an interview with HPCwire, Keller claimed that Tenstorrent differs from competitors because the hardware was built in collaboration with software from the start.

“Some AI chip companies build chips with lots of GFLOPS or TFLOPS, and then they design the software later,” Keller said. “What sets Tenstorrent apart is networking, data transformation and math engines of the software stack that work in sync with the hardware.”

Keller was actually Tenstorrent’s first investor, having worked with founder Ljubisa Bajic at AMD.

“Our goal is to continue creating AI focused hardware for developers,” Bajic said. Grayskull is currently in early production and comes in 75W, 150W, and 300W TDP versions for server markets. The company has already released its inference software, and plans to release training software within a month.

The chip will be launched later this year, available to trial through Tenstorrent’s DevCloud. Larger systems will be available for purchase through unidentified channel partners.

Keller said that the company is currently working on a second-generation 'Wormhole' chip that features improved networking, and is designing its third and fourth generations of processors. The company will sell the chips on a PCIe board from as little as $1,000, scaling all the way up to $100k partner-made systems.

The approach differs from SambaNova, currently the most valuable of the new crop of AI chip startups. The company’s Cardinal chip is only available as-a-service as part of its DataScale offering. AI Business spoke to the company’s CEO Rodrigo Liang earlier this year to find out about the benefits of this model, and what the $5bn business plans to do next.

Then there’s Groq, which last month raised $300m to reach a $1 billion valuation. Founded by the designers of Google's TPU processor, Groq hopes to convince people that single-core chips are the future of AI.

December 2020 saw Graphcore raise $222m at a $2.7bn valuation with a focus on large-scale parallel deployments for large AI models.

Then there's Lightmatter, which raised $80m earlier this year for a very different approach – photonic computing. The startup uses light for processing, bringing huge efficiency improvements, but at the cost of accuracy.

Another alternative is being developed at Cerebras, the company behind the world’s largest chips. The startup believes that the best way to deal with increasing AI compute demands is to take advantage of a whole wafer. It launched the latest version of the Wafer Scale Engine last month.

Numerous other competitors are fighting for a share of the market, including NeuReality, Kneron, Wave Computing, Flex Logix, Esperanto Tech, Luminous Computing, Hailo, and Fathom Computing.

All of these hopefuls are betting that slowing advances in conventional processors combined with a boom in artificial intelligence workloads have left the market ripe for disruption. But the incumbents aren’t resting on their laurels, with Intel, Nvidia, and AMD investing heavily in advancing their hardware offerings.

Intel, in particular, has also embarked on an acquisition spree, picking up AI chip companies like Habana and Nervana with mixed success. Nvidia, meanwhile, is doubling down on its core GPU technology that has served it so well – and expanding into Arm-powered AI chips, as it seeks to acquire Arm. AMD is also looking to bolster its offering with the acquisition of FPGA company Xilinx, while building out its GPU line-up and hitting its stride with powerful Epyc server CPUs.

Then there are the cloud providers, all of which are publicly or secretly working on chip hardware, mostly focused on AI processing. Last week, Google unveiled the fourth generation of its successful TPU line, while AWS produces its own Trainium and Inferentia silicon. Microsoft is working on Arm processors, but has yet to reveal just how far along those efforts are.
