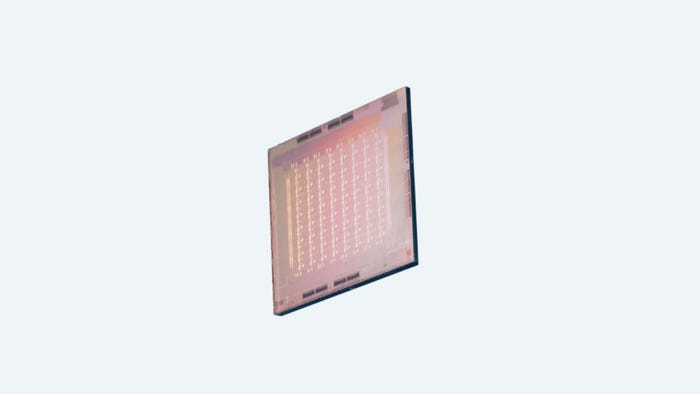

Built by HPE with 6,144 Nvidia A100 GPUs and 1,536 AMD Epyc CPUs

June 2, 2021


The National Energy Research Scientific Computing Center at Lawrence Berkeley National Laboratory has switched on the first phase of the world's fastest AI supercomputer.

'Perlmutter' is capable of nearly four exaflops of AI performance, making it the most powerful system in the world at 16- and 32-bit mixed-precision math.

The first set of official jobs on the new supercomputer was released by Nobel Prize-winning astrophysicist Saul Perlmutter, after whom the system is named.

“This is a very exciting time to be combining the power of supercomputer facilities with science, and that is partly because science has developed the ability to collect very large amounts of data and bring them all to bear at one time,” Dr. Perlmutter said.

“This new supercomputer is exactly what we need to handle these datasets. As a result, we are expecting to find new discoveries in cosmology, microbiology, genetics, climate change, material sciences, and pretty much any other field you can think of.”

Turning to AI to unlock the secrets of the universe

An HPE Cray EX supercomputer, Perlmutter is being built in two phases. Phase 1 features 1,536 GPU-accelerated nodes, each including four A100 Tensor Core GPUs and one 3rd Gen AMD Epyc CPU, all connected by Nvidia’s NVLink. The machine is complemented by a 35PB all-flash Lustre-based storage system.
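The node counts above account for the headline GPU total, and a rough calculation suggests where the "nearly four exaflops" figure may come from. The sketch below is illustrative only: the per-GPU peak of 624 teraflops is Nvidia's published A100 figure for FP16 Tensor Core math with structured sparsity, an assumption brought in here rather than a number stated in this article, and the function names are hypothetical.

```python
# Figures taken from the article.
GPU_NODES = 1_536
GPUS_PER_NODE = 4

# Assumption (not from the article): Nvidia's published A100 peak for
# FP16 Tensor Core math with structured sparsity, in teraflops.
A100_PEAK_TFLOPS_FP16_SPARSE = 624


def total_gpus(nodes: int, gpus_per_node: int) -> int:
    """Total GPU count across all accelerated nodes."""
    return nodes * gpus_per_node


def peak_exaflops(gpu_count: int, tflops_per_gpu: float) -> float:
    """Aggregate theoretical peak; 1 exaflop = 1,000,000 teraflops."""
    return gpu_count * tflops_per_gpu / 1_000_000


if __name__ == "__main__":
    gpus = total_gpus(GPU_NODES, GPUS_PER_NODE)
    print(f"GPUs in Phase 1: {gpus:,}")
    print(f"Theoretical peak: {peak_exaflops(gpus, A100_PEAK_TFLOPS_FP16_SPARSE):.2f} exaflops")
```

This is a theoretical peak under the stated assumption, not a measured benchmark result, and sustained performance on real workloads would be lower.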

Later this year, Phase 2 will add another 3,072 CPU-only nodes, each with two AMD Epyc processors and 512GB of memory.

“Perlmutter is a world-class supercomputer with AI capabilities that enable the scientific community to push the boundaries of supercomputing research as we enter the exascale AI era,” said Ian Buck, VP and GM of accelerated computing at Nvidia.

“Nvidia GPU-accelerated computing provides incredible performance and flexibility to the wide range of HPC and AI workloads Perlmutter will tackle.”

The first workloads to be run on the supercomputer include a project hoping to discover how atomic interactions work, which could lead to better batteries and biofuels.

Another project will help assemble the largest 3D map of the visible universe to date, crunching data from the Dark Energy Spectroscopic Instrument – which uses 5,000 fiber-optic "eyes" to capture as many as 5,000 galaxies in 20 minutes.

To work out where to point the instrument, researchers need to rapidly analyze dozens of exposures – which is where Perlmutter and its GPUs come in.

“The Perlmutter supercomputer will help inspire the next generation of scientists and innovators, allowing the US and DOE to remain a leader in using scientific computation to answer our greatest questions,” David Turk, DOE deputy secretary, said.

“As we continue to enhance and deploy computing platforms like this, our national labs will only be better positioned to develop solutions to today’s toughest problems, from climate change to cybersecurity.”

Later this year, Italian inter-university consortium CINECA will launch the Leonardo supercomputer with just under 14,000 Nvidia A100 GPUs and around 3,500 Intel Sapphire Rapids CPUs. When it comes online at 10 exaflops of AI performance, it is expected to overtake Perlmutter as the world's fastest artificial intelligence supercomputer.
