The whole AI world is desperately dependent on one fab

The pandemic year 2020 has had seismic effects on the global semiconductor market.

On one hand, enforced homeworking and reliance on digital entertainment in the absence of other options have kicked off a massive PC replacement cycle, while the demand for cloud services has driven a surge in growth for server silicon in the hyperscale sector.

On the other hand, the abrupt near-closure of the automotive industry in Q2 2020 and its equally sudden reopening a year later have created a so-called bullwhip effect in the supply chain for the older process nodes used for microcontrollers and dedicated ASICs. With little demand for these products, major fabs reallocated their capacity to serve the demand that did exist, from the TMT sector – which in any case consisted of the latest and greatest models and hence carried the highest margins. As a result, once automotive demand returned, the capacity was committed elsewhere, and car factories have stood idle awaiting chips.

The effort to increase volumes in certain industries has stressed the supply of inputs critical for the whole sector. Intel management noted in a recent analyst call that although the company was able to produce as much silicon as it could sell, the packaging step in the process was proving a problem. Meanwhile, the aftershocks of the US-China trade war continue to destabilize the system: unexpectedly, Mediatek is likely to be the biggest mobile SoC vendor this year, as its OEM partners push into the space left by the collapse in Huawei volumes.

AI implementers may find all this to be old news. GPUs, critical to most AI model training and much inference, have been in short supply for some time, with the combination of a cryptocurrency boom driving demand from miners, a global wave of investment in AI infrastructure from enterprises and hyperscalers, and a reliably vigorous demand from gamers (especially during the pandemic) converging on the available capacity.

Market leader NVIDIA, for example, has introduced a line of non-graphical GPUs, essentially pure matrix math accelerators, in an effort to create greater differentiation between its gaming and workstation markets on one hand, and its data center markets on the other. However, the main way in which this helps is by enabling price discrimination: NVIDIA can let the effectively unlimited budgets of the hyperscalers and miners rip, capturing the price rises in its margins, without further alienating gamers.

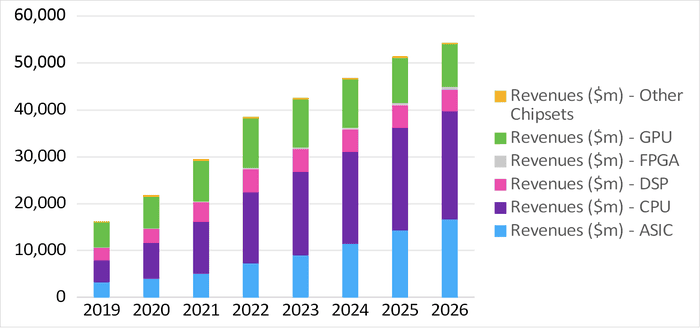

In its AI Chipsets for the Edge report and forecast, Omdia projects that demand for GPUs in this segment will peak soon, as more power-efficient ASIC designs from the mobile-first sector take over more workloads.

© Omdia 2021

This will only expose the real problem, though: the whole AI world – even more than the TMT sector more broadly – is desperately dependent on one fab, TSMC.

NVIDIA’s GPUs are all fabbed by TSMC. If we look beyond the GP-GPU ecosystem, though, most of the AI ASICs being built into ARM-based SoCs by the mobile-first vendors are also fabbed there. Apple’s M1 and A14 parts, with their 16-core Neural Engine ASIC block, are an obvious case in point, but so are Qualcomm’s Snapdragons with their Hexagon DSPs, and Mediatek’s Dimensity SoCs with their “APU” ASICs.

Many of the startups in AI silicon are also reliant on TSMC; Graphcore is one example, and Habana Labs is another, despite its acquisition by Intel. There are exceptions – Blaize uses Samsung LSI’s 14nm process, while Groq uses both fabrication and co-design from Marvell – but the question is one of scale.

Intel’s new “IDM 2.0” strategy may help. This aims both to invest heavily in fabrication, and to make contract fabrication for custom silicon a major line of business. As AI innovation has moved into the silicon, custom capacity has become a key issue.

About the Author(s)

Alexander is a Senior Analyst for Enterprise AI and member of the artificial intelligence analyst team. He covers the emerging enterprise applications of AI and machine learning, with a special focus on business models and AI as an IT service, as well as on the core technology of AI.

Since joining Omdia in 2016, Alexander has covered technologies for small and medium enterprises, with a specific focus on mobile and 5G for business. In previous roles, he developed the Enterprise 5G Innovations Tracker to monitor the emerging enterprise 5G and CBRS ecosystem.
