We take a look at MLOps and some of the methods used to maintain model accuracy over time.

February 22, 2021

Organizational structure and model management are the two siblings that together form MLOps. MLOps (machine learning operations) aims to provide and manage AI services that meet the enterprise-level stability requirements of business-critical environments. Such services are based on AI and analytics models. These models are the result of data scientists' work, and they are critical, valuable corporate assets. Thus, organizations have to govern how they are created and used.

Model management implements this governance by enforcing how models are stored, overseeing their deployment, and measuring their performance after deployment.

In an enterprise context, it is impossible to create a model, deploy it to production, forget about it, and let it run for years. The risk is too high that the model degrades over time without anybody noticing.

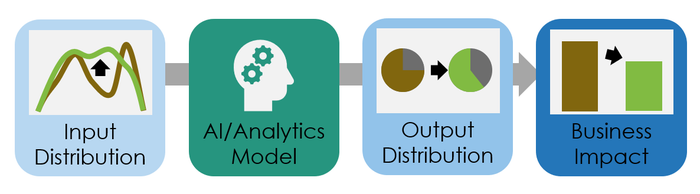

Automated model scoring and KPI monitoring address this challenge by looking for changes ("drift") in the input data and in the derived predictions or classifications (Figure 1). Does the input data distribution change or, more concretely, do its average or its lower and upper values change? Does the output distribution change over time, or does the business impact change, e.g., through a drop in the conversion rate?
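To make these questions concrete, here is a minimal sketch of input-drift checks, assuming a single numeric feature and a baseline sample kept from training time. The function names are hypothetical. The Population Stability Index (PSI) is one common drift score, used here for illustration rather than as the method the article prescribes, and the 0.2 alert level is a frequently cited rule of thumb, not a universal threshold.

```python
import math
from statistics import mean


def summary_drift(baseline: list[float], current: list[float]) -> dict[str, float]:
    """Compare simple summary statistics of one numeric feature."""
    lo, hi = min(baseline), max(baseline)
    return {
        "mean_shift": mean(current) - mean(baseline),
        # how many production values fall outside the range seen during training
        "below_min": sum(1 for x in current if x < lo),
        "above_max": sum(1 for x in current if x > hi),
    }


def psi(baseline: list[float], current: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index over equal-width bins of the baseline range."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for constant data

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for x in values:
            idx = int((x - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values into edge bins
        # eps keeps empty bins from producing log(0)
        return [max(c / len(values), eps) for c in counts]

    b, c = shares(baseline), shares(current)
    return sum((ci - bi) * math.log(ci / bi) for bi, ci in zip(b, c))


baseline = [float(x) for x in range(100)]  # feature values seen during training
current = [x + 30 for x in baseline]       # production values drifted upward
print(summary_drift(baseline, current))
print(psi(baseline, baseline), psi(baseline, current) > 0.2)
```

The summary statistics answer the "average, lower and upper values" question directly. The PSI condenses the whole distribution shift into one number that a monitoring job could compare against a threshold.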

When monitoring detects that a KPI drops below a threshold, it raises an alarm for the data scientists, who then have to retrain the AI model. Retraining can take various forms. The simplest option is to train the same neural network architecture with newer data. The most comprehensive approach is to repeat the complete model development process, including feature development and hyperparameter optimization.
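The alarm step and the choice between the two retraining options can be sketched as follows. The KPI names, thresholds, and the `severe_gap` rule for picking a retraining option are hypothetical assumptions for illustration; in practice, data scientists make that decision case by case.

```python
from dataclasses import dataclass


@dataclass
class Alarm:
    kpi: str
    value: float
    threshold: float


def check_kpis(kpis: dict[str, float], thresholds: dict[str, float]) -> list[Alarm]:
    """Raise an alarm for every monitored KPI that dropped below its threshold."""
    return [
        Alarm(name, value, thresholds[name])
        for name, value in kpis.items()
        if name in thresholds and value < thresholds[name]
    ]


def plan_retraining(alarm: Alarm, severe_gap: float = 0.10) -> str:
    """Hypothetical policy: choose a retraining option from the size of the KPI drop."""
    gap = alarm.threshold - alarm.value
    if gap > severe_gap:
        # large decay: repeat the full development process
        # (feature development, hyperparameter optimization)
        return "full_redevelopment"
    # small decay: retrain the same architecture with newer data
    return "retrain_with_newer_data"


alarms = check_kpis(
    {"accuracy": 0.81, "conversion_rate": 0.05},
    {"accuracy": 0.85, "conversion_rate": 0.03},
)
for alarm in alarms:
    print(alarm.kpi, plan_retraining(alarm))
```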

Figure 1: Analytical model, neural networks, and drift

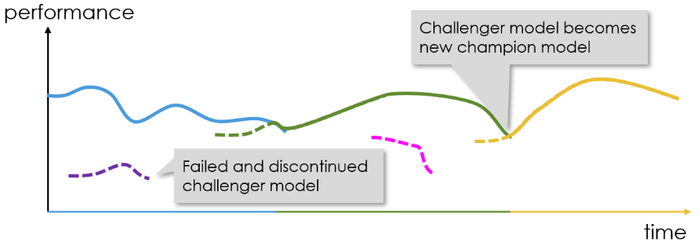

Alarming followed by retraining is a reactive approach: only a detectable decay of the model triggers retraining, i.e., building a new model that again reaches the desired quality level. In contrast, the champion-challenger pattern is a proactive approach (Figure 2).

The model in production is called the champion model. While the champion (still) meets the quality requirements, data scientists are already working on a challenger model, which is meant to become the next, better model. Data scientists can use newer data or try more sophisticated optimizations, and then compare the performance of the champion and challenger models. When the challenger outperforms the champion, the challenger becomes the new champion. If the challenger does not surpass the champion, the data scientists discard it and start working on the next one. In general, the champion-challenger pattern is similar to continuous A/B testing.
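A minimal sketch of the promotion decision, assuming both models are scored with accuracy on the same holdout data. The toy threshold "models", the `min_gain` margin, and all names are hypothetical; a real setup would use the organization's own KPIs and evaluation data.

```python
from typing import Callable, Sequence

Model = Callable[[float], int]  # hypothetical: a model maps one feature value to a label


def accuracy(model: Model, xs: Sequence[float], ys: Sequence[int]) -> float:
    return sum(model(x) == y for x, y in zip(xs, ys)) / len(ys)


def champion_challenger(
    champion: Model,
    challenger: Model,
    xs: Sequence[float],
    ys: Sequence[int],
    min_gain: float = 0.0,
) -> tuple[Model, float, float]:
    """Score both models on the same data; promote the challenger only if it is better."""
    champ_score = accuracy(champion, xs, ys)
    chall_score = accuracy(challenger, xs, ys)
    if chall_score > champ_score + min_gain:
        return challenger, champ_score, chall_score  # challenger becomes the new champion
    return champion, champ_score, chall_score        # challenger is discarded


def champion(x: float) -> int:    # current production model
    return int(x > 0.5)


def challenger(x: float) -> int:  # candidate, e.g. trained on newer data
    return int(x > 0.3)


xs, ys = [0.1, 0.35, 0.4, 0.6, 0.9], [0, 1, 1, 1, 1]
winner, champ_score, chall_score = champion_challenger(champion, challenger, xs, ys)
print(winner is challenger, champ_score, chall_score)
```

A positive `min_gain` keeps a challenger with only a marginal, possibly random, advantage from replacing a proven champion.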

Figure 2: Champion-challenger approach

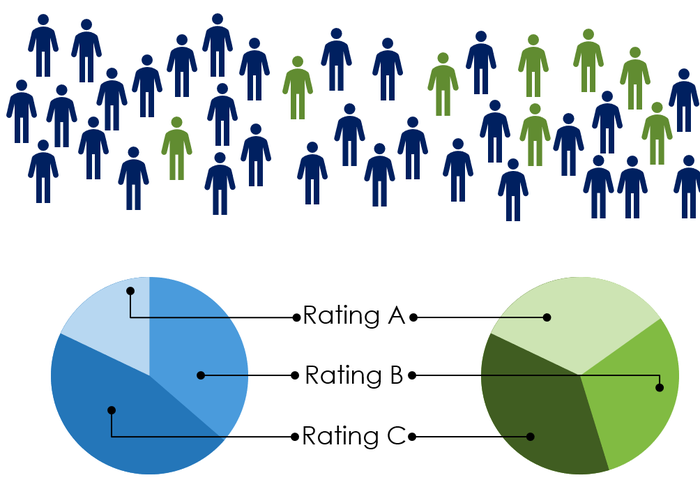

Monitoring infrastructures that measure model KPIs have another use case, which has recently received more attention: ensuring that models are ethical and fair. Models that discriminate against minorities or specific groups can be unethical or even unlawful. Proving that a model does not discriminate can be a challenge. Even when a model has no male/female input variable, it can still discriminate based on gender. For example, a model that increases clothing prices if there is red lipstick in the shopping basket tends to affect more women than men. Such model behavior is challenging to identify. However, it becomes visible when monitoring and comparing the model outputs for a majority group with the outputs for a specific group, as illustrated in Figure 3.

Figure 3: Checking for differences in model outputs between subgroups of the training and production populations. The majority (or control) group is blue, the minority group green. In this example, the majority group receives many more A ratings.
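The group comparison shown in Figure 3 can be sketched as follows, assuming the model outputs letter ratings and that "A" is the favorable outcome. The sample ratings are made up for illustration. The 0.8 review cutoff mirrors a heuristic sometimes called the four-fifths rule; treat it as an illustrative assumption, not a legal test.

```python
from collections import Counter


def rating_shares(ratings: list[str]) -> dict[str, float]:
    """Fraction of each rating (e.g. "A", "B", "C") in one group's model outputs."""
    counts = Counter(ratings)
    return {rating: n / len(ratings) for rating, n in counts.items()}


def compare_groups(majority: list[str], minority: list[str], favorable: str = "A") -> dict[str, float]:
    """Compare how often each group receives the favorable rating."""
    maj = rating_shares(majority).get(favorable, 0.0)
    mino = rating_shares(minority).get(favorable, 0.0)
    return {
        "majority_share": maj,
        "minority_share": mino,
        # a ratio well below 1 means the minority group gets the favorable outcome less often
        "ratio": mino / maj if maj else float("nan"),
    }


majority = ["A"] * 6 + ["B"] * 3 + ["C"]      # control group outputs
minority = ["A"] * 2 + ["B"] * 5 + ["C"] * 3  # specific group outputs
result = compare_groups(majority, minority)
print(result, "review needed:", result["ratio"] < 0.8)
```

Note that the check needs only the model outputs and the group membership, not a gender variable among the model inputs, which is exactly why it can reveal indirect discrimination such as the lipstick example.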

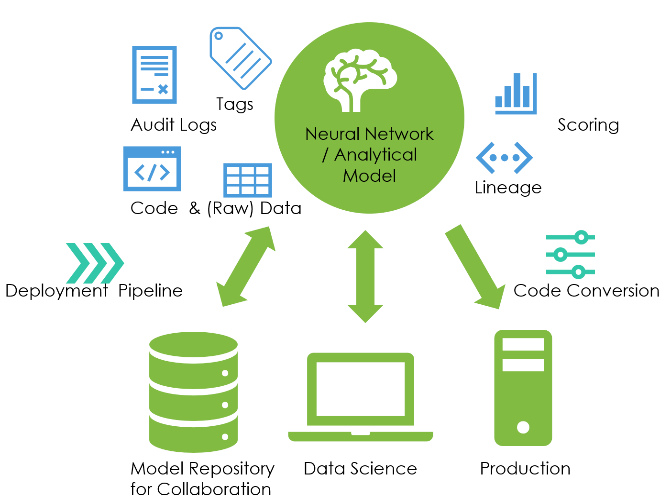

Monitoring model behavior ensures that models meet the required quality level. Beyond that, model management has a second aspect: ensuring that AI models progress smoothly through corporate processes. Three technical features are essential for this (Figure 4):

- Data scientists must store models, as valuable corporate assets, in a central repository. A repository guarantees that models do not get lost or deleted by mistake, and it enables collaboration between data scientists.
- A tool-based, managed deployment process or pipeline enforces corporate processes. These processes usually define who signs off on which code fragments or models before they are used in production. A process tool ensures that the processes are respected and provides auditable information about important events during the process.
- Code conversion functionality helps data scientists and software developers when the two groups use different technologies. While it is possible to turn a Python script into a Java code fragment manually, doing so is error-prone. Code conversion functionality automates this manual step.
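The managed deployment process from the list above can be sketched as a gate that blocks deployment until every required role has signed off, while recording each event in an audit log. The class, the role names, and the model identifier are hypothetical; real pipelines implement this in their CI/CD or MLOps tooling.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class DeploymentRequest:
    """Hypothetical pipeline step: a model may only be deployed after all required sign-offs."""
    model_id: str
    target_env: str
    required_signoffs: set[str]                             # roles that must approve
    signoffs: dict[str, str] = field(default_factory=dict)  # role -> approver
    audit_log: list[str] = field(default_factory=list)

    def _log(self, event: str) -> None:
        # timestamped, append-only record of process events for later audits
        self.audit_log.append(f"{datetime.now(timezone.utc).isoformat()} {event}")

    def sign_off(self, role: str, approver: str) -> None:
        if role not in self.required_signoffs:
            raise ValueError(f"role {role!r} is not part of this process")
        self.signoffs[role] = approver
        self._log(f"{approver} signed off as {role}")

    def deploy(self) -> bool:
        missing = self.required_signoffs - set(self.signoffs)
        if missing:
            self._log(f"deployment of {self.model_id} blocked, missing: {sorted(missing)}")
            return False
        self._log(f"{self.model_id} deployed to {self.target_env}")
        return True


request = DeploymentRequest("churn-model-v3", "production", {"data_science_lead", "risk_officer"})
request.sign_off("data_science_lead", "alice")
print(request.deploy())  # blocked: risk_officer has not signed off yet
request.sign_off("risk_officer", "bob")
print(request.deploy())
print(*request.audit_log, sep="\n")
```

Even blocked attempts are logged, so the audit trail shows not only who approved a deployment but also when someone tried to bypass the process.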

The repository obviously stores the parameters and hyperparameters of the AI model. Furthermore, it stores additional information that is vital for the deployment process, for searching for models, or for audits. Such additional information can include:

- The data preparation and model training code, accompanied by the actual data or a reference to it. Only this combination enables data scientists to recreate precisely the same model at a later time.
- Data lineage, documenting how the data got from the source to the point where it became training data. Does the financial data come from the enterprise data warehouse, or from an obscure Excel file that a trainee in the housekeeping department created and five teams afterward copied and modified?
- Scores measuring the model performance. They document the rigor of the quality assurance activities and prove the adequacy of the model.
- Audit logs documenting crucial activities, such as who changed the training or data preparation code, or who deployed the model to which environment.
- Tags storing additional information, e.g., the data scientists who worked on the project, the exact purpose of a model, or why a data scientist chose a particular hyperparameter value.
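A minimal sketch of a repository entry holding the metadata listed above, plus a tiny in-memory repository with immutable versions and tag search. All class names, field names, references, and example values are hypothetical assumptions. The SHA-256 fingerprint is one possible way to check later that a rebuild really uses the same training data.

```python
import hashlib
import json
from dataclasses import dataclass, field


def fingerprint(rows: list[dict]) -> str:
    """SHA-256 of the training data, so a later rebuild can verify it uses identical data."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass
class ModelRecord:
    name: str
    version: int
    hyperparameters: dict[str, float]
    code_ref: str         # hypothetical reference to the data preparation and training code
    data_ref: str         # hypothetical reference to the training data snapshot
    data_sha256: str      # fingerprint of that snapshot
    lineage: list[str]    # source -> ... -> training data
    scores: dict[str, float]
    tags: dict[str, str] = field(default_factory=dict)
    audit_log: list[str] = field(default_factory=list)


class ModelRepository:
    """Minimal in-memory stand-in for a central model repository."""

    def __init__(self) -> None:
        self._records: dict[tuple[str, int], ModelRecord] = {}

    def register(self, record: ModelRecord, user: str) -> None:
        key = (record.name, record.version)
        if key in self._records:
            # never overwrite: models must not get lost or changed by mistake
            raise ValueError(f"{key} already exists; register a new version instead")
        record.audit_log.append(f"registered by {user}")
        self._records[key] = record

    def search(self, **tags: str) -> list[ModelRecord]:
        return [
            r for r in self._records.values()
            if all(r.tags.get(k) == v for k, v in tags.items())
        ]


rows = [{"age": 42, "churned": 0}, {"age": 23, "churned": 1}]  # toy training data
repo = ModelRepository()
repo.register(
    ModelRecord(
        name="churn-model",
        version=1,
        hyperparameters={"learning_rate": 0.01},
        code_ref="git:<commit>",
        data_ref="warehouse:churn_extract",
        data_sha256=fingerprint(rows),
        lineage=["CRM system", "enterprise data warehouse", "training extract"],
        scores={"auc": 0.87},
        tags={"purpose": "churn prediction", "owner": "alice"},
    ),
    user="alice",
)
print([r.name for r in repo.search(owner="alice")])
```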

Figure 4: Model management in a corporate context

Storing AI models in a repository together with additional (meta-)data, enforcing a rigid deployment process, and monitoring the performance of models in production after deployment are the three pillars of model management.

Model management might be only a sandwich layer between trendy, hyped data science work and the glamorous management level. Still, model management is the glue needed for a sustainable AI initiative. This glue allows corporations to benefit from AI initiatives for years, not just for a few weeks or months. Finally, model management gives future data science projects a kick-start with all the information and artifacts in the model repository; reuse cannot get any easier.

Klaus Haller is a Senior IT Project Manager with in-depth business analysis, solution architecture, and consulting know-how. His experience covers Data Management, Analytics & AI, Information Security and Compliance, and Test Management. He enjoys applying his analytical skills and technical creativity to deliver solutions for complex projects with high levels of uncertainty. Typically, he manages projects consisting of 5-10 engineers.

Since 2005, Klaus has worked in IT consulting and for IT service providers, often (but not exclusively) in the financial industry in Switzerland.

