October 28, 2022

We are surrounded by risks every day, in every organization, and with every activity we undertake. Risk management is a discipline devoted to identifying, quantifying, and managing risks so that we can live safe, secure, and happy lives. Every time we fly in a plane, drive a car, swallow a pill, or eat a salad, we are relying on the tireless work of risk managers to make that activity safe while keeping it cost-effective.

Risk management is about understanding events that might happen, however unlikely or remote. For example, when driving a car, there is the risk that a tire might blow out. The probability is low, but the impact if it happens is quite high. Conversely, the probability that a headlight will stop working is relatively high, but the impact is only moderate. That impact is not zero, however, which is why cars have two headlights.

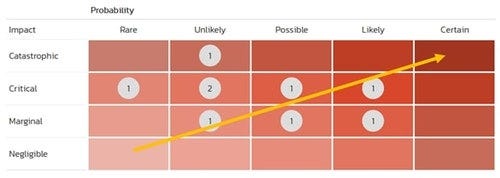

When assessing risks, we must consider both the impact (severity) and the probability (likelihood) of each event. Their combination is called risk exposure, and it determines how much effort should go into mitigating the risk, either by reducing its severity or by reducing its probability.
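To make the idea of risk exposure concrete, here is a minimal sketch that scores each risk on hypothetical 1-5 ordinal scales and multiplies probability by impact, one common convention for combining the two. The `Risk` class, `rank_by_exposure` function, and all scores are illustrative assumptions, not measured data.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """A risk scored on hypothetical 1-5 ordinal scales."""
    name: str
    probability: int  # likelihood: 1 = rare ... 5 = almost certain
    impact: int       # severity: 1 = negligible ... 5 = catastrophic

    @property
    def exposure(self) -> int:
        # One common convention: exposure = probability x impact.
        return self.probability * self.impact


def rank_by_exposure(risks: list[Risk]) -> list[Risk]:
    """Sort risks from highest to lowest exposure."""
    return sorted(risks, key=lambda r: r.exposure, reverse=True)


# Illustrative scores only, loosely based on the car example above.
risks = [
    Risk("tire blowout", probability=1, impact=5),
    Risk("headlight failure", probability=4, impact=2),
]

for risk in rank_by_exposure(risks):
    print(f"{risk.name}: exposure {risk.exposure}")
```

With these particular scores the headlight failure ranks above the tire blowout. A plain product can understate rare but severe events, which is one reason some teams also review high-severity items regardless of their combined score.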

Identifying risks overlooked by humans

Traditionally, the first step in risk management is to identify all the risks and then quantify their probability and severity. This is done by humans brainstorming possible events that can happen and using their previous industry knowledge to make an educated guess about the probability and severity. One of the major pitfalls in this process is that humans (especially in teams) are affected by biases such as confirmation bias, hindsight bias, and availability bias. Put differently, we tend to overestimate previously known risks and either ignore or downplay obscure edge cases that might be truly serious.

Using Artificial Intelligence (AI) in the field of risk management offers the potential to address biases in several ways.

Firstly, given large datasets of real-world evidence, AI applications and Machine Learning (ML) models can uncover hitherto unknown risks, so-called ‘zero-day’ risks. For example, an AI system monitoring seemingly unrelated data feeds for weather, hospital stays, and traffic could identify a risk in a new automotive system (e.g., a new autopilot mode) that would otherwise remain hidden.
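As a deliberately simple stand-in for the kind of monitoring described above, the sketch below flags unusual points in a single data feed by their z-score against a baseline window. Real systems would correlate many feeds with far more capable models; the `flag_anomalies` function, the threshold of 3, and the daily report counts are all hypothetical.

```python
import statistics


def flag_anomalies(series, baseline_size, threshold=3.0):
    """Return (index, z_score) for points after the baseline window whose
    z-score against the baseline exceeds `threshold` in absolute value."""
    baseline = series[:baseline_size]
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)  # sample std dev; needs >= 2 points
    if stdev == 0:
        raise ValueError("baseline has no variation; z-scores are undefined")
    flagged = []
    for i, value in enumerate(series[baseline_size:], start=baseline_size):
        z = (value - mean) / stdev
        if abs(z) > threshold:
            flagged.append((i, round(z, 2)))
    return flagged


# Hypothetical daily counts of incident reports after a new autopilot mode ships.
daily_reports = [4, 5, 3, 6, 5, 4, 5, 4, 5, 4, 12]
print(flag_anomalies(daily_reports, baseline_size=8))
```

Only the last day stands out here; in practice a flagged point is a prompt for human investigation, not proof of a new risk.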

In addition to identifying hitherto unknown risks from large, uncorrelated datasets, an AI system could take previously identified risks and quantify their impact and probability systematically, using external environmental data rather than human intuition. This would remove much of the guesswork and variability from classic risk management models.
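One way to replace an intuitive probability with a data-derived one is to estimate it from observed event counts and attach an uncertainty interval. The sketch below uses the Wilson score interval (a standard method for binomial proportions) and then checks whether a human's estimate falls inside it. The function names and the tire-blowout figures are hypothetical.

```python
import math


def wilson_interval(events: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for an event probability (z=1.96 gives ~95%)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = events / trials
    denom = 1 + z**2 / trials
    center = (p + z**2 / (2 * trials)) / denom
    half = (z / denom) * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2))
    return max(0.0, center - half), min(1.0, center + half)


def human_estimate_is_plausible(human_p: float, events: int, trials: int) -> bool:
    """True if a human-assigned probability lies inside the data-derived interval."""
    low, high = wilson_interval(events, trials)
    return low <= human_p <= high


# Hypothetical figures: 12 tire blowouts recorded over 100,000 trips,
# versus an expert's gut estimate of 1 in 1,000,000 per trip.
low, high = wilson_interval(12, 100_000)
print(f"data-derived 95% interval: {low:.2e} to {high:.2e}")
print("expert estimate plausible:", human_estimate_is_plausible(1e-6, 12, 100_000))
```

The interval, rather than a single point estimate, matters here: with few observed events it stays wide, which signals that the data alone cannot yet settle the question.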

Understanding risks in our models

Conversely, using AI and ML for safety-critical systems such as automotive or aerospace autopilots can introduce new risks into the system. When we build an AI/ML model, unlike a traditional algorithmic computer system, we are not defining the requirements and the coding steps to fulfill those requirements. Instead, we are providing a dataset, known rules, and boundary conditions and letting the model infer its own algorithms from the provided data.

This allows AI/ML systems to create new methods that were not previously known to humans and to find unique correlations and breakthroughs. However, these new insights are unproven and may be only as good as the limited dataset they were based on. The risk is that we start using these models in production systems, and they behave in unexpected and unpredictable ways.

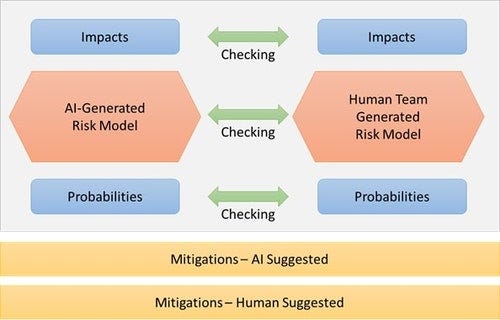

This is a classic example of a problem that needs independent risk analysis and risk management, either from a separate AI/ML model that can ‘check’ the primary one or from human experts who can validate the possible risks that could occur in the AI system.
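A minimal sketch of the "second model checks the first" idea: run a primary model and an independent checker on the same inputs and escalate any case where they disagree beyond a tolerance. Both toy models, the `cross_check` function, and the tolerance are hypothetical; the primary is written to fail silently outside its training range to show what the check catches.

```python
from typing import Callable, Sequence

# A "model" here is any function from a feature vector to a numeric output.
Model = Callable[[Sequence[float]], float]


def cross_check(primary: Model, checker: Model,
                inputs: list[tuple[float, ...]], tolerance: float):
    """Run both models on every input and collect the cases where they
    disagree by more than `tolerance`, for escalation to human review."""
    escalations = []
    for x in inputs:
        a, b = primary(x), checker(x)
        if abs(a - b) > tolerance:
            escalations.append((x, a, b))
    return escalations


# Hypothetical toy models: the "learned" primary silently breaks down
# above the range it was trained on; the checker is a simple reference rule.
def primary(x):
    return 2.0 * x[0] if x[0] < 10 else 0.0


def checker(x):
    return 2.0 * x[0]


for case in cross_check(primary, checker, [(1.0,), (5.0,), (12.0,)], tolerance=0.5):
    print("escalate:", case)
```

The checker does not need to be better than the primary; it only needs to fail differently, so that disagreements surface for a human expert to resolve.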

Harmony between humans and machines

Introducing AI and ML into complex safety- or mission-critical systems therefore brings both benefits and challenges from a risk management perspective. A sensible framework for adopting AI/ML techniques recognizes these factors and attempts to maximize the benefits whilst mitigating the challenges.

Humans can check the machines

As we begin to implement AI/ML models, making sure that we have a clear grasp of the business requirements, use cases, and boundary conditions (or constraints) becomes critical.

Defining the limits of the datasets employed and the specific use cases the model was trained on helps ensure that the model is used only to support activities that its original dataset actually represents.
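One simple way to enforce such limits at run time is to record the range of each input feature seen during training and flag any request that falls outside it. This is a minimal sketch under that assumption; the feature names, values, and the `fit_envelope`/`out_of_envelope` helpers are hypothetical.

```python
def fit_envelope(training_rows: list[dict[str, float]]) -> dict[str, tuple[float, float]]:
    """Record the minimum and maximum value of each feature seen in training."""
    envelope: dict[str, tuple[float, float]] = {}
    for row in training_rows:
        for feature, value in row.items():
            lo, hi = envelope.get(feature, (value, value))
            envelope[feature] = (min(lo, value), max(hi, value))
    return envelope


def out_of_envelope(envelope: dict[str, tuple[float, float]], row: dict[str, float]) -> list[str]:
    """Return the features that are missing from `row` or fall outside the training range."""
    problems = []
    for feature, (lo, hi) in envelope.items():
        value = row.get(feature)
        if value is None or not (lo <= value <= hi):
            problems.append(feature)
    return problems


# Hypothetical training data for a driver-assistance model.
training = [
    {"speed_kmh": 40, "temp_c": 5},
    {"speed_kmh": 110, "temp_c": 30},
    {"speed_kmh": 80, "temp_c": 18},
]
envelope = fit_envelope(training)

request = {"speed_kmh": 90, "temp_c": -15}  # colder than anything seen in training
problems = out_of_envelope(envelope, request)
if problems:
    print("outside the validated envelope:", problems)
```

Per-feature ranges are a coarse check: an input can sit inside every individual range and still be a combination the model never saw, so this complements rather than replaces human review.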

In addition, the importance of having humans independently check the results predicted by AI/ML models cannot be overstated. This independent verification and validation (IV&V) allows humans to ensure the AI models are being used appropriately, without introducing unnecessary risk or unintended vulnerabilities into the system.

For example, when deploying an autopilot to an automotive or aviation platform, IV&V verifies, in a simulator or a real-world test environment, that the car or plane behaves as expected.

Machines can check the humans

Conversely, AI models can be used to check the risk management models and assessments made by humans to ensure they are not missing zero-day risks. This is a mirror image of the previous point: here the computer acts as the IV&V for human decision-making, and we take advantage of machine learning models to avoid human biases and find flaws in our existing risk models.

Harmony

Instead of seeing AI as an alternative to classic risk management methods, we should embrace the inherent harmony between computers and humans checking each other’s assumptions and recommendations.

Using a combination of manual risk assessment techniques and AI tools, we can reduce the overall risk in systems while at the same time allowing unprecedented innovation and breakthroughs. Many historical accidents (Three Mile Island, Chernobyl, etc.) show that the human factor often presents the most risk.
