Apple Launches First Multimodal AI Model

MM1, Apple's latest AI model, could revolutionize Siri and iMessage by understanding images and text contextually


At a Glance

- Apple's MM1 AI model excels in processing images and text with state-of-the-art multimodal capabilities

Apple has unveiled MM1, a family of multimodal models capable of handling images and text.

The models range up to 30 billion parameters and are competitive with the initial versions of Google Gemini.

Among its multimodal abilities, MM1 can follow instructions and reason across images. The example below shows it deducing how much two beers cost based on the prices on the menu.

[Image: MM1 working out the cost of two beers from a menu]

Credit: Apple

MM1 boasts in-context learning, meaning the model can understand and respond to queries based on the context provided within the current conversation without needing explicit retraining or fine-tuning for each new type of query or task.

In-context learning could allow the model to describe images or answer questions about photo-based prompts, even when it has not seen that content before.
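As a rough illustration of in-context learning with images, the sketch below builds a few-shot prompt by interleaving demonstration image-text pairs ahead of a new query. The `Example` class, the `build_few_shot_prompt` helper, the segment format and the file names are all hypothetical; Apple has not published an API for MM1.

```python
from dataclasses import dataclass


@dataclass
class Example:
    image: str  # hypothetical image identifier, e.g. a file name
    text: str   # the text paired with that image in the demonstration


def build_few_shot_prompt(examples, query_image, question):
    """Interleave demonstration (image, text) pairs, then append the new query."""
    segments = []
    for ex in examples:
        segments.append({"type": "image", "value": ex.image})
        segments.append({"type": "text", "value": ex.text})
    # The final image and question are what the model is asked to complete.
    segments.append({"type": "image", "value": query_image})
    segments.append({"type": "text", "value": question})
    return segments


# Placeholder demonstrations: the model would infer the task from these pairs.
prompt = build_few_shot_prompt(
    [
        Example("menu_a.jpg", "Total for two coffees: 7"),
        Example("menu_b.jpg", "Total for two teas: 5"),
    ],
    "menu_c.jpg",
    "Total for two beers:",
)
```

No weights change here: the demonstrations live only in the prompt, which is what separates in-context learning from fine-tuning.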

MM1 also supports multi-image reasoning, which means the model can understand, interpret and draw conclusions from multiple images within the same query. This lets MM1 handle more complex and nuanced interactions with visual content.

With its multimodal understanding capabilities, Apple could use MM1 to improve its voice assistant, Siri, by enabling it to answer questions based on images, for instance. MM1 could also help understand the context of shared images and texts within iMessage, offering users more relevant suggestions for responses.

For now, Apple has not released MM1, nor said what the model will be used for. Details of the model were outlined in a paper published last week.

But MM1 is “just the beginning,” according to Brandon McKinzie, an Apple senior research engineer working on multimodal models. He said Apple is “already hard at work on the next generation of models.”

Inside MM1

Apple’s new large multimodal model has several underlying mechanics that improve its abilities.

Among them is its hybrid encoder, which processes both visual and textual data. This enables MM1 to understand and generate content that integrates both forms seamlessly.

Another key component of MM1 is its vision-language connector. This bridges the gap between the visual perception processed by the image encoder and the textual understanding handled by the language model.

Essentially, the vision-language connector brings together the model’s separate abilities for processing images and text, allowing the visual perception from images and the language understanding to work together.
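To make the connector idea concrete, here is a minimal sketch that assumes the simplest possible design: a single linear projection that maps image-patch features to the language model's embedding size, so visual tokens and text tokens can share one sequence. This is not Apple's connector; the dimensions, weights and function names are made up for illustration.

```python
import random


def make_projection(in_dim, out_dim, seed=0):
    """Create a random in_dim x out_dim weight matrix (stand-in for learned weights)."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(out_dim)] for _ in range(in_dim)]


def project(features, weights):
    """Map each image-patch vector (in_dim) to the text embedding size (out_dim)."""
    out_dim = len(weights[0])
    return [
        [sum(f[i] * weights[i][j] for i in range(len(f))) for j in range(out_dim)]
        for f in features
    ]


VISION_DIM, TEXT_DIM = 4, 6  # toy sizes, chosen only for readability

# Two image patches as produced by a hypothetical image encoder.
image_patches = [[1.0, 0.0, 0.5, -0.5], [0.2, 0.3, 0.0, 1.0]]
# Three text token embeddings as produced by a hypothetical language model.
text_tokens = [[0.0] * TEXT_DIM for _ in range(3)]

visual_tokens = project(image_patches, make_projection(VISION_DIM, TEXT_DIM))
# After projection, image and text tokens have the same width and can be concatenated.
sequence = visual_tokens + text_tokens
```

Once both modalities have the same width, the language model can attend over image and text tokens in a single sequence.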

MM1 scales efficiently thanks to its use of both traditional dense models and mixture-of-experts (MoE) variants. An MoE layer activates only a few expert sub-networks for each input, which lets the model increase capacity without a matching increase in computational demands during inference. In simple terms, MM1 can handle more while still being efficient.
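The efficiency argument is easier to see in code. The sketch below shows generic top-k expert routing, assuming four toy experts and a fixed set of router scores; it is not MM1's implementation, and every name and number in it is illustrative.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(x, router_logits, experts, top_k=2):
    """Run only the top_k highest-scoring experts and mix their outputs."""
    ranked = sorted(range(len(experts)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:top_k]
    # Renormalize the gate weights over the selected experts only.
    gates = softmax([router_logits[i] for i in chosen])
    output = [0.0] * len(x)
    for gate, idx in zip(gates, chosen):
        y = experts[idx](x)
        output = [o + gate * v for o, v in zip(output, y)]
    return output, chosen


calls = []  # records which experts actually ran


def make_expert(scale, name):
    """Toy expert that scales its input and logs that it was called."""
    def expert(x):
        calls.append(name)
        return [scale * v for v in x]
    return expert


experts = [make_expert(s, n) for n, s in enumerate([1.0, 2.0, 3.0, 4.0])]
out, chosen = moe_layer([1.0, -1.0], router_logits=[0.1, 2.0, -1.0, 2.0], experts=experts)
```

Four experts exist, but only two run for this input, so adding more experts grows capacity while per-input compute stays tied to `top_k`.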

After extensive studies of how different data types affect model performance, the research team identified the data-handling strategies that worked best. For example, they found that for large-scale multimodal pre-training, using a mix of image-caption, interleaved image-text, and text-only data was “crucial” for achieving state-of-the-art results.
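One simple way to mix the three data types is to draw each training example from a source chosen by weight. The sketch below assumes placeholder weights, not figures from Apple's paper, and `sample_sources` is a hypothetical helper.

```python
import random
from collections import Counter

# Placeholder mixing weights for illustration only.
MIX_WEIGHTS = {
    "image_caption": 0.4,
    "interleaved_image_text": 0.4,
    "text_only": 0.2,
}


def sample_sources(weights, n, seed=0):
    """Pick a data source for each of n training examples, in proportion to its weight."""
    rng = random.Random(seed)
    names = list(weights)
    return rng.choices(names, weights=[weights[k] for k in names], k=n)


batch_sources = sample_sources(MIX_WEIGHTS, n=1000)
counts = Counter(batch_sources)  # how many examples each source contributed
```

Changing the weights and re-measuring benchmark scores is the kind of comparison such a data study relies on.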

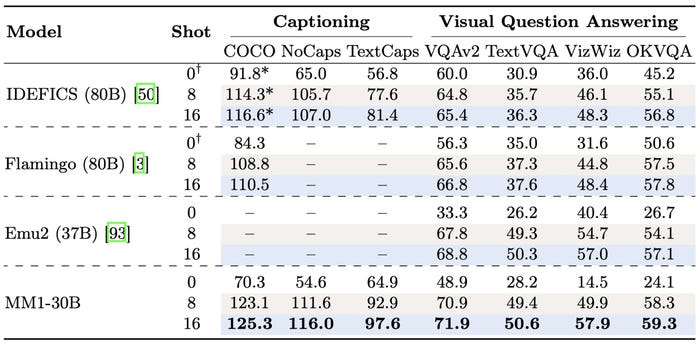

In terms of performance, the 30 billion parameter version of MM1 outperforms existing results for pre-trained models on multimodal benchmarks. MM1 beats models like Flamingo and IDEFICS that are over double in size.

Credit: Apple

Apple’s AI plans continue

MM1 marks the latest step in Apple’s quiet generative AI march.

Just last month, it emerged that Apple had ended its self-driving car plan, Project Titan, to focus on generative AI.

Unlike Microsoft and Google, Apple has been quietly working on AI projects. Reports emerged last summer that it was working on its own web application-based chatbot service called ‘Apple GPT.’

While nothing concrete on the so-called Apple GPT has surfaced, Apple did release MLX, an open-source toolkit to allow developers to train and run large language models on its hardware.
