What you need to know before scaling up your AI-first startup

There are a number of challenges directly and specifically related to developing AI capabilities and scaling a data science function in a business

March 17, 2021

Sponsored Content

It’s always exciting to be on the ground floor of a nascent technology like artificial intelligence (AI) and machine learning (ML), growing along with the industry.

For those of us building companies in the space, however, it does come with growing pains. There’s plenty of accessible conventional wisdom and advice on how to scale a business, but much of it isn’t applicable in an industry that has only really been around for the last decade.

When I talk to my fellow founders and colleagues in the space, no one is working off a defined playbook as they would in other sectors.

There are a number of challenges directly and specifically related to developing AI capabilities and scaling a data science function in a business. The impact and efficacy of data science on the business as it scales are directly tied to the following three pillars:

Product alignment and user value

Pragmatism and System Thinking

Data and Machine Learning infrastructure

Each of these pillars directly shapes the impact data science will have in the organization. For the founders of today who are just starting to build their companies, or even for those who, like me, have been doing it for a while, I wanted to share some learnings and advice based on my own experiences scaling an AI-first startup.

Product alignment and user value

In companies that use data science to improve services and products (as opposed to the pure research done in universities), it is critical to understand and frame problems around clients’ needs. Once this is done, the teams can explore potential solutions to these problems using different approaches and areas of expertise. One of my suggestions is to be explicit about your objectives, how you will measure success, what assumptions you have made and what the hypotheses are for each experiment or idea.
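One way to make that explicitness routine is to write a short, structured brief before any modelling starts. The sketch below is only an illustration: the `ExperimentBrief` class, its field names and the example values are all hypothetical, and it assumes a metric where higher is better.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExperimentBrief:
    """Hypothetical one-page brief filled in before an experiment starts."""

    user_problem: str      # the client need the work is framed around
    objective: str         # what the experiment is trying to achieve
    success_metric: str    # how success will be measured
    target: float          # value of the metric that counts as success
    hypothesis: str = ""   # what we expect to happen, and why
    assumptions: tuple = ()  # anything taken for granted

    def missing_fields(self):
        """Return the names of required text fields that were left empty."""
        required = ("user_problem", "objective", "success_metric", "hypothesis")
        return [name for name in required if not getattr(self, name).strip()]

    def is_success(self, observed: float) -> bool:
        """True when the observed metric meets the target (higher is better)."""
        return observed >= self.target


# Illustrative values only; none of these come from a real project.
brief = ExperimentBrief(
    user_problem="Analysts miss relevant articles in their daily feed",
    objective="Surface more relevant articles in the top 10 results",
    success_metric="share of top-10 results marked relevant by users",
    target=0.70,
    hypothesis="Re-ranking by topic match will raise relevance in the top 10",
    assumptions=("User relevance marks are a fair proxy for value",),
)
print(brief.missing_fields())   # an empty list means the brief is complete
print(brief.is_success(0.74))
```

A brief that cannot be filled in is often a sign that the problem has not yet been framed around the user.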

Clearly coupling data science initiatives and value to users is critical. One common mistake in data science is to work on a local optimization where a ‘model’ is improved over a long time, but the actual value for the user is not only implicit, but also marginal. In some scenarios, the actual impact might even be negative. By framing problems around user value, the teams might find simpler or more effective solutions (potentially without data science).

I believe that, in the majority of industrial cases, the main challenge in data science is not how to solve a problem, but knowing what problem to solve in order to maximize impact.

Pragmatism and System Thinking

Many people working in data science are driven by trying to improve on the current best approach to a given problem. This could be the ‘best model’ in production for a company or a state-of-the-art (SOTA) solution in academia. While this might be a good approach for academics and for some industrial problems, it is not the best approach for all organizations.

If you are a scaleup company with AI at your core, you might have several problems you are trying to solve at any given time. For a few of them, usually related to the differentiation or defensibility of the company, it makes complete sense to optimize the models as much as you possibly can, even if it will take months or years to do so. However, for many other data science initiatives, you should always consider the opportunity cost of spending time on a non-critical component after you have achieved a ‘good enough’ level of value for clients. In many cases, improvements that are not directly perceivable by the users might not be worth investing too much time on. This is not because data science would not be able to help, but rather because there might be other problems with higher return on investment (ROI). The main principle is to not let perfect be the enemy of the good.

Another common issue is defining exactly what constitutes the ‘best’ solution. Quality is just one part of the equation; other aspects such as efficiency, explainability, ease of deployment and monitoring, or consistency could be just as important depending on your problem space. Probably one of the best examples of this is Netflix, which learnt a lot from the winner of its famous recommendation challenge but never implemented it in production.

When using data science to solve a problem in industry, best practice is generally to start with the simplest possible solution that might solve the problem end to end. This is the quickest way to gather information about the problem and the solution. After this, there will be less uncertainty surrounding the problem and the suitability of the potential solution, and it will be clearer which aspects are in greatest need of improvement. The ‘simplest’ solution could be an off-the-shelf model or even a set of simple heuristics. The irony of this advice is that while almost every data scientist would agree this makes sense, many still opt for more complex initial solutions in practice.

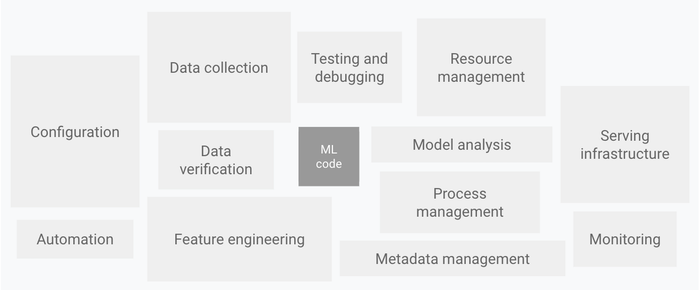

Using system thinking is pivotal because ML models tend to be a small cog in a much larger mechanism, as you can see in the above picture taken from the paper Hidden Technical Debt in Machine Learning Systems. Deployment of models is never the end and model monitoring is as important as model training. Moreover, I would encourage everyone to assume AI systems will make mistakes (because they will) and to create a system that can elegantly deal with, and hopefully learn from, those mistakes.
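As a rough illustration of designing for mistakes, the sketch below wraps a model so that low-confidence or failed predictions are routed to a human review queue, and corrections are stored for later retraining. Everything here is hypothetical: the `GuardedModel` name, the 0.8 threshold, and the assumption that the model returns a label together with a confidence score.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Prediction = Tuple[str, float]  # (label, confidence between 0 and 1)


@dataclass
class GuardedModel:
    """Hypothetical wrapper that assumes the underlying model will be wrong sometimes."""

    predict: Callable[[str], Prediction]
    threshold: float = 0.8  # assumed cut-off; tune it for your own problem
    review_queue: List[str] = field(default_factory=list)
    corrections: List[Tuple[str, str]] = field(default_factory=list)

    def classify(self, text: str) -> str:
        """Return the model's label, or defer to a human when it is unsure."""
        try:
            label, confidence = self.predict(text)
        except Exception:
            # A crashing model is treated like a prediction with no confidence.
            label, confidence = "unknown", 0.0
        if confidence < self.threshold:
            self.review_queue.append(text)
            return "needs_review"
        return label

    def record_correction(self, text: str, correct_label: str) -> None:
        """Store a human correction so it can feed the next training round."""
        self.corrections.append((text, correct_label))


def toy_model(text: str) -> Prediction:
    """Stand-in for a real model, used only to exercise the wrapper."""
    if "goal" in text:
        return ("sports", 0.95)
    return ("politics", 0.40)


guarded = GuardedModel(predict=toy_model)
print(guarded.classify("late goal wins the match"))
print(guarded.classify("an ambiguous headline"))
guarded.record_correction("an ambiguous headline", "business")
```

The point of the design is that the fallback path and the feedback loop exist from day one, rather than being bolted on after the first visible failure.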

Infrastructure and ML Ops

Machine Learning Operations (ML Ops) is one of the trendiest areas at the moment, and for good reason. If used effectively, it will be a multiplier for the company’s data science impact. When deploying models is easy, scalable and safe, data scientists will run more experiments. When models can be tested, debugged and monitored in production, many problems will be detected more quickly. These could include internal problems (e.g., bugs in some of the models) as well as external factors (e.g., shifts in data distribution or in topics).

While ML Ops is becoming more popular, it is still difficult to prioritize this type of work in a growing company. This type of investment takes effort and will probably carry an opportunity cost in the short term. However, it will pay off in the medium and long term. There are other aspects related to the mindset of data science development that can be very useful at this stage. As a company grows, ensuring reproducibility and replicability for all meaningful experiments, and being able to seamlessly rerun (or reuse) code from old experiments, will increase the speed and reduce the frustration of the data science function.
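To give a flavour of what monitoring for data distribution shift can look like, here is a minimal sketch of one common drift measure, the population stability index (PSI), computed between a reference sample and live data for a single numeric feature. The function name, the bin count and the 0.2 alert level are assumptions for illustration (0.2 is a frequently quoted rule of thumb, not a standard), and real monitoring would cover many features and metrics.

```python
import math
from typing import Sequence


def population_stability_index(
    reference: Sequence[float],
    live: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Compare two samples of one feature; 0 means identical binned distributions."""
    if not reference or not live:
        raise ValueError("both samples must be non-empty")

    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid a zero width for constant features

    def proportions(values: Sequence[float]) -> list:
        counts = [0] * bins
        for value in values:
            index = int((value - lo) / width)
            index = min(max(index, 0), bins - 1)  # clamp out-of-range values
            counts[index] += 1
        # eps keeps empty bins from causing a division by zero or log(0)
        return [max(count / len(values), eps) for count in counts]

    ref_p = proportions(reference)
    live_p = proportions(live)
    return sum((l - r) * math.log(l / r) for r, l in zip(ref_p, live_p))


# Synthetic data: the live sample is shifted towards higher values.
reference_sample = [i / 100 for i in range(100)]
live_sample = [0.5 + i / 200 for i in range(100)]

psi = population_stability_index(reference_sample, live_sample)
if psi > 0.2:  # assumed alert level
    print(f"Possible distribution shift, PSI = {psi:.2f}")
```

A check like this is cheap to run on a schedule, which is what makes it useful: the earlier a shift is flagged, the less time a degraded model spends in front of users.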
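As a small sketch of what reproducibility can mean in practice, the example below fixes the random seed from the experiment configuration and stores a fingerprint of that configuration with the result, so an old experiment can be rerun and checked. The function names and the configuration keys (`seed`, `n_samples`) are hypothetical, and the "experiment" is a stand-in for real training and evaluation code.

```python
import hashlib
import json
import random


def config_fingerprint(config: dict) -> str:
    """Short, stable identifier for a configuration, independent of key order."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def run_experiment(config: dict) -> dict:
    """Run a stand-in experiment whose only randomness comes from config['seed']."""
    rng = random.Random(config["seed"])  # local generator, no hidden global state
    n = config["n_samples"]
    score = round(sum(rng.random() for _ in range(n)) / n, 6)
    return {
        "fingerprint": config_fingerprint(config),
        "config": dict(config),
        "score": score,
    }


config = {"seed": 42, "n_samples": 1000, "model": "baseline"}
first = run_experiment(config)
second = run_experiment(config)
print(first["fingerprint"], first["score"] == second["score"])
```

Storing the fingerprint alongside every reported number makes it possible to answer, months later, exactly which settings produced a given result.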

Conclusion

Scaling data science in a scaleup is not a simple task. In addition to the challenges faced across the business (communication, direction and focus, people growth and agility), it has its own unique difficulties. The situation is further complicated by the fact that some of the approaches and behaviors that worked well in the start-up stage are not well suited to this new phase of growth.

I believe that the most important point to remember as the company grows is to understand clearly why and how the efforts of the function affect the company’s goals, and then to support the people in the function and teams as well as possible so that they can deliver solutions with a clear and tangible impact company-wide.

Dr. Miguel Martinez is co-founder and Chief Data Scientist at Signal AI
