Meta Unveils Llama 3, the Most Powerful Open Source Model Yet

Meta unveils new Llama 3 model trained on a dataset seven times larger than the one used to build Llama 2


At a Glance

- Meta's new Llama 3 claims AI performance crown as the "most capable" open source model yet.

- The company unveils state-of-the-art 8B and 70B parameter versions of its Llama language model.

- The new models boast architectural innovations and pretraining improvements powering superior performance.

Meta has unleashed Llama 3, its next-generation open-source language model that establishes new performance heights in reasoning, code generation and instruction following.

The company is touting Llama 3 as “the most capable openly available” large language model to date, outclassing offerings from rivals like Google and Anthropic at similar scales.

The Llama series of large language models is among the most important in the AI space, powering many applications and forming the basis for models that developers have built on top of it, including Vicuna and Alpaca.

“This next generation of Llama demonstrates state-of-the-art performance on a wide range of industry benchmarks and offers new capabilities, including improved reasoning,” according to Meta’s announcement.


The new models understand language nuances and can handle complex tasks like translation and dialogue generation.

Meta boosted the model’s scalability and performance, allowing Llama 3 to handle multi-step tasks. The Facebook parent company boasted that the model “drastically elevates capabilities” like reasoning, code generation and instruction following.

Llama 3 also has a lower prompt refusal rate than prior versions, as Meta’s AI engineers adopted a more refined post-training process, which also boosted the diversity of the model’s answers.

Llama 3 comes in two sizes: an eight billion parameter version, which is slightly larger than the prior smallest Llama model, and a 70 billion parameter version.

Both versions have a context length of 8k tokens, meaning they can handle inputs of around 6,000 words.
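As a rough back-of-the-envelope check of that figure, the sketch below converts a token budget into an approximate word count. The 0.75 words-per-token ratio is a common rule of thumb for English text and is an assumption here, not a number from Meta, and "8k" is assumed to mean 8,192 tokens.

```python
# Rough conversion from a context window in tokens to English words.
# WORDS_PER_TOKEN is a rule-of-thumb assumption, not a figure from Meta.
WORDS_PER_TOKEN = 0.75
CONTEXT_TOKENS = 8 * 1024  # assuming "8k" means 8,192 tokens


def approx_words(tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Estimate how many English words fit in a given token budget."""
    return int(tokens * words_per_token)


print(approx_words(CONTEXT_TOKENS))  # 6144
```

The real ratio depends on the tokenizer and the text, so the result is best read as an order-of-magnitude estimate.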

Businesses can build with Llama 3 from today. It’s available to download from Meta’s website. It’s also accessible on cloud services, including AWS via Amazon SageMaker JumpStart.

The Llama 3 models are also coming to Databricks, Google Cloud, Hugging Face, IBM watsonx, Nvidia NIM and Microsoft Azure, among many others.

The model supports hardware from a variety of providers including AMD, AWS, Dell, Intel, Nvidia and Qualcomm.

The latest models are powering Meta AI, Meta’s new chatbot solution, which is currently limited to responses in English to users in a dozen countries, including the U.S., Canada, Singapore and New Zealand.

More to Come

Meta announced the launch of two Llama 3 models but said these are “just the beginning.”

Previously, Meta shipped a 13 billion parameter version when it launched Llama 2, and the company is planning to launch larger versions, as well as Long versions with improved memory and context lengths, like Llama 2 Long.

The Llama developer did provide a glimpse of one model to come: a version of Llama 3 that’s a whopping 400 billion parameters in size. That would possibly make it the largest open source model ever released.

While Meta is still training those systems, it did showcase early model performance details, with an early version of the 400 billion parameter model achieving impressive scores on industry-standard benchmarks.

“Our team is excited about how they’re trending,” the company said.

Reacting to the launch, Jim Fan, senior AI research scientist at Nvidia Research, said the 400 billion version, when launched, will “mark the watershed moment that the community gains open-weight access to a GPT-4-class model.”

“[The 400 billion parameter model] will change the calculus for many research efforts and grassroot startups. I pulled the numbers on Claude 3 Opus, GPT-4-2024-04-09 and Gemini,” Fan said on X (formerly Twitter).

Performance and Architecture

Credit: Meta

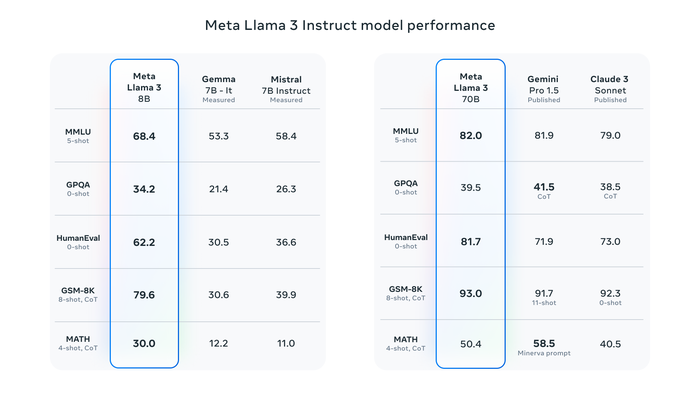

The new large language models are powerful, achieving state-of-the-art performance on industry benchmarks like MMLU and HumanEval.

The release features pretrained and instruction-fine-tuned models that demonstrate state-of-the-art performance on industry benchmarks and offer improved reasoning and coding capabilities compared to previous versions.

The smaller Llama 3 achieved markedly higher scores than Google’s Gemma-7B and Mistral’s Mistral-7B Instruct.

The larger Llama 3 model, meanwhile, outscored both Google’s Gemini Pro 1.5 and Anthropic’s Claude 3 Sonnet. However, both of those models are closed systems, meaning Meta had to rely on the scores their respective developers published.

Meta’s chief AI scientist Yann LeCun said on X (formerly Twitter) that the team behind Llama 3 had even seen results where the smaller model outpaced the larger version.

The models performed well due to their underlying architecture, with improvements made to Meta’s post-training procedures that “substantially reduced false refusal rates, improved alignment, and increased diversity in model responses.”

Llama 3 uses a decoder-only transformer architecture. Compared to Llama 2, it uses a tokenizer with a vocabulary of 128K tokens that encodes language more efficiently.

In simple terms, the underlying architecture allows the model to be better at generating text compared to previous iterations.

Meta also adopted grouped query attention (GQA), which improves the model’s efficiency at inference time.
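The idea behind GQA is that several query heads share a single key/value head, so fewer key/value tensors need to be stored while the model generates text. The minimal sketch below shows only that head-sharing pattern, not a full attention layer. The function names and the head counts (32 query heads, 8 key/value heads) are illustrative assumptions, not figures from Meta's announcement.

```python
# Grouped query attention (GQA): several query heads share one key/value head,
# so fewer key/value tensors have to be cached during generation.
# The head counts below are illustrative assumptions.


def kv_head_for(query_head: int, n_query_heads: int, n_kv_heads: int) -> int:
    """Return the index of the key/value head that a query head reads from."""
    if n_query_heads % n_kv_heads != 0:
        raise ValueError("n_query_heads must be a multiple of n_kv_heads")
    group_size = n_query_heads // n_kv_heads
    return query_head // group_size


def kv_cache_ratio(n_query_heads: int, n_kv_heads: int) -> float:
    """Key/value cache size relative to standard multi-head attention."""
    return n_kv_heads / n_query_heads


N_QUERY_HEADS, N_KV_HEADS = 32, 8
mapping = [kv_head_for(q, N_QUERY_HEADS, N_KV_HEADS) for q in range(N_QUERY_HEADS)]
print(mapping[:8])  # [0, 0, 0, 0, 1, 1, 1, 1]
print(kv_cache_ratio(N_QUERY_HEADS, N_KV_HEADS))  # 0.25
```

With these assumed numbers, each group of four query heads reads from one key/value head, and the key/value cache is a quarter of the size it would be if every query head had its own.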

To support responsible deployment, Meta introduced new tools like Llama Guard 2 for content filtering based on the MLCommons taxonomy, CyberSec Eval 2 to assess risks related to code generation and Code Shield for runtime filtering of insecure code outputs.

The Data Powering Llama 3

While Meta has not disclosed the dataset it used to train Llama 3, the company did reveal it was built using a “large, high-quality training dataset” that’s seven times larger than the one used for Llama 2 and includes four times as much code.

Meta said it opted for a heavy emphasis on pretraining data, or the data that’s used to perform initial training of a model, using over 15 trillion tokens from publicly available sources.

More than 5% of that dataset is high-quality non-English data from more than 30 languages, though Meta said it does not expect the same level of performance in these languages as in English.
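To put those proportions in perspective, the quick calculation below works out a lower bound for the non-English portion from the rounded figures quoted above. The even split across languages is purely an assumption for illustration; Meta has not published a per-language breakdown.

```python
# Lower-bound estimate of the non-English share of the pretraining data,
# using the rounded figures quoted above ("over 15 trillion", "more than 5%").
TOTAL_TOKENS = 15 * 10**12
NON_ENGLISH_PERCENT = 5
LANGUAGES = 30

non_english_tokens = TOTAL_TOKENS * NON_ENGLISH_PERCENT // 100
# Assumption for illustration only: tokens are spread evenly across languages.
per_language = non_english_tokens // LANGUAGES

print(f"{non_english_tokens:,}")  # 750,000,000,000
print(f"{per_language:,}")  # 25,000,000,000
```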

Llama 3 also leverages a series of data-filtering pipelines to clean pretraining data. Among those pipelines are filters for not-safe-for-work (NSFW) content and text classifiers that predict data quality.
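Meta has not published its filters, so the following is only a toy sketch of how such a pipeline might chain checks together. The blocklist, the character-based quality score and the 0.8 threshold are all hypothetical stand-ins for the NSFW filter and the learned quality classifiers.

```python
from typing import Callable, Iterable, List

# Hypothetical stand-ins: Meta has not published its filters or classifiers.
BLOCKLIST = {"nsfw_term"}  # placeholder token, not a real word list


def passes_nsfw_filter(text: str) -> bool:
    """Reject documents that contain any blocklisted word."""
    words = set(text.lower().split())
    return not (words & BLOCKLIST)


def quality_score(text: str) -> float:
    """Toy quality heuristic: share of characters that are letters or spaces."""
    if not text:
        return 0.0
    good = sum(ch.isalpha() or ch.isspace() for ch in text)
    return good / len(text)


def passes_quality_filter(text: str, threshold: float = 0.8) -> bool:
    """Keep documents whose toy quality score meets the assumed threshold."""
    return quality_score(text) >= threshold


def run_pipeline(
    docs: Iterable[str], filters: List[Callable[[str], bool]]
) -> List[str]:
    """Keep only the documents that pass every filter, in order."""
    return [doc for doc in docs if all(f(doc) for f in filters)]


docs = [
    "Llama 3 uses a decoder-only transformer architecture.",
    "buy now nsfw_term click here",
    "$$$ 1234 #### 5678 !!!!",
]
kept = run_pipeline(docs, [passes_nsfw_filter, passes_quality_filter])
print(kept)  # only the first document survives both filters
```

In a production system the quality check would be a trained classifier rather than a character count, but the overall shape, a sequence of independent pass/fail checks applied to each document, would likely be similar.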

The Llama 3 dataset contains synthetic data too. Meta used the prior Llama 2 model to generate the training data for the text-quality classifiers.

The model was trained on two custom-built data center-scale GPU clusters, which each contain 24,576 Nvidia H100 GPUs. Meta disclosed the infrastructure used to train the model in March. It’s also using the mammoth hardware stack for wider AI research and development.

Despite not disclosing the training data it used, Meta affirmed its commitment to open source development.

“We have long believed that openness leads to better, safer products, faster innovation, and a healthier overall market. This is good for Meta, and it is good for society,” the company said. “We’re taking a community-first approach with Llama 3, and starting today, these models are available on the leading cloud, hosting, and hardware platforms with many more to come.”
