AI Everywhere and the Implications for Hardware

Enterprises are adopting AI faster than you think, but the real value is still ahead of us – according to a new Omdia survey, commissioned by Intel.

March 28, 2023

Sponsored Content

At a Glance

- Operationalising new AI applications drives a need for more hardware and software efficiency

- Enterprises adopting AI have more distinct and diverse models than previously assumed

- There is a high ratio of training to inference, and CPUs remain the most common choice for AI model training

The pace of AI adoption continues to surge, from the traditional use cases of chatbots and virtual assistants to ever newer applications (such as Intel showcasing a live AI/video analytics solution for Search & Rescue).

In tracking publicly announced projects, Omdia observed a 2019-2020 surge driven by chatbots and virtual assistants. Since 2020, excitement around this category has cooled and growth has shifted into the categories of process optimization, predictive analytics, and customer experience.

Omdia sees this as a shift from low-hanging fruit – projects that are easy to demonstrate, show quick wins, and often come ready-made from a vendor – to a deeper digital transformation that engages AI in fundamental business decisions and processes. This second wave of adoption generates more value, although over a longer payoff period and with more investment needed to operationalize. Data scientists and software developers are key to this process, working with both line-of-business domain experts and infrastructure engineers. In most cases, this shift will be towards greater computational complexity as well as greater integration with the core business, making demands of the technology as well as the people.

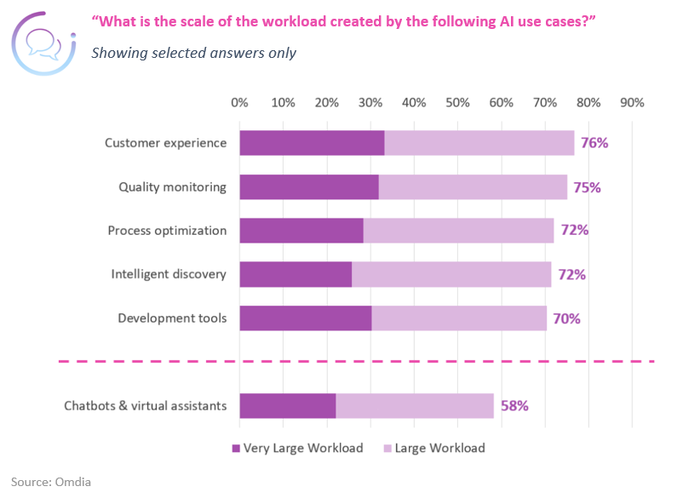

A new Omdia survey of 304 global enterprises, commissioned by Intel, found that rising use cases are already driving greater demand for compute, with several categories ranking higher than “chatbots and virtual assistants” as a “very large” or “large” compute workload.

These applications are also proving harder to operationalize. Newer projects are less likely to have been implemented so far: Omdia data shows chatbots are by far the easiest projects to implement, followed by visual analytics, while the projects of the future are running at an implementation rate of around 10-20%.

That said, the pace of AI adoption continues to increase rapidly, as it expands across all geographies, enterprises and vertical industries.

What does this mean for your production AI architecture?

As AI applications push forward, the full development cycle becomes increasingly important, from data collection and preparation (such as in the Intel project example) through to production and inference.

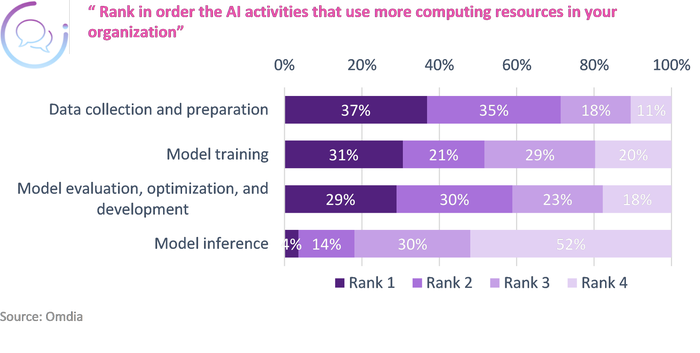

New models and applications are released frequently, as are new data sets, and inference is not necessarily served at scale. Survey results showed that substantially more computing resources are used for data preparation, model training, and model evaluation than for inference serving. Only infrastructure engineers thought training was a bigger workload than data preparation. This is a reminder that it’s important to have visibility over compute utilization across your workloads, with monitoring tools such as the infrastructure dashboard in cnvrg.io.
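As a rough illustration of what workload-level visibility can mean in practice, the sketch below totals compute hours per pipeline stage and reports each stage's share. The function name, the stage labels, and the job records are all hypothetical; the numbers are made up for the example and are not survey results, and this is not how cnvrg.io or any particular monitoring tool works.

```python
from collections import defaultdict


def compute_share_by_stage(jobs):
    """Return each stage's share of total compute hours as a fraction.

    `jobs` is an iterable of (stage_name, compute_hours) pairs.
    """
    totals = defaultdict(float)
    for stage, hours in jobs:
        totals[stage] += hours
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {stage: hours / grand_total for stage, hours in totals.items()}


# Hypothetical job log: values are invented purely for illustration.
example_jobs = [
    ("data_preparation", 12.0),
    ("training", 9.0),
    ("evaluation", 3.0),
    ("inference", 4.0),
    ("data_preparation", 2.0),
]

if __name__ == "__main__":
    shares = compute_share_by_stage(example_jobs)
    # Print stages from largest to smallest share of compute.
    for stage, share in sorted(shares.items(), key=lambda item: -item[1]):
        print(f"{stage:>18}: {share:.0%}")
```

In a real deployment the records would come from a scheduler or monitoring export rather than a hard-coded list.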

Hardware choices will be rather different from those facing a hyperscale operation serving the same model to an Internet-sized audience. Flexibility and mix will dominate over headline TOPS numbers and throughput. The mix of configurations across the fleet of servers will have to be richer in CPU compute, a view backed by the survey results: 45% of respondents said CPUs were their primary AI model training option.

Conclusions

As the enterprise AI market shifts gears and adoption ramps up, developers are working with more models, of higher complexity and diversity, and with more model training projects than ever before. Good AI outcomes come from considering these needs from the outset, so that developers are enabled to deliver.

Real enterprises deploying AI need an efficient software development process, running on infrastructure that can cope with a high ratio of training and development work to inference. This means a wide selection of performant CPU-based instance types to support their developers and data scientists. Forthcoming CPUs are increasingly likely to include AI inference acceleration as part of the core instruction set – using technologies such as Intel’s AMX (Advanced Matrix Extensions).
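One way to check whether a given Linux server exposes AMX is to look at the CPU feature flags the kernel lists in `/proc/cpuinfo`. The sketch below assumes a Linux host and assumes that AMX-capable processors report flags such as `amx_tile`, `amx_bf16`, and `amx_int8`; the helper names are hypothetical, and the check says nothing about whether a particular framework will actually use the instructions.

```python
from pathlib import Path

# Flag names assumed to be reported by the Linux kernel on AMX-capable CPUs.
AMX_FLAGS = {"amx_tile", "amx_bf16", "amx_int8"}


def amx_flags_in(cpuinfo_text):
    """Return the AMX-related flags found in /proc/cpuinfo-style text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            # The first "flags" line is enough for a simple presence check.
            return AMX_FLAGS & set(value.split())
    return set()


def detect_amx(path="/proc/cpuinfo"):
    """Read the given cpuinfo file; return an empty set if it is unavailable."""
    try:
        return amx_flags_in(Path(path).read_text())
    except OSError:
        return set()


if __name__ == "__main__":
    found = detect_amx()
    print("AMX flags reported:", ", ".join(sorted(found)) or "none")
```

On non-Linux systems, or where `/proc/cpuinfo` is not readable, the function simply reports no flags.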

Read the full Omdia and Intel whitepaper here.
