Is Machine Learning Everywhere? Not Quite, But It Could Be

June 1, 2018

By Nisha Talagala

Over the last few years, there has been no shortage of discussion about artificial intelligence (AI) and machine learning (ML). From self-driving cars to smart home devices, we’ve heard it all. However, despite all of the hype around AI and ML, few companies are actually using them.

According to research from McKinsey Global Institute, only 20% of companies have deployed any AI technology and only 10% have deployed three or more. Out of 160 AI use cases examined, only 12% had progressed beyond the experimental stage. But successful early AI adopters report profit margins that are 3-15% higher than the industry average, so for the remaining 88% of use cases that have not reached production, there is a great deal of untapped potential.[1]

In order for companies to take advantage of all that AI and ML have to offer, and to generate a positive return on investment (ROI) using ML, they must understand the challenges that come with putting them into production as well as what it will take for them to successfully deploy, manage, and scale across their entire business.

Barriers to ML Implementation Across the Enterprise

There are a number of challenges that companies may face when attempting to deploy ML in their business, including:

Real-World Dynamism: Incoming data feeds can change dramatically in production, possibly beyond the range of data that was evaluated in the data scientist's sandbox.

Mismatched Expertise: Operating ML in production requires the combined skills of both IT operations and data scientists, not just one or the other. IT operations professionals are experts in deploying and managing software and services in production, whilst data scientists are experts in the algorithms and the associated mathematics.

Complexity of ML: Unlike other analytics, such as rule-based relational databases or pattern-matching key-value systems, ML algorithms are at their core mathematical functions whose data-dependent behaviour is not intuitive to most individuals.

Reproducibility and Diagnostics: As ML algorithms can be probabilistic, there is no consistently “correct” result. For example, even for the same input data, many different outputs are possible depending on what recent training has occurred, among other factors.

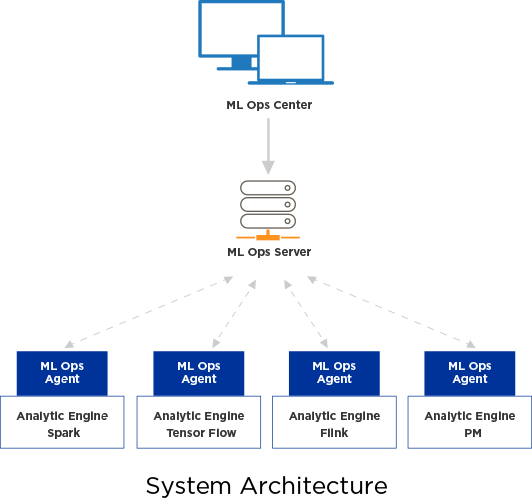

Inherent Heterogeneity: In contrast to other application spaces such as databases, there is no single type of analytic engine used for ML. Because countless ML algorithms exist today, many specialised analytic engines have emerged, each focusing on a different class of algorithm. Practical ML solutions frequently combine different algorithmic techniques, requiring the production deployment to leverage multiple engines.
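To make the first challenge, real-world dynamism, more concrete, here is a minimal sketch of one way a team might check whether a live data feed has moved away from the data seen in the sandbox. It uses the Population Stability Index (PSI), a common drift score; this is an illustrative choice and not a method named in this article. The function name, the ten equal-width bins and the 0.2 alert threshold (a widely quoted rule of thumb) are all assumptions.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float], live: Sequence[float], bins: int = 10
) -> float:
    """Score how far `live` has drifted from `baseline` (0.0 means identical shares)."""
    lo, hi = min(baseline), max(baseline)
    # Equal-width bins over the baseline range; fall back to 1.0 if all values are equal.
    width = (hi - lo) / bins or 1.0

    def bucket_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the baseline range are clamped into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each share at a tiny value so the logarithm below is always defined.
        return [max(c / len(values), 1e-6) for c in counts]

    base = bucket_shares(baseline)
    current = bucket_shares(live)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, current))


# Hypothetical data: the live feed is the sandbox data shifted upwards by 50.
sandbox = [float(v) for v in range(100)]
production = [v + 50.0 for v in sandbox]

DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb alert level, tune per use case
if population_stability_index(sandbox, production) > DRIFT_THRESHOLD:
    print("Drift detected: live data differs from the sandbox data")
```

A check like this would typically run on a schedule against each important input feature, so that IT operations can raise an alert and data scientists can decide whether retraining is needed.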

How to Operationalise ML

Successfully deploying ML across an enterprise is no easy feat. All areas of the organisation must learn how to work together and understand the steps they need to take. To do this effectively, many are beginning to adopt MLOps (a compound of “machine learning” and “operations”). MLOps is a practice for collaboration and communication between an organisation's academic (e.g., data scientists, data engineers and developers) and economic (e.g., IT, operations and governance staff, and business analysts) professionals during the deployment of ML in production.

As a standard practice, MLOps enables organisations to code ML management tasks and optimally execute the ML lifecycle. There are several key areas that MLOps takes into account, including:

Defining the ML application

Success metrics (key performance indicators)

Risk management

Governance and compliance

Automation

Scale

Collaboration
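As an illustration of how a few of these areas (success metrics, risk management, governance and automation) might be expressed as code, here is a minimal sketch of an automated release check. The class and function names, the accuracy metric and the threshold values are all hypothetical and are not taken from any particular MLOps product.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class ReleasePolicy:
    """Hypothetical policy agreed between data science and operations."""

    metric_name: str            # the success metric (KPI) being tracked
    minimum_value: float        # lowest acceptable score for any release
    max_drop_vs_current: float  # risk limit: allowed drop versus production


def approve_release(
    policy: ReleasePolicy, candidate_score: float, production_score: float
) -> Tuple[bool, List[str]]:
    """Return (approved, reasons); the reasons act as a simple audit trail."""
    reasons = []
    if candidate_score < policy.minimum_value:
        reasons.append(
            f"{policy.metric_name} {candidate_score:.2f} is below "
            f"the minimum {policy.minimum_value:.2f}"
        )
    if production_score - candidate_score > policy.max_drop_vs_current:
        reasons.append(
            f"{policy.metric_name} drops by more than "
            f"{policy.max_drop_vs_current:.2f} versus production"
        )
    return (not reasons, reasons)


# Assumed example values, for illustration only.
policy = ReleasePolicy("accuracy", minimum_value=0.90, max_drop_vs_current=0.01)
approved, reasons = approve_release(policy, candidate_score=0.85, production_score=0.92)
print(approved, reasons)
```

Keeping the policy in a small, version-controlled object like this gives both groups a single place to agree on what "good enough" means before a model is promoted.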

By implementing the practice of MLOps, all areas of an organisation can not only tackle the many challenges they may face when working to deploy ML across the enterprise, but also work together seamlessly and address any issues that may arise. As more organisations adopt an MLOps approach to putting ML into production, we’ll begin to see more real-world usage of ML, and businesses will start to see a return on their investment in ML.

[1] McKinsey Global Institute, Artificial Intelligence: The Next Digital Frontier?, 2017

Nisha Talagala

Nisha Talagala is the Co-Founder, CTO and VP of Engineering at ParallelM. She has more than 15 years of expertise in software development, distributed systems, I/O solutions, persistent memory, and flash. She holds 54 patents in distributed systems, networking, storage, performance and non-volatile memory and serves on multiple industry and academic conference program committees.
