Case Study: AI for Customer Engagement at Google

In this paper, Google's Customer Engagement team explains how it uses AI to delight billions of users and reveals its flywheel for success.

August 9, 2023


First published in Management and Business Review, which is backed by the business schools of Cornell, Dartmouth, INSEAD and other top universities.

Google was founded with the goal ‘to organize the world’s information and make it universally accessible and useful’ and is guided by a simple tenet: Focus on the user and all else will follow. Today, the company is home to several consumer products with more than one billion users and multiple business products with more than one million users.

While these products succeeded by focusing on the user, there’s another thread that ties them together: Each one experienced and was managed through an exponential growth phase. You cannot solve exponential problems with linear solutions.

The Customer Engagement organization at Google is responsible for being the human face of the company, facilitating billions of interactions each month. As the business scales, the number of engagements grows even faster. These engagements range from a customer filing a ticket to fix an issue all the way to an in-person meeting with a representative to help the client achieve business objectives.

Our interactions start with customer support from tens of thousands of Google representatives and expand to include custom tools and processes that bring significant improvement to human interaction at scale. Today, we have taken this one step further by delivering AI-enhanced experiences that bring even more value to the customer.


This is the story of how we managed this growth in our marketing, sales, and support teams while delivering great experiences to users. We start with a customer-first mindset, offer a prioritization framework, present case studies detailing the AI we employed and its impact on customer engagement, and conclude with lessons learned.

Customer-first customer engagement

Customer expectations continuously grow, and younger customers continue to drive patterns that become widely adopted across all age groups. Today’s trends include frictionless content creation, hyper-personalized feeds, and snackable content. In this environment, nobody has the time or patience to wait on the phone. Digital experiences should ‘just work’, and when they do not, customers want to solve the problem on their own terms with the least amount of friction and effort.

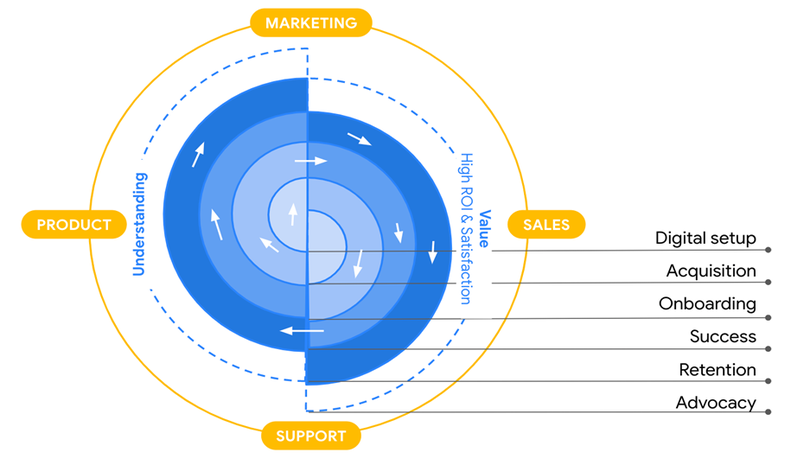

Our customers want one personalized, proactive, and contextual Google experience across all surfaces or touchpoints in a way that respects privacy. Sophisticated software is required to deliver on this promise, so we built a simple customer-first model to guide our use of AI in customer engagement.

The customer success flywheel shows how we build solutions informed by deep customer understanding, with the key success criterion being customer value delivered at high satisfaction. This starts at the customer’s earliest engagement with Google (middle of the flywheel), and as we learn more about our customers in product, marketing, sales, and support, we are able to deliver more customer value.

This creates a positive feedback loop and enables every surface to be customer-aware and deliver ‘one size fits one’ solutions at a global scale across the breadth of customers we serve. Our success metrics are similarly customer-oriented around customer understanding, value, and satisfaction.

CUSTOMER SUCCESS FLYWHEEL

Prioritization framework

We prioritize the best customer experience and outcome regardless of how we deliver it. One way for us to confirm that we are optimizing properly is to ask if we would be comfortable sharing our metrics dashboards with our customers. As organizations are pressured to focus on metrics within their control, it is easy to optimize for success that is not customer-informed or for the customer’s benefit. By anchoring our work in customer understanding, value, and satisfaction, we are able to orient our efforts toward our customers' needs.

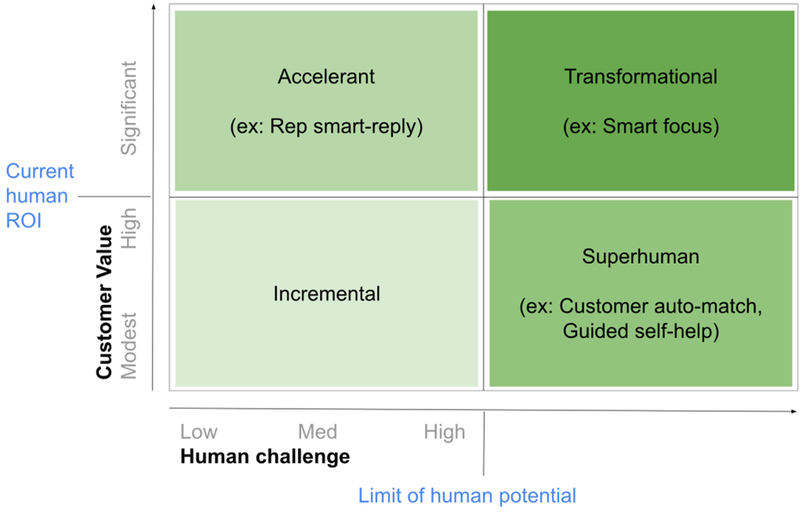

To structure our thinking about these opportunities, we built a framework for customer engagement transformation that shows why AI is needed to break out of the ‘Incremental’ quadrant and guides us to push the limits of what is possible for humans.

FRAMEWORK FOR CUSTOMER ENGAGEMENT TRANSFORMATION

The y-axis focuses directly on customer value and reflects the current, inherent limits of human ROI, given that labor is not free. This highlights the need for solutions that raise productivity, such as smart reminders, meeting scheduling, and automated pitch-deck creation, so that our customers get more value. The x-axis focuses on how challenging tasks are for humans. This motivates powerful AI assistance, especially for tasks whose volume, complexity, and optimization opportunities quickly grow too complex for humans to fully understand and maximize for the best customer outcome.

The path to transformative impact is built on top of foundational AI research. Within Alphabet, we are lucky to have world-class research teams such as Google Research and Google DeepMind (formed in 2023 from DeepMind and the Brain team in Google Research) pioneering breakthroughs in AI. We are pushing toward a future that delivers a coordinated set of hyper-personalized solutions that combine the best of human and technology intelligence and deliver the most customer value over the long term.

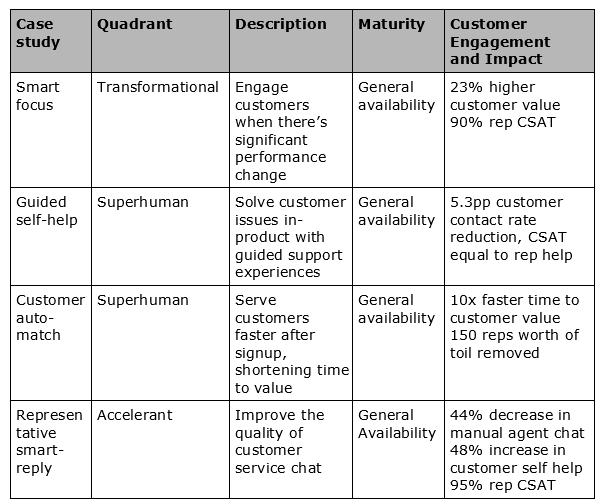

Below are four case studies where we have made significant progress:

Smart focus: Engage customers to significantly improve outcomes

We are developing tools to help our sales and support representatives manage a myriad of simultaneous objectives: serving customer needs, hitting KPIs, and helping run a high-performance organization. They have access to insights that can help our customers meet their business objectives, but because the scope is so broad, it can be paralyzing to decide where to spend their time. Our objective was to build AI that helps them focus better, resulting in improved outcomes for our customers.

Innovative AI application

Past efforts to direct human attention often used hard-coded conditional logic and heuristics to approximate average performance, but we set out to achieve three things:

We leveraged AI to build an intelligent detector that automatically tunes its sensitivity for different representatives and customers. Standard anomaly detection would not work across such a wide variety of customer data at different scales. Tuning sensitivity this way keeps the signal-to-noise ratio very high, so representatives listen when we have something to say.

We converted heuristic-explanation problems into multi-dimensional, multi-metric correlation and attribution models, which significantly reduced computational complexity and helped us achieve impact at scale. This ensures that whatever opportunity we raise, we can give our representatives a rationale they can evaluate and explain to a customer or colleague.

We designed an experimentation environment that enabled causal measurement of the impact and provided feedback loops to improve and train the model.
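The first of these ideas, a detector whose sensitivity adapts to each customer’s scale and keeps each representative’s alert volume low, can be illustrated with a small sketch. The paper does not describe its model, so this example assumes a robust z-score (median and median absolute deviation) computed on each customer’s own history, which makes scores comparable across customers of very different sizes, plus a hypothetical per-representative alert budget. All names, thresholds, and data are illustrative.

```python
import statistics
from typing import Dict, List, Tuple

def robust_z(history: List[float], latest: float) -> float:
    """Score the latest value against the series' own history (scale-free)."""
    med = statistics.median(history)
    # Median absolute deviation; 1.4826 makes it comparable to a standard deviation
    mad = statistics.median(abs(x - med) for x in history) * 1.4826
    if mad == 0:
        return 0.0 if latest == med else float("inf")
    return (latest - med) / mad

def alerts_for_rep(
    series: Dict[str, Tuple[List[float], float]],
    budget: int = 2,
    min_score: float = 3.0,
) -> List[Tuple[str, float]]:
    """Return at most `budget` customers whose latest metric is most anomalous.

    `series` maps a customer id to (history, latest value). `budget` and
    `min_score` are hypothetical knobs that keep each representative's
    signal-to-noise ratio high.
    """
    scored = [(cid, abs(robust_z(hist, latest))) for cid, (hist, latest) in series.items()]
    flagged = [s for s in scored if s[1] >= min_score]
    flagged.sort(key=lambda s: s[1], reverse=True)
    return flagged[:budget]

# Hypothetical book of business for one representative
book = {
    "small_shop": ([100, 102, 98, 101, 99], 60),  # drop of 40 on a small base
    "large_brand": ([50_000, 50_400, 49_800, 50_200, 49_900], 49_700),  # normal noise
    "mid_agency": ([5_000, 5_100, 4_900, 5_050, 4_950], 6_000),  # unusual spike
}
# Flags small_shop and mid_agency; large_brand's larger absolute dip is within its usual range
print(alerts_for_rep(book))
```

The design choice worth noting is that each customer is compared only with itself, so a fixed threshold such as `min_score` behaves similarly for a small shop and a large brand, which a single global threshold on raw values would not.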

Resulting customer engagement

As a result of these initiatives, representatives start their day knowing whether there is a high-priority item to engage on. When there is, they can investigate the context, prepare the right customer response, and have a timely, well-placed conversation to help.

So far, customer and representative outcomes have materially improved. Customers avoided unplanned campaign delays, added to high-ROI campaigns, optimized their campaigns, and improved the efficiency of their spend with Google.

In aggregate, customers found 23.5% more value with Google (95% confidence interval (CI): 15% to 32%), measured as customer spend over the subsequent three weeks compared with the control group of a randomized controlled trial. Representatives also appreciated the system’s help, giving it a satisfaction score of 90%, double the sentiment for previous non-AI attempts in this space.
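A lift with a confidence interval, like the one above, compares the mean outcome of treated customers with that of the control group. The paper does not say how its interval was computed; a minimal sketch, assuming per-customer spend for each arm and a percentile bootstrap for the relative lift in mean spend, looks like this. The data are synthetic and the function names are hypothetical.

```python
import random
import statistics
from typing import List, Tuple

def relative_lift(treat: List[float], control: List[float]) -> float:
    """Relative difference in mean spend: (mean_treat - mean_control) / mean_control."""
    mean_c = statistics.fmean(control)
    return (statistics.fmean(treat) - mean_c) / mean_c

def bootstrap_lift_ci(
    treat: List[float],
    control: List[float],
    n_boot: int = 2000,
    alpha: float = 0.05,
    seed: int = 0,
) -> Tuple[float, float, float]:
    """Point estimate and percentile-bootstrap (1 - alpha) CI for the relative lift."""
    rng = random.Random(seed)
    lifts = []
    for _ in range(n_boot):
        # Resample each arm independently, with replacement
        t = rng.choices(treat, k=len(treat))
        c = rng.choices(control, k=len(control))
        lifts.append(relative_lift(t, c))
    lifts.sort()
    lower = lifts[int(alpha / 2 * n_boot)]
    upper = lifts[int((1 - alpha / 2) * n_boot) - 1]
    return relative_lift(treat, control), lower, upper

# Synthetic spend data (not from the paper): treatment spend is scaled up by 20%
data_rng = random.Random(42)
control = [data_rng.lognormvariate(3.0, 1.0) for _ in range(1000)]
treat = [1.2 * data_rng.lognormvariate(3.0, 1.0) for _ in range(1000)]
point, lower, upper = bootstrap_lift_ci(treat, control)
print(f"lift = {point:.1%}, 95% CI [{lower:.1%}, {upper:.1%}]")
```

The bootstrap is used here because spend is heavily skewed and the quantity of interest is a ratio, where a simple normal approximation can be less reliable; a delta-method interval would be a reasonable alternative.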

Guided self-help: Diagnose and resolve issues using scalable, intelligent support

Each year, billions of customers interact with hundreds of Google’s consumer products such as Search, Android, and YouTube. As Google’s offerings and customer base grow in diversity, so does the complexity of issue identification and resolution.

Customers can ask for help in many different ways, and the problems they raise can have many potential root causes. One way Google Support scales self-help offerings, increases representative productivity, and improves customer satisfaction and resolution is with large language models (LLMs) such as Multitask Unified Model (MUM) and Language Model for Dialogue Applications (LaMDA).


These promising LLM applications at scale orient us toward an AI-guided support experience. Our goal is for customers to interact naturally with intelligent, automated support, so that they feel empowered by self-help and prefer it over other support options. We have invested in the two critical steps of addressing customers’ issues: diagnosis and resolution.

For diagnosis, we determine the root cause of an issue by looking at a set of ‘symptoms’. For resolution, we help the customer choose the appropriate course of action based on the facts. Our incumbent self-help experience is rich in information, encompassing help articles and representative transcripts. But customers still bear a significant burden in both steps: articulating their issue, navigating through a tree of symptoms and root causes, and trying out different resolutions.

Innovative AI application

Our strategy lightens the customer’s burden in diagnosis and resolution. For diagnosis, we use cutting-edge LLM applications to understand issues efficiently and effectively in the ‘customer’s own words’ and guide customers toward a specific resolution. For resolution, we use MUM to rank and serve resolutions on support interfaces such as our search page and escalation forms.

These AI applications remove complexity for our customers. In certain instances, we now take customers directly to the right resolution. Initial results show a 20% to 40% increase, depending on product area, in our ability to answer customers’ questions, with neutral to positive changes in answer quality across all affected touchpoints.
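The resolution-ranking step can be illustrated in its simplest form: score each candidate resolution against the customer’s own description and serve the best matches. This is a deliberately simple stand-in, not the production approach. MUM is not a public library, so the sketch substitutes bag-of-words cosine similarity, and the help-article snippets and function names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

def _vector(text: str) -> Counter:
    # Lowercase word counts; a real system would use learned embeddings instead
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def rank_resolutions(query: str, resolutions: List[str], top_k: int = 3) -> List[Tuple[str, float]]:
    """Return the top_k resolutions most similar to the customer's description."""
    q = _vector(query)
    scored = [(r, cosine(q, _vector(r))) for r in resolutions]
    return sorted(scored, key=lambda s: s[1], reverse=True)[:top_k]

# Hypothetical help-article snippets
articles = [
    "Reset your password from the account recovery page",
    "Clear the YouTube app cache if videos will not play",
    "Update Android to fix battery drain after an app update",
]
query = "my videos will not play in the youtube app"
print(rank_resolutions(query, articles, top_k=1))
```

Swapping `_vector` for a learned text-embedding model would keep the same ranking interface while capturing paraphrases that share no words with the article.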

Resulting customer engagement

A primary metric of self-help success is the support escalation rate: how often a customer cannot solve the problem alone and needs help from a human representative. Our experiments showed a 5.3pp reduction (95% CI 3.9pp to 6.7pp) in contact rate (escalations) versus control, 2.8 times the performance achieved with the latest neural networks using long short-term memory (LSTM). We achieved this reduction with no degradation in our high CSAT compared with agent benchmarks.
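A reduction in escalation rate reported in percentage points, with a 95% interval, is a difference between two proportions. A minimal sketch of the standard Wald interval for that difference follows; the paper does not report its sample sizes or method, so the counts below are invented purely to show the arithmetic and will not reproduce the paper’s interval.

```python
import math
from typing import Tuple

def diff_in_proportions_ci(
    esc_control: int, n_control: int, esc_treat: int, n_treat: int, z: float = 1.96
) -> Tuple[float, float, float]:
    """Reduction in escalation rate (control minus treatment) with a Wald CI.

    Returns (point, lower, upper) as fractions; multiply by 100 for percentage points.
    """
    p_c = esc_control / n_control
    p_t = esc_treat / n_treat
    diff = p_c - p_t
    # Unpooled standard error of the difference between two independent proportions
    se = math.sqrt(p_c * (1 - p_c) / n_control + p_t * (1 - p_t) / n_treat)
    return diff, diff - z * se, diff + z * se

# Hypothetical counts: 2,000 of 10,000 control sessions escalated vs. 1,470 of 10,000 treated
point, lo, hi = diff_in_proportions_ci(2_000, 10_000, 1_470, 10_000)
print(f"reduction = {point * 100:.1f}pp, 95% CI [{lo * 100:.1f}pp, {hi * 100:.1f}pp]")
```

The interval narrows roughly with the square root of the sample size, which is one reason large-scale experiments can report tight intervals around small absolute changes.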

Customer auto-match: Serve customers faster after signup, shortening time to value

Helping Google Ads customers starts with understanding which campaigns and accounts they are using to achieve their digital marketing goals. At Google’s scale, this is quite complex: millions of new accounts are created each year, with little customer data collected to avoid adding unnecessary friction during signup. Customers use Google Ads through different legal entities, divisions, third-party agencies, and consultants, and there is often no easy way to determine the right person or group with whom Google should engage.

To identify which accounts belonged to each customer, we initially asked several hundred representatives to manually examine each new Ads account and connect it to the right entity in our customer hierarchy. This worked, but it was slow and hard to scale to the ever-growing set of Ads customers. We needed a more automated solution.

Innovative AI application

To solve this problem, we implemented a set of approaches to match new accounts to their customers more readily:

A combination of AI models that would identify the most likely owner of a new account in our customer hierarchy

A rule-based system that allowed our teams to manually specify high-confidence rules and signals for handling simple cases

As the final line of defense, a small team of analysts to resolve the most complex and ambiguous accounts, and build datasets for ML training and validation
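One way to combine these three layers is as a cascade. The sketch below orders them so cheap, high-confidence rules run first, a model decides the remaining accounts only when its confidence clears a threshold, and everything else is queued for analysts, whose labels could then feed model training. The ordering, the 0.9 threshold, and every name here (`NewAccount`, `Matcher`, `domain_rule`, `toy_model`) are assumptions for illustration; the paper does not describe how its layers are wired together.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

@dataclass
class NewAccount:
    account_id: str
    billing_domain: str
    business_name: str

# A rule returns a customer id when it is certain, otherwise None
Rule = Callable[[NewAccount], Optional[str]]
# A model returns (best customer id, confidence in [0, 1])
Model = Callable[[NewAccount], Tuple[str, float]]

@dataclass
class Matcher:
    rules: List[Rule]
    model: Model
    threshold: float = 0.9  # hypothetical confidence cut-off
    analyst_queue: List[NewAccount] = field(default_factory=list)

    def match(self, acct: NewAccount) -> Optional[str]:
        for rule in self.rules:  # 1. high-confidence manual rules
            owner = rule(acct)
            if owner is not None:
                return owner
        owner, conf = self.model(acct)  # 2. ML model, trusted only above the threshold
        if conf >= self.threshold:
            return owner
        self.analyst_queue.append(acct)  # 3. ambiguous: route to analysts for labeling
        return None

# Hypothetical rule and model for the demo
KNOWN_DOMAINS: Dict[str, str] = {"example-shoes.com": "cust_42"}

def domain_rule(acct: NewAccount) -> Optional[str]:
    return KNOWN_DOMAINS.get(acct.billing_domain)

def toy_model(acct: NewAccount) -> Tuple[str, float]:
    # Stand-in for a trained model: confident only when the name mentions "shoes"
    return ("cust_42", 0.95) if "shoes" in acct.business_name.lower() else ("cust_7", 0.4)

m = Matcher(rules=[domain_rule], model=toy_model)
print(m.match(NewAccount("a1", "example-shoes.com", "Example Shoes")))  # rule hit
print(m.match(NewAccount("a2", "agency.net", "Shoes Campaign EU")))  # model hit
print(m.match(NewAccount("a3", "agency.net", "Q3 Campaign")))  # queued for analysts
```

Raising `threshold` sends more accounts to analysts and fewer wrong matches to customers; the analyst queue is also where new labeled training data accumulates.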

Resulting customer engagement

Customers benefit most, with a tenfold reduction in time to value from our engagement teams. Google representatives also appreciate the significant reduction in toil: we have increased productivity by the equivalent of more than 150 employees annually.

Representative Smart-Reply: Improve the quality of customer service chat

Google Support offers personalized one-on-one support over chat, email, and phone to certain consumer and business customers. Initially, representatives alone served these engagements, but as Google’s product ecosystem grows in complexity, it is hard for any one person to remember the latest policies in each market for each product. We also wanted to shorten time to resolution and increase customer satisfaction amid this growing complexity.

Innovative AI application

We invested in AI-suggested replies for representatives. During a live chat interaction, a representative sees an AI-suggested ‘next best response’ to send to the customer. The representative either approves the AI suggestion or overrides it with their own input. The suggestion model is trained on representative transcripts and optimized for representative acceptance and resolution, and each case interaction increases the model’s confidence for future recommendations.

After success with early iterations, we began experimenting with Smart Reply in a supervised mode, in which an AI-suggested reply is auto-sent to the customer after a fixed time. The human representative can opt out of sending the message; if the representative does not intervene, the message is sent automatically.

This evolution from representative opt-in to opt-out points to a future where representatives move from handling cases manually with opt-in recommendations to supervising AI with minimal human involvement, freeing them to manage complex customer escalations. Recently, we observed the first instance where a representative-customer conversation on a complex support issue was fully handled by the model with no manual intervention from the human supervisor.
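The opt-out mode described above is essentially a timeout: the AI suggestion goes out unless the representative acts first. The sketch below shows that control flow with Python’s `asyncio`, under stated assumptions: the `RepAction` type, its three action kinds, and the queue-based interface are hypothetical, and the paper does not describe the real system’s timing or interfaces.

```python
import asyncio
from dataclasses import dataclass
from typing import Optional

@dataclass
class RepAction:
    # Hypothetical representative decision: "approve", "edit", or "cancel"
    kind: str
    text: Optional[str] = None

async def supervised_send(suggestion: str, rep_actions: asyncio.Queue, timeout_s: float) -> Optional[str]:
    """Return the message that is sent to the customer, or None if the representative cancels."""
    try:
        action = await asyncio.wait_for(rep_actions.get(), timeout=timeout_s)
    except asyncio.TimeoutError:
        # No intervention within the window: auto-send the AI suggestion (opt-out behavior)
        return suggestion
    if action.kind == "cancel":
        return None
    if action.kind == "edit":
        return action.text
    return suggestion  # "approve"

async def demo():
    actions: asyncio.Queue = asyncio.Queue()
    suggestion = "Try clearing the app cache."
    # Representative does nothing: the suggestion is sent after the timeout
    auto = await supervised_send(suggestion, actions, timeout_s=0.05)
    # Representative overrides before the timeout: their text is sent instead
    await actions.put(RepAction("edit", "Please restart the device first."))
    edited = await supervised_send(suggestion, actions, timeout_s=0.05)
    return auto, edited

print(asyncio.run(demo()))
```

In opt-in mode the same function would simply wait without a timeout; moving to opt-out changes only what happens when the representative stays silent.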

Resulting customer engagement

Google representatives loved the assistance, giving it 95% CSAT. Early results indicate that customers resolved their issues faster and more accurately, based on key leading indicators such as a 44% reduction (95% CI 42.1% to 45.9%) in manual representative messages in chat. Smart Reply also led to a 48% increase (95% CI 46.1% to 49.9%) in customer click-through rate (CTR) to self-guided support pages. As a result, we have reduced our dependence on fallible human effort in high-pressure, repetitive situations and improved the quality of our customers’ help experience.

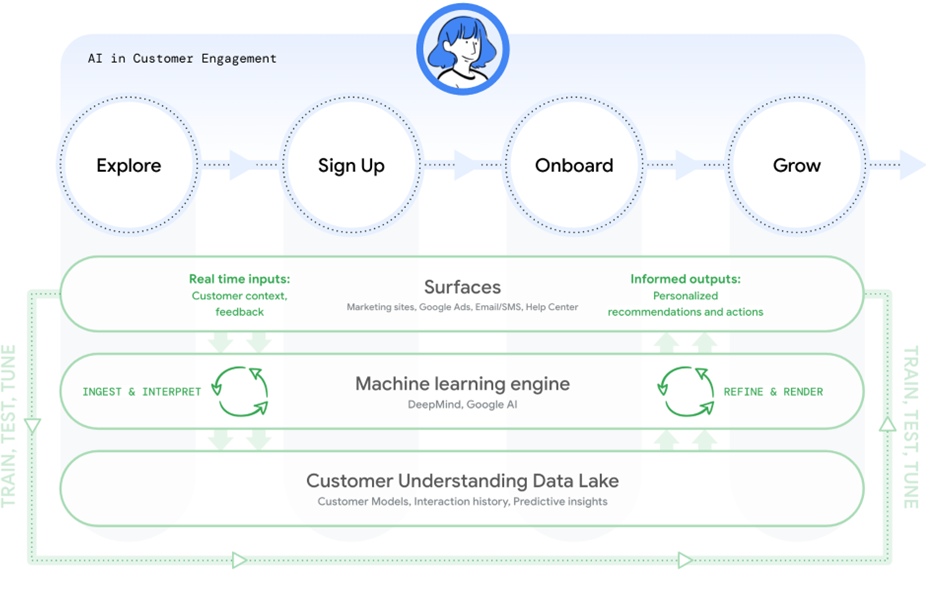

Google’s technology architecture for AI in customer engagement

To power the case studies above, we built a technology architecture oriented to the customer’s lifecycle, increasingly engaging with customers to better understand their goals and providing assistance and guidance in an easy-to-use manner. There are three key components:

The Customer Understanding Data Lake collects, curates and derives valuable privacy-preserving insights to create a complete picture of customers in our ads business and power our AI layer.

Our machine learning engine is composed of AI/ML technologies within Google, finely tuned to solve complex and unique problems.

Finally, the resulting insights and actions are carefully integrated into customer touchpoints (e.g., marketing sites, help center, core products, sales tools) and personalized to each customer’s journey, actions, and context.

Each element of our architecture was custom-built and is optimized to help our customers achieve their business goals with as little friction as possible.

TECHNOLOGY ARCHITECTURE FOR AI IN CUSTOMER ENGAGEMENT (SIMPLIFIED)

Key leadership learning points

Measurement rigor

To confidently invest in a portfolio of AI projects, we must measure the impact of each project and understand which bets have been implemented successfully. We anchor on total impact for our customers and the company, which is the product of scale and value per unit.

We run randomized controlled trials (RCTs, or A/B tests) and use proven data science techniques to establish causality between our work and the impact metrics, accepting only results that are statistically significant at a stated confidence level, typically 95%, with a narrow confidence interval.
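An RCT depends on assigning units to treatment or control at random but consistently. The paper does not say how its units are assigned; a common approach, shown here as a minimal sketch, hashes the experiment name together with a unit id into a stable bucket. The function name, the experiment name `smart_focus_v1`, and the 50/50 split are hypothetical.

```python
import hashlib

def assign_arm(unit_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Deterministically assign a unit (e.g., a customer) to 'treatment' or 'control'.

    Hashing the experiment name with the unit id gives a stable, effectively random
    bucket: the same customer always lands in the same arm of a given experiment,
    while different experiments get roughly independent assignments.
    """
    digest = hashlib.sha256(f"{experiment}:{unit_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # value in [0, 1)
    return "treatment" if bucket < treatment_share else "control"

arms = [assign_arm(f"cust_{i}", "smart_focus_v1") for i in range(10_000)]
print(arms.count("treatment") / len(arms))  # close to 0.5
```

Deterministic assignment matters for lagging metrics such as spend over several weeks, because a customer must stay in the same arm for the whole measurement window.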

To ensure consistency, we do not allow teams to grade their own homework; instead, a centralized, independent group of data scientists and business experts certifies impact measurement and provides guidance. In aggregate, we are confident we are delivering clear, quantified value for customers and powering a portion of the growth of Google’s Ads business.

Because of the high level of rigor required to show statistically significant results, this type of impact is inherently a lagging indicator. To make decisions along the way, we consider leading indicators such as sentiment, repeat usage, task success, and assistance feedback on both the customer- and representative-facing experiences.

Sentiment is measured both within a workflow (transactional) and outside it (relationship). Our AI-powered experiences score 10pp to 20pp higher than non-AI-powered ones and have been key to breaking through the 75% CSAT threshold for customer engagement products.

Repeat usage is an improved measure of coverage and adoption that allows us to detect early promise in a new AI feature. In the Smart Reply example, this might be the difference between a feature being used a couple of times a day and being used multiple times in every chat conversation. Improvements of more than 40pp are possible, as we saw with the reduction in manual representative chat messages.

Task success is a broad term that encompasses the achievement of a customer’s or representative’s objective. In the sales domain, this would be pitch rate or win rate, while in support it could be first-time resolution or total resolution time. Historical baselines give us a clear threshold to aim for, and we have seen individual AI solutions deliver tenfold step changes in success rates on hard-to-improve tasks.

Assistance feedback helps us understand when an AI failed and needed human intervention. We intentionally design loops that have a human available to train our systems – and representatives are a great way to build confidence that an AI solution is ready to go directly to the customer. We use this as an indicator for how quickly we are learning and training the system, and a preview of where the system may need guardrails before going to a customer.

Finally, projects that successfully move the leading indicators above often go on to produce lagging indicator success as well. We use a three-stage funnel to move from estimation to validation to certified impact, with historical comparables at each step.

Support frameworks

With customer value and ROI as our north star, we found a need for frameworks that orient teams in the same direction and guide near-term building while taking steps toward an aspirational long-term vision. These frameworks clarify what exists today and what should exist in the future, with a coordinated path to get there that keeps us true to our north star.

For example, the customer success flywheel clarified the need for deep customer understanding to provide optimal customer value. The 2x2 framework maps customer value against human potential and provides a single methodology to identify, develop, and prioritize opportunities.

Tips on leveraging AI

With the growing number of AI capabilities and business applications, people are often tempted to ‘catch the wave’ to avoid missing out. As a result, organizations often spend a significant amount of time trying out various technologies and solutions. To balance maximum business impact with fast time-to-market, we recommend three things:

Identify the most important use cases before picking the technology

Treat AI solutions as building blocks that you can assemble to solve the bigger problem

Prototype with simple solutions with a focus on learning

In summary, our effort to manage the exponential demand on our marketing, sales, and support teams led to many AI-powered initiatives. Each experience is optimized for different business goals but developed with our customer-first principle, helping us deliver the best possible experiences. The prioritization framework discussed in this paper also emerged as a second important guide, challenging teams to push AI past the conventional boundaries of human ability and preventing them from optimizing for easy wins that do not prioritize our customers’ business goals.

Our journey will never be done and we continue to look for new ways to deliver more value to our customers.
