Google DeepMind Breakthrough Is ‘Milestone’ Toward AGI

AlphaGeometry can solve Olympiad-level geometry problems, showing that AI can develop robust reasoning skills without human demonstrations


At a Glance

- Google DeepMind’s AlphaGeometry is a powerful new open-source model for solving geometry problems.

- Using a unique neuro-symbolic system, it nearly matched the performance of an average International Mathematical Olympiad gold medalist.

- The researchers called this a 'milestone' that brings AI one step closer to more advanced and general systems.

Researchers at Google DeepMind have developed an AI system that solves Olympiad geometry problems at close to the level of an International Mathematical Olympiad gold medalist.

Dubbed AlphaGeometry, the system can solve complex geometry problems even though it was trained without any human demonstrations.

In a paper published in Nature, the researchers wrote that the model “is a breakthrough in AI performance.” This matters because AI systems have struggled with complex problems in geometry and mathematics “due to a lack of reasoning skills and training data.”

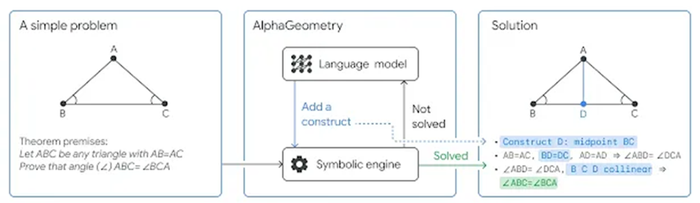

The team overcame this weakness by building a system that pairs a neural language model, valued for its predictive power, with a rule-bound deduction engine. When the deduction engine gets stuck, the system asks the language model for suggestions, and the two work in tandem to find a solution.

The team also developed a method to generate a “vast” pool of 100 million unique synthetic training examples, so no human demonstrations were needed to train the model.

The result was a breakthrough performance. “Solving Olympiad-level geometry problems is an important milestone in developing deep mathematical reasoning on the path towards more advanced and general AI systems,” the researchers wrote.

Co-author Thang Luong tweeted that "our work marks an important milestone towards advanced reasoning, which, I believe, is the key prerequisite for AGI."

AlphaGeometry’s code and model are available on GitHub. The project is open source and available for commercial use under the Apache 2.0 license.

AI model vs. top mathematicians

AlphaGeometry was tested on 30 Olympiad geometry problems and solved 25 within the standard Olympiad time limit. The average human gold medalist solved 25.9 of these problems, while the previous state-of-the-art system solved just 10 in the same time.

Credit: Google DeepMind

The two halves of the model’s neuro-symbolic system worked together to solve the problems. Neural language models are quick to spot patterns and relationships in data but cannot robustly explain their decisions. Symbolic deduction engines, by contrast, are “based on formal logic and use clear rules” to reach conclusions, but they can be slow and inflexible.

“AlphaGeometry’s language model guides its symbolic deduction engine towards likely solutions to geometry problems. Olympiad geometry problems are based on diagrams that need new geometric constructs to be added before they can be solved, such as points, lines or circles,” the researchers said. “AlphaGeometry’s language model predicts which new constructs would be most useful to add, from an infinite number of possibilities. These clues help fill in the gaps and allow the symbolic engine to make further deductions about the diagram and close in on the solution.”
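To make that division of labor concrete, here is a minimal, hypothetical Python sketch of such a loop. It is not DeepMind's code, and everything in it is an assumption for illustration: facts are plain strings, the "deduction engine" is simple forward chaining over two hand-written rules, and the "language model" is a stub (`stub_propose`) that returns auxiliary constructions from a fixed ranked list. The toy problem is the textbook proof that the base angles of an isosceles triangle ABC (AB = AC) are equal, where adding the midpoint M of BC is the construction that unlocks the deduction.

```python
"""Toy neuro-symbolic proof loop (a hypothetical sketch, not AlphaGeometry's code)."""
from typing import Callable, Optional

# A Horn rule: if every premise fact is known, the conclusion fact follows.
Rule = tuple[frozenset[str], str]
# An auxiliary construction: a label plus the new facts it introduces.
Construct = tuple[str, frozenset[str]]
Proposer = Callable[[set[str], str, list[str]], Optional[Construct]]


def deduce_closure(facts: set[str], rules: list[Rule]) -> set[str]:
    """Symbolic side: forward-chain over the rules until nothing new is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                changed = True
    return known


def solve(premises: set[str], goal: str, rules: list[Rule],
          propose: Proposer, max_constructs: int = 3) -> Optional[list[str]]:
    """Deduce; if stuck, ask the proposer for a construction, add it, and repeat.

    Returns the labels of the constructions used, or None if the search gives up.
    """
    facts = deduce_closure(premises, rules)
    used: list[str] = []
    while goal not in facts:
        if len(used) >= max_constructs:
            return None
        construct = propose(facts, goal, used)
        if construct is None:  # the proposer has nothing left to suggest
            return None
        label, new_facts = construct
        used.append(label)
        facts = deduce_closure(facts | new_facts, rules)
    return used


# Toy problem: in triangle ABC with AB = AC, prove angle ABC = angle ACB.
RULES: list[Rule] = [
    # SSS congruence, written out for this one pair of triangles.
    (frozenset({"AB = AC", "BM = CM", "AM = AM"}), "ABM congruent to ACM"),
    # Corresponding angles of congruent triangles (M lies on BC).
    (frozenset({"ABM congruent to ACM"}), "angle ABC = angle ACB"),
]

# Hypothetical stand-in for the language model: a fixed, ranked candidate list.
CANDIDATES: list[Construct] = [
    ("D = midpoint of AB", frozenset({"AD = DB"})),  # an unhelpful first guess
    ("M = midpoint of BC", frozenset({"BM = CM", "AM = AM"})),
]


def stub_propose(facts: set[str], goal: str, used: list[str]) -> Optional[Construct]:
    """Return the highest-ranked candidate not tried yet (ignores facts and goal)."""
    for label, new_facts in CANDIDATES:
        if label not in used:
            return label, new_facts
    return None


if __name__ == "__main__":
    print(solve({"AB = AC"}, "angle ABC = angle ACB", RULES, stub_propose))
```

Run as a script, it prints `['D = midpoint of AB', 'M = midpoint of BC']`: the first suggestion adds a fact that triggers no rule, so the loop asks for another construction, and the midpoint of BC lets the engine chain through congruence to the goal. In the real system, a trained language model ranks candidate constructions instead of a fixed list.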
