Scoping machine learning projects: The six questions each analytics translator has to know

Innovative ML projects can only succeed if they manage to transform a business problem into clear tasks data scientists can work on

June 26, 2020

Innovative machine learning projects can only succeed if they manage to transform a business problem into clear tasks data scientists can work on. Analytics translators, business analysts, and project managers can master this challenge by following the six guiding questions presented in this article.

The role of analytics and deep learning in a data-driven company is clear: generate knowledge for operational and strategic decision making.

Using machine learning, companies gain insights no human could derive. Only algorithms can analyze hundreds or thousands of parameters and attributes and millions of data points or log entries, and then filter out the four or five that matter, for example, for understanding customer behavior.

While data scientists and engineers know how to build and optimize machine learning algorithms and models, companies benefit from their work only if the project has a clear scope. Before anyone discusses and implements models, someone must understand the business requirements. Someone must define the project goals and intermediate milestones and work out concrete work packages for data scientists and engineers. This someone can be a project manager, a business analyst, or someone in the new role of analytics translator. They need people skills, project planning skills, and a good understanding of IT, plus a methodology for approaching the scoping challenge of machine learning projects.

In the following, we discuss six questions that help analytics translators, business analysts, and project managers define a clear scope and give data scientists all the information they need to work efficiently.

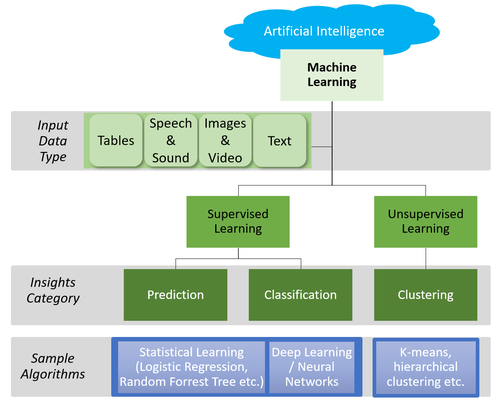

Figure 1: Structuring the algorithm selection process

Question 1: The business goal

The first question is a generic project management question: what does the business want to achieve? When the business spends half a million or three million on implementing a machine learning solution, this is a clear sign of trust in the innovation potential of machine learning. The business believes that machine learning can help it run better. However, to prevent disappointment later, the project has to understand:

What exactly is the business goal? Why is the business investing money?

How can you check whether the project delivered everything they expected?

How does the project relate to strategic and tactical business goals?

What is the expected timeline?

How much are they willing to spend?

Answering these questions gets projects on track and keeps them there. Furthermore, the analytics translator and the project manager can verify whether the expectations fit the actual project setup and timeline.

Question 2: Insights categories

Once the business goal is clear, the next step is to clarify the expected type of insight (prediction, classification, or clustering) and the kind of data the algorithms operate on.

Since the terminology in the machine learning world is sometimes confusing and overlapping, we base our discussion on a highly simplified model (Figure 2), which focuses on the concepts needed for the interaction between the analytics translator, the business, and the data scientists. In machine learning, a sub-discipline of artificial intelligence, two classes of algorithms are the most relevant ones in practice: supervised and unsupervised learning. Supervised learning algorithms get training data consisting of inputs and the corresponding correct outputs. There is always a pair, such as an English word and its correct German translation: <red, rot>, <hat, Hut>. Classification is one type of supervised learning.
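To show what such input/output pairs can look like in practice, here is a minimal Python sketch. It assumes hypothetical training pairs (fruit diameter and weight as input, "cherry" or "tomato" as output) and uses a 1-nearest-neighbor rule as a simple stand-in for a classification algorithm; the data and the function name `classify` are illustrative only.

```python
import math

# Hypothetical labeled training data: each entry pairs an input
# (diameter in cm, weight in g) with the correct output label.
TRAINING_PAIRS = [
    ((2.0, 8.0), "cherry"),
    ((2.3, 10.0), "cherry"),
    ((6.5, 120.0), "tomato"),
    ((7.0, 150.0), "tomato"),
]


def classify(features, training_pairs=TRAINING_PAIRS):
    """Return the label of the closest training input (1-nearest neighbor)."""
    _, label = min(
        training_pairs,
        key=lambda pair: math.dist(pair[0], features),
    )
    return label


print(classify((2.1, 9.0)))    # prints "cherry"
print(classify((6.8, 140.0)))  # prints "tomato"
```

Like any classifier, this sketch can only output labels that appear in the training pairs, which is what makes classification a supervised task.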

Many people, even without any data science experience, know a classification algorithm from the Harry Potter books and movies: the Sorting Hat. The hat tells each new student which of the four houses fits them best. Another example of classification is deciding whether an image contains a dog or a cat. Besides classification, there is the insights category of prediction. Such algorithms predict which customers are most likely to buy a pink sports car or which might fail to pay back a mortgage, or they predict how much ice cream shops can expect to sell next week based on last year’s sales numbers and this week’s weather.

Figure 2: A simplified visualization of the machine learning space
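The ice cream example is a prediction of a number. As a rough sketch, assuming hypothetical weekly sales figures and only one input (the average temperature; a real model would also use inputs such as last year’s sales), an ordinary least-squares line can produce such a forecast:

```python
# Hypothetical history: (average temperature in °C, ice cream cones sold that week).
HISTORY = [(15.0, 310.0), (20.0, 390.0), (25.0, 510.0), (30.0, 590.0)]


def fit_line(points):
    """Ordinary least-squares fit of sales = intercept + slope * temperature."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    var_x = sum((x - mean_x) ** 2 for x, _ in points)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return intercept, slope


intercept, slope = fit_line(HISTORY)
forecast = intercept + slope * 28.0  # hypothetical weather forecast: 28 °C next week
print(f"Expected sales next week: {forecast:.0f} cones")
```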

Unsupervised learning means that you only have input training data, without an expected, clearly defined outcome. For example, a clustering algorithm looks at all data points and comes up with, e.g., three groups of data points that lie close together. Sales and marketing departments might use such algorithms to identify customer groups with similar behavior (e.g., price-sensitive, hedonistic).
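To illustrate clustering without any labels, the following sketch runs plain k-means (Lloyd’s algorithm) on hypothetical customer data with two attributes: the average discount used and the average basket value. The data, the attribute choice, and the deterministic farthest-first initialization are assumptions made to keep the example short and reproducible:

```python
import math

# Hypothetical customers: (average discount used in %, average basket value in EUR).
CUSTOMERS = [
    (40, 20), (35, 25), (45, 18),
    (5, 120), (8, 150), (3, 135),
    (20, 60), (18, 55), (22, 65),
]


def farthest_first(points, k):
    """Deterministic start: repeatedly pick the point farthest from the chosen centroids."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    return centroids


def k_means(points, k, iterations=20):
    """Group unlabeled points into k clusters with Lloyd's k-means algorithm."""
    centroids = farthest_first(points, k)
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for point in points:
            nearest = min(range(k), key=lambda i: math.dist(point, centroids[i]))
            clusters[nearest].append(point)
        # Update step: move each centroid to the mean of its cluster (keep it if empty).
        centroids = [
            tuple(sum(values) / len(cluster) for values in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return clusters


for cluster in k_means(CUSTOMERS, k=3):
    print(cluster)
```

The algorithm only returns groups; naming them (e.g., "price-sensitive") remains a task for the business and the data scientists. The number of clusters, k, also has to be chosen up front.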

Once the insights category is understood, the project has to clarify which kind of data the machine learning algorithms operate on. There are various options, with tables being the most popular. Database tables and CSV files are obvious examples, though it does not matter how the data is stored. What matters is that the machine learning algorithms get structured, table-like input. Thus, data can be stored in Hadoop as long as the training data is delivered as a table, e.g., generated with Hive queries using their schema-on-read concept.
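The following Python sketch illustrates why the storage technology matters less than the table shape: once the data arrives as rows and columns, it becomes a list of feature rows plus a label column. The column names and values are hypothetical, and the text could equally come from a CSV file, a database export, or a Hive query result:

```python
import csv
import io

# Hypothetical extract; the algorithm only sees rows and columns,
# not where they were stored.
RAW = """customer_id,age,household_size,bought
1001,34,2,1
1002,51,4,0
1003,27,1,1
"""


def load_table(text, feature_columns, label_column):
    """Turn tabular text into a list of feature rows and a list of labels."""
    features, labels = [], []
    for row in csv.DictReader(io.StringIO(text)):
        # Identifiers such as customer_id are deliberately not used as features.
        features.append([float(row[name]) for name in feature_columns])
        labels.append(int(row[label_column]))
    return features, labels


X, y = load_table(RAW, ["age", "household_size"], "bought")
print(X)  # [[34.0, 2.0], [51.0, 4.0], [27.0, 1.0]]
print(y)  # [1, 0, 1]
```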

Images and videos are another type of input data. Examples are images taken by a camera during the production process to check whether the produced items are of good quality, or video streams from CCTV cameras to detect persons entering restricted areas.

Speech and sound (e.g., engine noise) or text in the form of documents or emails are additional options. At the moment, they are used less often. Since many companies have less experience with the existing services and libraries for such data, projects have to make sure that they stay on track and deliver a practical solution that is useful for the business.

For the actual implementation, data scientists can choose from many existing machine learning algorithms. Popular choices are deep learning (neural networks) or traditional statistical learning algorithms such as logistic regression or random forests. However, algorithms should not be chosen in this project phase, but by the data scientists in the actual implementation phase (see Figure 2).

Question 3: The training set

All machine learning algorithms depend on a set of training data to learn a model – and the accuracy of the model depends not only on the machine learning algorithm but on the quality of the training data as well.

In the case of supervised learning, the training data consists of sample input values plus the expected, correct output. Training a model to distinguish between tomatoes and cherries requires many sample images, each with a label stating whether it shows a cherry or a tomato. Getting such labeled training data is a challenge for many projects.

In general, there are three main approaches: logs, manual labeling, and downloading existing training sets from the web. Existing training sets enable researchers to develop better algorithms for standard problems such as image classification. They are useful for finding new, better solutions to problems that already have many solutions. This is basic research and usually not in the interest of commercial projects. Thus, commercial projects either use logs (e.g., the shopping history of customers or click logs showing how customers navigate a web page) or time-consuming manual labeling (e.g., humans mark whether an image contains a cherry or a tomato).
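Logs often contain labels implicitly. As a minimal sketch, assuming a hypothetical click log of (customer, event, product) entries, every product view can be labeled 1 if the same customer also purchased that product and 0 otherwise. The sketch ignores timestamps, which a real project would have to take into account:

```python
# Hypothetical click log entries: (customer_id, event, product).
LOG = [
    ("c1", "view", "sports_package"),
    ("c1", "purchase", "sports_package"),
    ("c2", "view", "sports_package"),
    ("c3", "view", "movie_package"),
    ("c3", "purchase", "movie_package"),
    ("c2", "view", "movie_package"),
]


def labels_from_log(log):
    """Label every (customer, product) view with 1 if it led to a purchase, else 0."""
    viewed = set()
    purchased = set()
    for customer, event, product in log:
        if event == "view":
            viewed.add((customer, product))
        elif event == "purchase":
            purchased.add((customer, product))
    return {pair: int(pair in purchased) for pair in sorted(viewed)}


for (customer, product), label in labels_from_log(LOG).items():
    print(customer, product, label)
```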

Obviously, the quality and correctness of the labels impact the prediction quality of the machine learning model. Five top biologists discussing every picture get better results than a drunken monkey. So, automating human judgment (e.g., which parts of a legal document are important) requires that someone with adequate knowledge prepares the training set, even if this person is expensive.
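One common way to make label quality visible when several people label the same item is a majority vote combined with an agreement score. The sketch below assumes hypothetical annotations from five labelers per image; it is an illustration, not a replacement for expert judgment:

```python
from collections import Counter

# Hypothetical labels from five annotators for the same images.
ANNOTATIONS = {
    "img_001.jpg": ["cherry", "cherry", "cherry", "tomato", "cherry"],
    "img_002.jpg": ["tomato", "cherry", "tomato", "cherry", "tomato"],
}


def consensus(votes):
    """Return the majority label and the share of annotators who agree with it."""
    label, count = Counter(votes).most_common(1)[0]
    return label, count / len(votes)


for image, votes in ANNOTATIONS.items():
    label, agreement = consensus(votes)
    print(f"{image}: {label} (agreement {agreement:.0%})")
```

Items with low agreement are natural candidates for review by a domain expert.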

Question 4: “Prediction only” versus “Prediction and Explanation”

When the sales department of a TV streaming platform tries to boost sales, they can ask two similar questions requiring the data scientists to train two different models. The first question is “Whom should we contact to sell what?”, the second “What are the characteristics of potential customers buying or not buying a specific product?”

The first question, “Whom should we contact to sell what?”, is a highly operational question. The sales managers want a list of whom to call, email, or send a letter to, and what to offer each of them. The sales managers do not care whether the list comes from a statistical model, a 100-layer neural network nobody understands, or a fortune-teller using a crystal ball. The question is (just) about how accurately the model predicts who buys.

This is different for the second question: “What are the characteristics of potential customers buying or not buying a specific product?” Again, the data scientists need a model that predicts who is likely to buy what. This time, however, the model must be easy to understand so that it is clear which factors drive the customers’ buying behavior. A fortune-teller or a hard-to-understand 100-layer neural network might not be the right solution for such a question, whereas simpler and less accurate statistical models can be helpful. The beauty of many statistical models is that it is clear at first glance that, e.g., age and household influence the buying decision whereas gender does not.
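To make the difference tangible, here is a minimal sketch of a “prediction and explanation” model: a logistic regression trained with plain gradient descent in Python. The training data is hypothetical (age in decades relative to 40, gender coded as -1/+1, and whether the customer bought) and is constructed so that buying depends on age but not on gender. The learned weights can be read directly: a clearly positive age weight and a gender weight of (almost) zero.

```python
import math

# Hypothetical history: (age in decades relative to 40, bought 0/1).
BASE = [(-2, 0), (-1, 0), (-1, 1), (0, 0), (0, 1), (1, 1), (1, 0), (2, 1)]
# Each history entry appears once per gender (coded -1/+1), so gender carries no signal.
ROWS = [(age, gender, bought) for age, bought in BASE for gender in (-1, 1)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(rows, learning_rate=0.1, epochs=2000):
    """Fit P(buy) = sigmoid(bias + w_age * age + w_gender * gender) by gradient descent."""
    bias = w_age = w_gender = 0.0
    n = len(rows)
    for _ in range(epochs):
        g_bias = g_age = g_gender = 0.0
        for age, gender, bought in rows:
            error = sigmoid(bias + w_age * age + w_gender * gender) - bought
            g_bias += error
            g_age += error * age
            g_gender += error * gender
        # Step against the average gradient of the log-loss.
        bias -= learning_rate * g_bias / n
        w_age -= learning_rate * g_age / n
        w_gender -= learning_rate * g_gender / n
    return {"bias": bias, "age": w_age, "gender": w_gender}


weights = train_logistic_regression(ROWS)
for name, value in weights.items():
    print(f"{name:>6}: {value:+.3f}")
```

A deep neural network might predict equally well on richer data, but it does not offer weights that can be read off like this.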

The decision between a “prediction only” and a “prediction and explanation” model is not final, but it is needed to define a clear project scope. Extending a solution later by training one or two additional models requires limited effort, at least if everything else works.

Question 5: The prediction frequency

The prediction frequency has no direct impact on the model training or the algorithm, but it does impact platform decisions, e.g., whether a Jupyter notebook is sufficient or whether the trained model has to be implemented in Java.

Prediction frequency refers to how often the model is used to make predictions. Is it a strategic question that is asked just once (e.g., should the company open new branches in big or small cities?), or are there thousands of predictions per second, as in fraud detection for credit card payments?

Knowing how often the model is used indicates whether the model needs to be integrated with existing solutions. It does not impact the model training much, but it has a massive impact on the overall project scope.
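A quick back-of-the-envelope calculation shows why the frequency matters for the platform. Assuming a hypothetical rate of 2,000 card payments per second, the sketch below computes the time each worker may spend per prediction and the daily prediction volume; it ignores network overhead and traffic peaks:

```python
SECONDS_PER_DAY = 24 * 60 * 60


def latency_budget_ms(predictions_per_second, workers=1):
    """Time each worker may spend per prediction to keep up with the request rate."""
    return 1000.0 * workers / predictions_per_second


def predictions_per_day(predictions_per_second):
    """Total number of predictions at a constant request rate."""
    return predictions_per_second * SECONDS_PER_DAY


rate = 2_000  # hypothetical card payments to score per second
print(latency_budget_ms(rate))             # 0.5 (ms per prediction, one worker)
print(latency_budget_ms(rate, workers=8))  # 4.0 (ms per prediction, eight workers)
print(predictions_per_day(rate))           # 172800000
```

A strategic question answered once in a notebook has no such budget, whereas half a millisecond per prediction points towards integrating the model into the production payment systems.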

Question 6: The novelty factor

Building and training machine learning models and experimenting with them is great fun. Many open source tools are available for free. Cloud providers such as AWS even provide complete data science platforms where data scientists can build, test, and train new types and architectures for image classification or object detection. On the other hand, pre-trained neural networks are available for download on the web. AWS, for example, provides the Amazon Rekognition service, which developers can call with an image to have the objects in it identified.

Developers have a wide variety of options for what to use as building blocks and what to develop themselves. Thus, it is important to clarify what kind of innovation the machine learning algorithm should bring. Mostly, the innovation lies in using machine learning algorithms and models rather than developing them. As a consequence, projects need clear guidance on what has to be developed in-house and where existing external solutions and services are to be used. Once this is clear, the last of the six questions is answered, and it is time to hand over to the developers and data scientists. It is their turn to choose frameworks and algorithms and to build the needed model and technical solution.

Klaus Haller is a Senior IT Project Manager with in-depth business analysis, solution architecture, and consulting know-how. His experience covers Data Management, Analytics & AI, Information Security and Compliance, and Test Management. He enjoys applying his analytical skills and technical creativity to deliver solutions for complex projects with high levels of uncertainty. Typically, he manages projects consisting of 5-10 engineers.

Since 2005, Klaus has worked in IT consulting and for IT service providers, often (but not exclusively) in the financial industry in Switzerland.
